- Part 1: Deploying K3s, network and host machine security configuration

- Part 2: Securing the K3s cluster

- Part 3: Creating a security responsive K3s cluster

This is part 1 of a three-part blog series on deploying K3s, a certified Kubernetes distribution from SUSE Rancher, in a secure and available fashion. A fully working Ansible project, https://github.com/digitalis-io/k3s-on-prem-production, has been made available to deploy and secure K3s for you.

Introduction

Running an on-premises Kubernetes cluster has many advantages: it can increase performance, lower costs, and sometimes cause fewer headaches. It also allows users who are unable to use the public cloud to operate in a “cloud-like” environment. It does this by decoupling dependencies and abstracting infrastructure away from your application stack, giving you the portability and scalability associated with cloud-native applications.

There are also obvious downsides to running your Kubernetes cluster on-premises, as it’s up to you to manage a series of complexities such as:

- Etcd

- Load Balancers

- High Availability

- Networking

- Persistent Storage

- Internal Certificate rotation and distribution

On top of this there is the inherent complexity of running such a large orchestration application, which means running:

- kube-apiserver

- kube-proxy

- kube-scheduler

- kube-controller-manager

- kubelet

All of these components must be correctly configured and must talk to each other securely (over TLS) and reliably.

But is there a simpler solution to this?

Introducing K3s

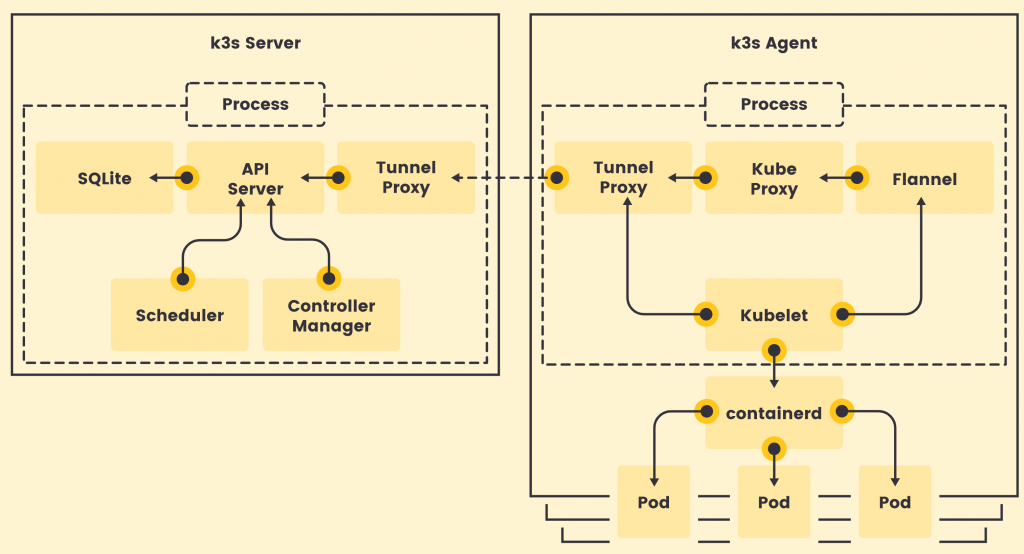

K3s is a fully CNCF (Cloud Native Computing Foundation) certified, compliant Kubernetes distribution by SUSE (formerly Rancher Labs) that is easy to use and designed to be lightweight.

To achieve this, it is packaged as a single binary of about 45MB that completely implements the Kubernetes APIs. To keep it light, a lot of extra drivers that are not strictly part of the core were removed; they can still easily be replaced with external add-ons.

So why choose K3s instead of full K8s?

Being a single binary, it’s easy to install and bring up, and it internally manages a lot of the pain points of K8s:

- Internally managed Etcd cluster

- Internally managed TLS communications

- Internally managed certificate rotation and distribution

- Integrated storage provider (local-path-provisioner)

- Low dependency on base operating system

K3s needs very little on the base host: just a recent kernel and `cgroups`.

All of the other utilities it needs are packaged internally.

This leads to really low system requirements: a worker node needs just 512MB of RAM.

Image Source: https://k3s.io/

K3s is a fully encapsulated binary that runs all the components in the same process. One of the key differences from full Kubernetes is that, thanks to Kine, it supports not only etcd to hold the cluster state, but also SQLite (for single-node, simpler setups) or external databases like MySQL and PostgreSQL (see our other blog posts on deploying PostgreSQL for HA and service discovery).
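As a rough sketch of what an external datastore looks like in practice, K3s can read its options from a config file whose keys mirror the command-line flags. The snippet below assumes the default `/etc/rancher/k3s/config.yaml` path and a PostgreSQL instance reachable at a made-up host name with made-up credentials; adapt all of them to your environment.

```yaml
# /etc/rancher/k3s/config.yaml on a server node (illustrative values only)

# Shared secret used by the nodes to join the cluster
token: "SECRET"

# Kine stores the cluster state in this database instead of the embedded etcd.
# Host, user, password and database name are hypothetical placeholders.
datastore-endpoint: "postgres://k3s:changeme@postgres.example.internal:5432/k3s"
```

The rest of this series sticks with the embedded etcd, so this file is not needed to follow along.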

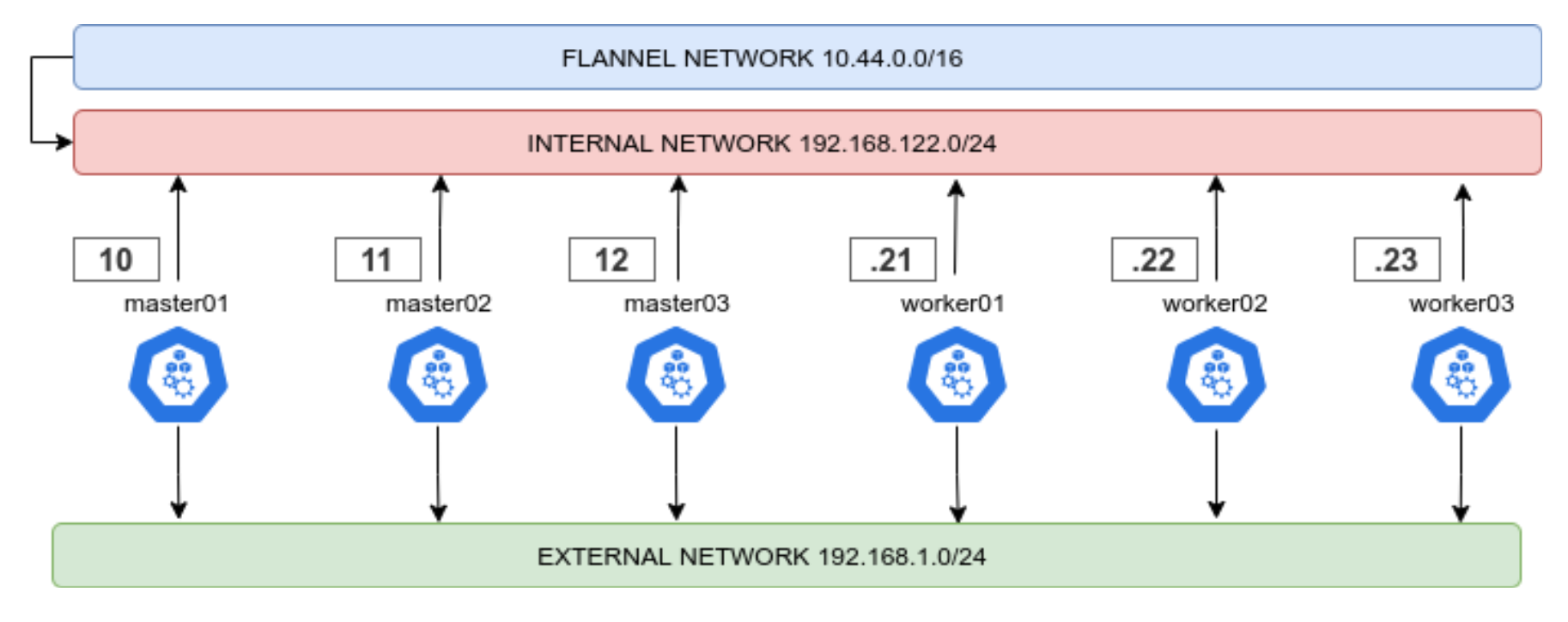

The following setup will be performed on pretty small nodes:

- 6 nodes

  - 3 master nodes

  - 3 worker nodes

- 2 cores per node

- 2 GB RAM per node

- 50 GB Disk per node

- CentOS 8.3
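For reference, a layout like this could be described with an Ansible inventory along the following lines. This is only a sketch: the `kube_master` group name matches the one used by the tasks later in this post, while the `kube_worker` group name, the host names and the addresses are placeholders made up for illustration.

```yaml
# inventory.yml (hypothetical host names and addresses)
all:
  children:
    # Control-plane nodes; the first entry bootstraps the cluster
    kube_master:
      hosts:
        master01:
          ansible_host: 192.168.122.11
        master02:
          ansible_host: 192.168.122.12
        master03:
          ansible_host: 192.168.122.13
    # Worker nodes (group name is an assumption, not taken from the project)
    kube_worker:
      hosts:
        worker01:
          ansible_host: 192.168.122.21
        worker02:
          ansible_host: 192.168.122.22
        worker03:
          ansible_host: 192.168.122.23
```

Because the tasks compare `ansible_hostname` with the inventory names, the inventory names should match the real host names of the machines.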

What do we need to create a production-ready cluster?

We need a highly available, resilient, load-balanced and secure cluster to work with. So without further ado, let’s get started with the base underneath: the nodes. This three-part blog series is a detailed walkthrough on how to set up the K3s Kubernetes cluster, with some snippets taken from the project’s GitHub repo: https://github.com/digitalis-io/k3s-on-prem-production

Secure the nodes

Network

First things first: we need a sound network layout for the nodes in the cluster. It will be split in two, an EXTERNAL and an INTERNAL network.

- The INTERNAL network is only accessible from within the cluster, and the Flannel network (using VXLAN) is built on top of it.

- The EXTERNAL network is used exclusively to serve traffic to the outside: it exposes only ports 80 and 443, plus 6443 for the K8s API (the latter can even be skipped).

This ensures that communication between internal cluster components is segregated from the rest of the network.

Firewalld

Another crucial piece of setup is firewalld. The first thing is to ensure that firewalld uses the iptables backend and not the nftables one, as the latter is still incompatible with Kubernetes. This is done in the Ansible project like this:

    - name: Set firewalld backend to iptables
      replace:
        path: /etc/firewalld/firewalld.conf
        regexp: FirewallBackend=nftables$
        replace: FirewallBackend=iptables
        backup: yes
      register: firewalld_backend

This will require a reboot of the machine.
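A minimal sketch of how that reboot could be triggered from the same play, using the `firewalld_backend` variable registered by the task above. The timeout value is an arbitrary choice, not something taken from the project.

```yaml
- name: Reboot the node so firewalld picks up the iptables backend
  reboot:
    reboot_timeout: 600   # seconds to wait for the node to come back (assumed value)
  # Only reboot when the backend line was actually rewritten
  when: firewalld_backend is changed
```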

Also we will need to set up zoning for the internal and external interfaces, and set the respective open ports and services.
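As an illustration, binding each interface to its zone could look like the tasks below. They are a sketch rather than the project's exact tasks: we assume `eth0` is the internal interface and `eth1` the external one, and we use the `internal` and `public` zones that ship with firewalld.

```yaml
- name: Bind the internal interface to the internal zone
  firewalld:
    zone: internal
    interface: eth0        # assumed internal NIC
    permanent: yes
    immediate: yes
    state: enabled

- name: Bind the external interface to the public zone
  firewalld:
    zone: public
    interface: eth1        # assumed external NIC
    permanent: yes
    immediate: yes
    state: enabled
```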

Internal Zone

For the internal network we want to open all the necessary ports for kubernetes to function:

- 2379/tcp # etcd client requests

- 2380/tcp # etcd peer communication

- 6443/tcp # K8s api

- 7946/udp # MetalLB speaker port

- 7946/tcp # MetalLB speaker port

- 8472/udp # Flannel VXLAN overlay networking

- 9099/tcp # Flannel livenessProbe/readinessProbe

- 10250-10255/tcp # kubelet APIs + Ingress controller livenessProbe/readinessProbe

- 30000-32767/tcp # NodePort port range

- 30000-32767/udp # NodePort port range
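One way to open these ports with Ansible is to loop over the list with the `firewalld` module. The task below is a sketch under the assumption that the internal interface already belongs to the `internal` zone; the list simply repeats the ports above.

```yaml
- name: Open the Kubernetes ports in the internal zone
  firewalld:
    zone: internal
    port: "{{ item }}"
    permanent: yes
    immediate: yes
    state: enabled
  loop:
    - 2379/tcp          # etcd client requests
    - 2380/tcp          # etcd peer communication
    - 6443/tcp          # K8s API
    - 7946/udp          # MetalLB speaker
    - 7946/tcp          # MetalLB speaker
    - 8472/udp          # Flannel VXLAN
    - 9099/tcp          # Flannel probes
    - 10250-10255/tcp   # kubelet APIs and ingress controller probes
    - 30000-32767/tcp   # NodePort range
    - 30000-32767/udp   # NodePort range
```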

We also want rich rules that whitelist the pod network. This should be the final result:

    internal (active)
      target: default
      icmp-block-inversion: no
      interfaces: eth0
      sources:
      services: cockpit dhcpv6-client mdns samba-client ssh
      ports: 2379/tcp 2380/tcp 6443/tcp 80/tcp 443/tcp 7946/udp 7946/tcp 8472/udp 9099/tcp 10250-10255/tcp 30000-32767/tcp 30000-32767/udp
      protocols:
      masquerade: yes
      forward-ports:
      source-ports:
      icmp-blocks:
      rich rules:
        rule family="ipv4" source address="10.43.0.0/16" accept
        rule family="ipv4" source address="10.44.0.0/16" accept
        rule protocol value="vrrp" accept

External Zone

For the external network we only want ports 80 and 443 and, only if needed, 6443 for the K8s API.

The final result should look like this:

    public (active)
      target: default
      icmp-block-inversion: no
      interfaces: eth1
      sources:
      services: dhcpv6-client
      ports: 80/tcp 443/tcp 6443/tcp
      protocols:
      masquerade: yes
      forward-ports:
      source-ports:
      icmp-blocks:
      rich rules:

SELinux

Another important point is that SELinux should be embraced, not deactivated! The smart people at SUSE Rancher provide the policy needed to make K3s work with SELinux in enforcing mode. Just install it!

    # Workaround to the RPM/YUM hardening
    # being the GPG key enforced at rpm level, we cannot use
    # the dnf or yum module of ansible
    - name: Install SELINUX Policies # noqa command-instead-of-module
      command: |
        rpm --define '_pkgverify_level digest' -i {{ k3s_selinux_rpm }}
      register: rpm_install
      changed_when: "rpm_install.rc == 0"
      failed_when: "'already installed' not in rpm_install.stderr and rpm_install.rc != 0"
      when:
        - "'libselinux' in ansible_facts.packages"

This assumes that SELinux is installed (RedHat/CentOS base). If it’s not present, the playbook will skip all configs and references to SELinux.
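Installing the policy only helps if SELinux actually stays in enforcing mode. The tasks below sketch one way to make sure of that with the `selinux` module; the `targeted` policy is an assumption that matches the CentOS default, and `package_facts` is there because the condition relies on `ansible_facts.packages` being populated.

```yaml
- name: Gather the list of installed packages
  package_facts:
    manager: auto

- name: Keep SELinux in enforcing mode
  selinux:
    policy: targeted     # assumed policy (CentOS default)
    state: enforcing
  # Skip hosts where SELinux is not installed at all
  when: "'libselinux' in ansible_facts.packages"
```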

Node Hardening

To be intrinsically secure, a network environment must be properly designed and configured. This is where the Center for Internet Security (CIS) benchmarks come in. CIS benchmarks are a set of configuration standards and best practices designed to help organizations ‘harden’ the security of their digital assets. They map directly to many major standards and regulatory frameworks, including NIST CSF, ISO 27000, PCI DSS, HIPAA, and more. The hardening can be further enhanced by adopting the Security Technical Implementation Guide (STIG).

All CIS benchmarks are freely available as PDF downloads from the CIS website.

The project repo includes an Ansible hardening role which applies the CIS benchmark to the base OS of the node. Alternatively, there are ready-to-use roles that we recommend running against your nodes, such as:

https://github.com/ansible-lockdown/RHEL8-STIG/

https://github.com/ansible-lockdown/RHEL8-CIS/

Having a correctly configured and secure operating system underneath kubernetes is surely the first step to a more secure cluster.

Installing K3s

We’re going to set up an HA installation using the embedded etcd included in K3s.

Bootstrapping the Masters

Getting started is dead simple. We first run the K3s server command on the first node like this:

    K3S_TOKEN=SECRET k3s server --cluster-init

and then join the other masters to it:

    K3S_TOKEN=SECRET k3s server --server https://<ip or hostname of server1>:6443

How does it translate to Ansible?

We just set up the first service, and subsequently the others:

    - name: Prepare cluster - master 0 service
      template:
        src: k3s-bootstrap-first.service.j2
        dest: /etc/systemd/system/k3s-bootstrap.service
        mode: 0400
        owner: root
        group: root
      when: ansible_hostname == groups['kube_master'][0]

    - name: Prepare cluster - other masters service
      template:
        src: k3s-bootstrap-followers.service.j2
        dest: /etc/systemd/system/k3s-bootstrap.service
        mode: 0400
        owner: root
        group: root
      when: ansible_hostname != groups['kube_master'][0]

    - name: Start K3s service bootstrap /1
      systemd:
        name: k3s-bootstrap
        daemon_reload: yes
        enabled: no
        state: started
      delay: 3
      register: result
      retries: 3
      until: result is not failed
      when: ansible_hostname == groups['kube_master'][0]

    - name: Wait for service to start
      pause:
        seconds: 5
      run_once: yes

    - name: Start K3s service bootstrap /2
      systemd:
        name: k3s-bootstrap
        daemon_reload: yes
        enabled: no
        state: started
      delay: 3
      register: result
      retries: 3
      until: result is not failed
      when: ansible_hostname != groups['kube_master'][0]

After that we will have a working 3-node cluster. Here is the expected output:

    NAME       STATUS   ROLES                       AGE     VERSION
    master01   Ready    control-plane,etcd,master   2d16h   v1.20.5+k3s1
    master02   Ready    control-plane,etcd,master   2d16h   v1.20.5+k3s1
    master03   Ready    control-plane,etcd,master   2d16h   v1.20.5+k3s1

Once the cluster is formed, the temporary bootstrap service is replaced by the permanent K3s server service:

    - name: Stop K3s service bootstrap
      systemd:
        name: k3s-bootstrap
        daemon_reload: no
        enabled: no
        state: stopped

    - name: Remove K3s service bootstrap
      file:
        path: /etc/systemd/system/k3s-bootstrap.service
        state: absent

    - name: Deploy K3s master service
      template:
        src: k3s-server.service.j2
        dest: /etc/systemd/system/k3s-server.service
        mode: 0400
        owner: root
        group: root

    - name: Enable and check K3s service
      systemd:
        name: k3s-server
        daemon_reload: yes
        enabled: yes
        state: started

High Availability Masters

The next step is to make the masters highly available, so that the APIs are always reachable. To do this we will use keepalived, setting up a VIP (Virtual IP) inside the internal network.

We will need to add a firewalld rich rule to the internal zone to allow VRRP traffic, which is the protocol keepalived uses to communicate with the other nodes and elect the VIP holder.

    - name: Install keepalived
      package:
        name: keepalived
        state: present

    - name: Add firewalld rich rules /vrrp
      firewalld:
        rich_rule: rule protocol value="vrrp" accept
        permanent: yes
        immediate: yes
        state: enabled

The complete task is available in: roles/k3s-deploy/tasks/cluster_keepalived.yml

    vrrp_instance VI_1 {
      state BACKUP
      interface {{ keepalived_interface }}
      virtual_router_id {{ keepalived_routerid | default('50') }}
      priority {{ keepalived_priority | default('50') }}
    ...

Joining the workers

Now it’s time for the workers to join! It’s as simple as launching the following command, which is what the task in roles/k3s-deploy/tasks/cluster_agent.yml does:

    K3S_TOKEN=SECRET k3s agent --server https://<Keepalived VIP>:6443

In Ansible:

    - name: Deploy K3s worker service
      template:
        src: k3s-agent.service.j2
        dest: /etc/systemd/system/k3s-agent.service
        mode: 0400
        owner: root
        group: root

    - name: Enable and check K3s service
      systemd:
        name: k3s-agent
        daemon_reload: yes
        enabled: yes
        state: restarted

The workers then appear in the node list:

    NAME       STATUS   ROLES                       AGE     VERSION
    master01   Ready    control-plane,etcd,master   2d16h   v1.20.5+k3s1
    master02   Ready    control-plane,etcd,master   2d16h   v1.20.5+k3s1
    master03   Ready    control-plane,etcd,master   2d16h   v1.20.5+k3s1
    worker01   Ready    <none>                      2d16h   v1.20.5+k3s1
    worker02   Ready    <none>                      2d16h   v1.20.5+k3s1
    worker03   Ready    <none>                      2d16h   v1.20.5+k3s1

Base service flags

The K3s services are started with SELinux support enabled:

    --selinux

and with the bundled Traefik and ServiceLB disabled:

    --disable traefik
    --disable servicelb

as we will be using ingress-nginx and MetalLB respectively.

We also set it up so that it uses the internal network:

    --advertise-address {{ ansible_host }} \
    --bind-address 0.0.0.0 \
    --node-ip {{ ansible_host }} \
    --cluster-cidr={{ cluster_cidr }} \
    --service-cidr={{ service_cidr }} \
    --tls-san {{ ansible_host }}

Ingress and LoadBalancer

The cluster is up and running, now we need a way to use it! We have disabled traefik and servicelb previously to accommodate ingress-nginx and MetalLB.

MetalLB will be configured in layer 2 mode with two pools of IPs:

    apiVersion: v1
    kind: ConfigMap
    metadata:
      namespace: metallb-system
      name: config
    data:
      config: |
        address-pools:
        - name: default
          protocol: layer2
          addresses:
          - {{ metallb_external_ip_range }}
        - name: metallb_internal_ip_range
          protocol: layer2
          addresses:
          - {{ metallb_internal_ip_range }}

So we will have space for two ingresses. The deployment files are included in the playbook; the important part is that we will have an internal and an external ingress. The internal ingress exposes services useful for the cluster or for monitoring, while the external one serves applications to the outside world.
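To make a Service pick its address from one pool rather than the other, MetalLB (in ConfigMap-based versions like the one configured here) looks at the `metallb.universe.tf/address-pool` annotation. The Service below is a sketch: its name, namespace, selector labels and named target ports are placeholders, and only the pool name comes from the configuration above.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: internal-ingress-nginx-controller    # hypothetical name
  namespace: internal-ingress-nginx          # hypothetical namespace
  annotations:
    # Ask MetalLB for an address from the internal pool
    metallb.universe.tf/address-pool: metallb_internal_ip_range
spec:
  type: LoadBalancer
  selector:
    app.kubernetes.io/name: internal-ingress-nginx   # hypothetical label
  ports:
    - name: http
      port: 80
      targetPort: http      # assumes the controller names its container ports
    - name: https
      port: 443
      targetPort: https
```

A Service without the annotation would be served from the `default` pool, which here holds the external range.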

We can then simply deploy ingresses for our services, selecting the controller with the kubernetes.io/ingress.class annotation.

For example, an internal ingress:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: dashboard-ingress
      namespace: kubernetes-dashboard
      annotations:
        kubernetes.io/ingress.class: "internal-ingress-nginx"
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
    spec:
      rules:
        - host: dashboard.192.168.122.200.nip.io
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: kubernetes-dashboard
                    port:
                      number: 443

And an external ingress:

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: my-ingress
      namespace: my-service
      annotations:
        kubernetes.io/ingress.class: "ingress-nginx"
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
    spec:
      rules:
        - host: my-service.192.168.1.200.nip.io
          http:
            paths:
              - path: /
                pathType: Prefix
                backend:
                  service:
                    name: my-service
                    port:
                      number: 443

Checkpoint

    Mem:       total   used    free    shared   buff/cache   available   CPU%
    master01   1.8Gi   944Mi   112Mi   20Mi     762Mi        852Mi       3.52%
    master02   1.8Gi   963Mi   106Mi   20Mi     748Mi        828Mi       3.45%
    master03   1.8Gi   936Mi   119Mi   20Mi     763Mi        880Mi       3.68%
    worker01   1.8Gi   821Mi   119Mi   11Mi     877Mi        874Mi       1.78%
    worker02   1.8Gi   832Mi   108Mi   11Mi     867Mi        884Mi       1.45%
    worker03   1.8Gi   821Mi   119Mi   11Mi     857Mi        894Mi       1.67%

Good! We now have a basic HA K3s cluster on our machines, and look at that resource usage! With about 1GB of RAM used per node, we have a working Kubernetes cluster.

But is it ready for production?

Not yet. We now need to secure the cluster and its services before continuing!

In the next blog we will analyse how this cluster is still vulnerable to some types of attack and what best practices and remediations we will adopt to prevent this.

Remember: all of the Ansible playbooks for deploying everything are available for you to check out on GitHub: https://github.com/digitalis-io/k3s-on-prem-production
