What is it?

When you use Kubernetes to deploy an application, say a Deployment, you are calling the underlying Kubernetes API, which hands your request over to an application that applies the config you described in the YAML configuration file.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  labels:
    app: nginx
spec:
  [...]

In this example, Deployment is part of the default K8s server, but there are many others you are probably using that are not and that you installed beforehand. For example, if you use an nginx ingress controller on your cluster, you are installing a controller that watches Ingress objects (kind: Ingress) and modifies the behaviour of nginx every time you configure a new web entry point.
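To make "calling the Kubernetes API" a little more concrete, here is a minimal sketch of how the apiVersion of a manifest maps to a REST path on the API server. The function name resourcePath is hypothetical, and the plural resource name has to be supplied by the caller (real clients look it up through API discovery); only the path layout itself is standard Kubernetes behaviour.

```go
package main

import (
	"fmt"
	"strings"
)

// resourcePath (hypothetical helper) builds the REST path for a namespaced
// resource. apiVersion is the value from the manifest: "<group>/<version>"
// for named groups such as "apps/v1", or just "v1" for the core group.
// plural is the lower-case plural resource name, e.g. "deployments".
func resourcePath(apiVersion, namespace, plural string) string {
	prefix := "/apis/" + apiVersion
	if !strings.Contains(apiVersion, "/") {
		// The core group (Pods, Services, ...) lives under /api instead of /apis.
		prefix = "/api/" + apiVersion
	}
	return fmt.Sprintf("%s/namespaces/%s/%s", prefix, namespace, plural)
}

func main() {
	fmt.Println(resourcePath("apps/v1", "default", "deployments"))
	fmt.Println(resourcePath("v1", "default", "pods"))
}
```

A resource added through an extension follows the same layout, just with its own group and version in the path.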

The role of a controller is to track a resource type and drive each object towards its desired state. For example, for the built-in Pod kind, a control loop keeps checking the Pod and starts the containers configured in it until it reaches the Running state. A controller usually accomplishes its task by calling an API server.

We can find three important parts of any controller:

- The application itself: a Docker container running inside your Kubernetes cluster that loops continuously, checking and enforcing the end state of the resources you are deploying.

- A Custom Resource Definition (CRD), which describes the YAML/JSON config file required to invoke this controller.

- Usually, an API server doing the work in the background.
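The looping behaviour described above can be sketched in a few lines of plain Go. This is only a toy model under my own assumptions: the state type, the reconcile function and the resource names are all hypothetical, and a real controller reacts to events from the API server rather than comparing two in-memory maps.

```go
package main

import "fmt"

// state maps a resource name to its replica count (hypothetical model).
type state map[string]int

// reconcile compares desired with actual, applies one round of corrections
// to actual, and reports whether anything had to change.
func reconcile(desired, actual state) bool {
	changed := false
	for name, want := range desired {
		if got, ok := actual[name]; !ok || got != want {
			actual[name] = want // create or update the resource
			changed = true
		}
	}
	for name := range actual {
		if _, ok := desired[name]; !ok {
			delete(actual, name) // remove what is no longer wanted
			changed = true
		}
	}
	return changed
}

func main() {
	desired := state{"nginx": 3}
	actual := state{"nginx": 1, "old-app": 2}

	// Keep looping until a pass finds nothing left to fix.
	for reconcile(desired, actual) {
		fmt.Println("drift corrected:", actual)
	}
	fmt.Println("converged:", actual)
}
```

The important property is that reconcile is safe to call repeatedly: once the actual state matches the desired one, another pass changes nothing.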

The good, the bad and the ugly

The good

Kubernetes Operators offer a way to extend the functionality of Kubernetes beyond its basics. This is especially interesting for complex applications which require intrinsic knowledge of the functionality of the application to be installed. We saw a good example earlier with the Ingress controller. Others are databases and stateful applications.

It can also reduce the complexity and length of the configuration. If you look, for example, at the Postgres operator by Zalando, you can see that with only a few lines you can spin up a fully featured cluster:

apiVersion: "acid.zalan.do/v1"
kind: postgresql
metadata:
  name: acid-minimal-cluster
  namespace: default
spec:
  teamId: "acid"
  volume:
    size: 1Gi
  numberOfInstances: 2
  users:
    zalando: # database owner
    - superuser
    - createdb
    foo_user: [] # role for application foo
  databases:
    foo: zalando # dbname: owner
  preparedDatabases:
    bar: {}
  postgresql:
    version: "13"

The bad

The ugly

The worst thing in my opinion is that it can lead to abuse and overuse.

You should only use an operator if the functionality cannot be provided by Kubernetes. K8s operators are not a way of packaging applications; they are extensions to Kubernetes. I often see community K8s Operator projects that I would happily replace with a Helm chart, which in most cases is a much better option.

Hacking it

apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  # name must match the spec fields below, and be in the form: <plural>.<group>
  name: crontabs.stable.example.com
spec:
  # group name to use for REST API: /apis/<group>/<version>
  group: stable.example.com
  # list of versions supported by this CustomResourceDefinition
  versions:
    - name: v1
      # Each version can be enabled/disabled by Served flag.
      served: true
      # One and only one version must be marked as the storage version.
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                cronSpec:
                  type: string
                image:
                  type: string
                replicas:
                  type: integer
  # either Namespaced or Cluster
  scope: Namespaced
  names:
    # plural name to be used in the URL: /apis/<group>/<version>/<plural>
    plural: crontabs
    # singular name to be used as an alias on the CLI and for display
    singular: crontab
    # kind is normally the CamelCased singular type. Your resource manifests use this.
    kind: CronTab
    # shortNames allow shorter string to match your resource on the CLI
    shortNames:
    - ct

The good news is you may never have to write a CRD like this by hand. Enter kubebuilder. Kubebuilder is a framework for building Kubernetes APIs. I guess it is not dissimilar to Ruby on Rails, Django or Spring.

I took it out for a test and created my first API and controller 🎉

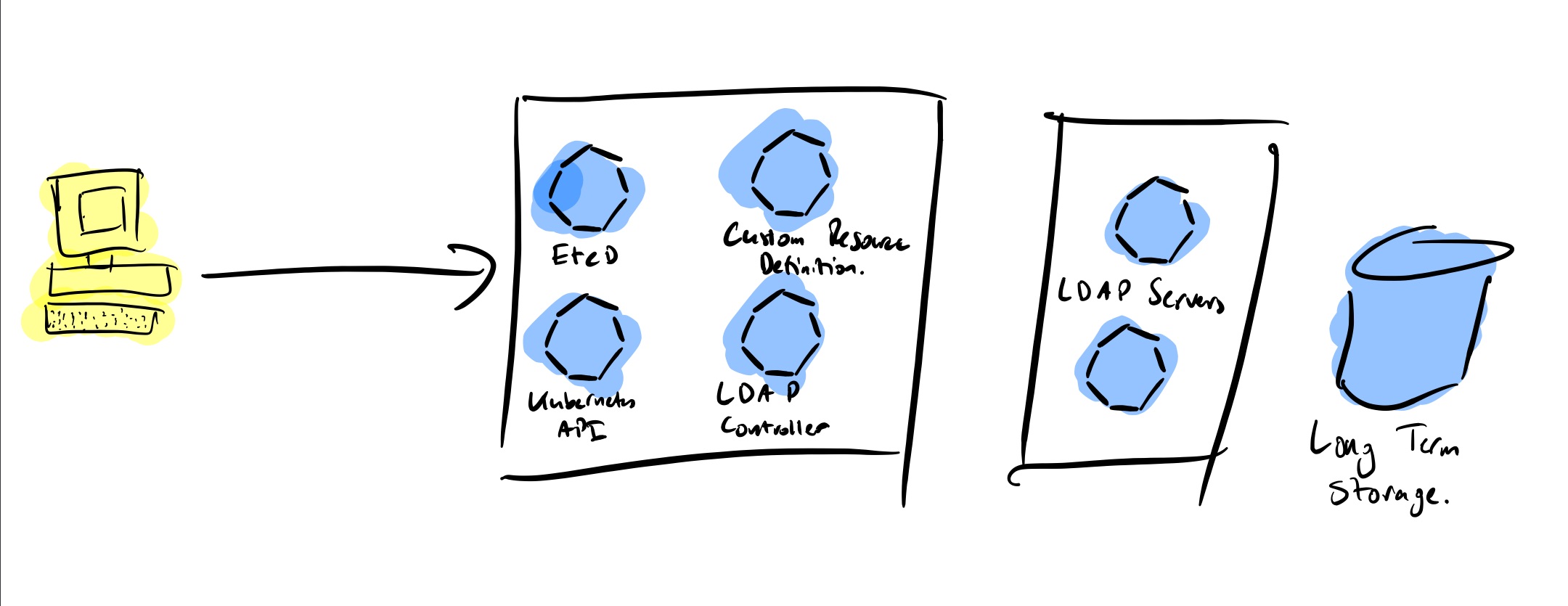

I have Kubernetes running on my laptop with minikube. There I installed OpenLDAP and I got to work to see if I could manage the LDAP users and groups from Kubernetes.

Start project

For my project I need to create two APIs, one for managing users and another for groups. Let’s initialise it and create the APIs:

kubebuilder init --domain digitalis.io --license apache2 --owner "Digitalis.IO"
kubebuilder create api --group ldap --version v1 --kind LdapUser
kubebuilder create api --group ldap --version v1 --kind LdapGroup

These commands create everything I need to get started. Have a good look at the directory tree, from which I would highlight these three folders:

api: it contains a sub directory for each of the api versions you are writing code for. In our example you should only see v1

config: all the yaml files required to set up the controller when installing in Kubernetes, chief among them the CRD.

controller: the main part where you write the code to Reconcile

The next part is to define the API. With kubebuilder, rather than editing the CRDs manually, you just add your Go type definitions and kubebuilder generates the CRDs for you.

If you look into the api/v1 directory you’ll find the resource type definitions for users and groups:

type LdapUserSpec struct {
	Username string `json:"username"`
	UID      string `json:"uid"`
	GID      string `json:"gid"`
	Password string `json:"password"`
	Homedir  string `json:"homedir,omitempty"`
	Shell    string `json:"shell,omitempty"`
}

For example, I have defined my users with this struct and the groups with:

type LdapGroupSpec struct {
	Name    string   `json:"name"`
	GID     string   `json:"gid"`
	Members []string `json:"members,omitempty"`
}

Once you have your resources defined, just run make install and it will generate and install the CRD into your Kubernetes cluster.
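To see what the json tags on these structs do, here is a small standalone sketch that decodes the JSON form of a spec into the LdapUserSpec struct using only the standard library. The decodeSpec helper is my own hypothetical addition for illustration; in a real controller this decoding is done for you by the Kubernetes client libraries.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// LdapUserSpec mirrors the struct shown above.
type LdapUserSpec struct {
	Username string `json:"username"`
	UID      string `json:"uid"`
	GID      string `json:"gid"`
	Password string `json:"password"`
	Homedir  string `json:"homedir,omitempty"`
	Shell    string `json:"shell,omitempty"`
}

// decodeSpec (hypothetical helper) parses the JSON form of a spec.
func decodeSpec(raw string) (LdapUserSpec, error) {
	var spec LdapUserSpec
	err := json.Unmarshal([]byte(raw), &spec)
	return spec, err
}

func main() {
	spec, err := decodeSpec(`{"username":"user01","uid":"1000","gid":"1000","password":"myPassword!"}`)
	if err != nil {
		panic(err)
	}
	fmt.Println(spec.Username, spec.UID)

	// Fields tagged omitempty (homedir, shell) are left out when empty.
	out, err := json.Marshal(spec)
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))

	// uid is declared as a string, so an unquoted number is rejected.
	_, err = decodeSpec(`{"username":"user02","uid":1000}`)
	fmt.Println("unquoted uid rejected:", err != nil)
}
```

One practical consequence of declaring UID and GID as strings is that the values must be quoted in a manifest (uid: "1000"), otherwise YAML treats them as integers and they no longer match the type.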

The truth is kubebuilder does an excellent job. After defining my API I just needed to update the Reconcile functions with my code and voilà. This function is called every time an object (a user or group in our case) is added, removed or updated. I’m not ashamed to say it probably took me three times longer to write the code that talks to the LDAP server.

func (r *LdapGroupReconciler) Reconcile(req ctrl.Request) (ctrl.Result, error) {
	ctx := context.Background()
	log := r.Log.WithValues("ldapgroup", req.NamespacedName)

	[...]
}

My only complication was with deleting. In my first version the controller was crashing because it could not find the object to delete, and without that I could not delete the user/group from LDAP. I found the answer in finalizers.

A finalizer is added to a resource and it acts like a pre-delete hook. This way the code captures that the user has requested the user/group to be deleted and it can then do the deed and reply back saying all good, move along. Below is the relevant code adapted from the kubebuilder book with extra comments:

//! [finalizer]
ldapuserFinalizerName := "ldap.digitalis.io/finalizer"

// Am I being deleted?
if ldapuser.ObjectMeta.DeletionTimestamp.IsZero() {
	// No: check if I have the `finalizer` installed and install otherwise
	if !containsString(ldapuser.GetFinalizers(), ldapuserFinalizerName) {
		ldapuser.SetFinalizers(append(ldapuser.GetFinalizers(), ldapuserFinalizerName))
		if err := r.Update(context.Background(), &ldapuser); err != nil {
			return ctrl.Result{}, err
		}
	}
} else {
	// The object is being deleted
	if containsString(ldapuser.GetFinalizers(), ldapuserFinalizerName) {
		// our finalizer is present, let's delete the user
		if err := ld.LdapDeleteUser(ldapuser.Spec); err != nil {
			log.Error(err, "Error deleting from LDAP")
			return ctrl.Result{}, err
		}

		// remove our finalizer from the list and update it.
		ldapuser.SetFinalizers(removeString(ldapuser.GetFinalizers(), ldapuserFinalizerName))
		if err := r.Update(context.Background(), &ldapuser); err != nil {
			return ctrl.Result{}, err
		}
	}

	// Stop reconciliation as the item is being deleted
	return ctrl.Result{}, nil
}
//! [finalizer]

Test Code

I created some test code. It’s very messy, remember this is just a learning exercise and it’ll break apart if you try to use it. There are also lots of duplications in the LDAP functions but it serves a purpose.

You can find it here: https://github.com/digitalis-io/ldap-accounts-controller

This controller talks to an LDAP server to create users and groups as defined in my CRDs. As you can see below, I now have two Kinds defined, one for LDAP users and one for LDAP groups. Because the CRDs register them with Kubernetes, objects of these kinds are handed to our controller.

apiVersion: ldap.digitalis.io/v1
kind: LdapUser
metadata:
  name: user01
spec:
  username: user01
  password: myPassword!
  gid: "1000"
  uid: "1000"
  homedir: /home/user01
  shell: /bin/bash

apiVersion: ldap.digitalis.io/v1
kind: LdapGroup
metadata:
  name: devops
spec:
  name: devops
  gid: "1000"
  members:
    - user01
    - "90000"

LDAP_BASE_DN="dc=digitalis,dc=io"
LDAP_BIND="cn=admin,dc=digitalis,dc=io"
LDAP_PASSWORD=xxxx
LDAP_HOSTNAME=ldap_server_ip_or_host
LDAP_PORT=389
LDAP_TLS="false"

make install run

Demo
