Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

The post Digitalis becomes a SUSE Gold Partner specialising in Rancher and Kubernetes appeared first on digitalis.io.

]]>Digitalis is happy to announce we are now a SUSE Gold Partner providing services on the SUSE Rancher Kubernetes products. If you want to use Kubernetes in the cloud, on-premises or hybrid – Digitalis is here to help.

We can be your partner in leveraging the SUSE Rancher Kubernetes product capabilities. We pride ourselves in excelling in building, deploying and scaling modern applications in Kubernetes and the Cloud, to support your company in embracing innovations, improving speed and agility while retaining autonomy, good governance and mitigating cloud vendor lock in.

The SUSE Rancher products provide a comprehensive suite of tools and products to deploy, manage and secure your Kubernetes deployments across all CNCF certified Kubernetes deployments – from core to cloud to edge.

This partnership builds upon our extensive experience in Kubernetes, cloud native and distributed systems, data and development.

If you would like to know more about how to implement modern data and cloud native technologies, such as Kubernetes, in your business, we at Digitalis do it all: from Kubernetes and Cloud migration to fully managed services – we can help you modernize your operations, data, and applications. We provide consulting and managed services on Kubernetes, Cloud, Data, and DevOps. Contact us today for more information or learn more about each of our services here.

Related Articles

K3s – lightweight kubernetes made ready for production – Part 2

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

K3s – lightweight kubernetes made ready for production – Part 1

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

The post Digitalis becomes a SUSE Gold Partner specialising in Rancher and Kubernetes appeared first on digitalis.io.

]]>The post Apache Kafka and Regulatory Compliance appeared first on digitalis.io.

]]>Digitalis has extensive experience in designing, building and maintaining data streaming systems across a wide variety of use cases. Often in financial services, government, healthcare and other highly regulated industries.

This blog is intended to aid readers’ understanding of how Apache Kafka as a technology can support enterprises in meeting regulatory standards and compliance when used as an event broker to Security Information and Event Management (SIEM) systems.

As businesses continue to grow in complexity and embrace more and more diverse, distributed, technologies; the risk of cyber-attacks grows. This brings its own challenges from both a technical and compliance perspective; to this end we need to understand how the adoption of new technologies impact cyber risk and how we can address these through the use of modern event streaming, aggregation, correlation, and forensic techniques.

Apart from the technical considerations of any event management or SIEM system, enterprises need to understand regional legislation, laws and other compliance requirements. Virtually every regulatory compliance regime or standard such as GDPR, ISO 27001, PCI DSS, HIPAA, FERPA, Sarbanes-Oxley (SOX), FISMA, and SOC 2 have some requirements of log management to preserve audit trails of activity that addresses the CIA (Confidentiality, Integrity, and Availability) triad.

Why Event Streaming?

We need to look beyond the traditional view of data and logging, in that things happen, and you process that event which produces data, you then take that data and put it in a log or database for use at some point in the future. However, this no longer meets the security needs of modern enterprises, who need to be able to react quickly to security events.

In reality all your data is event streamed, where events happen, be it sensor readings or a transaction requests against a business system. These events happen and you process them, where a user does something, or a device does something, typically these events fall firmly in the business domain. Log files reside in the operational domain and are another example of event streams, new events can be written to the end of a log file, aka an event queue, creating a list of chronological events.

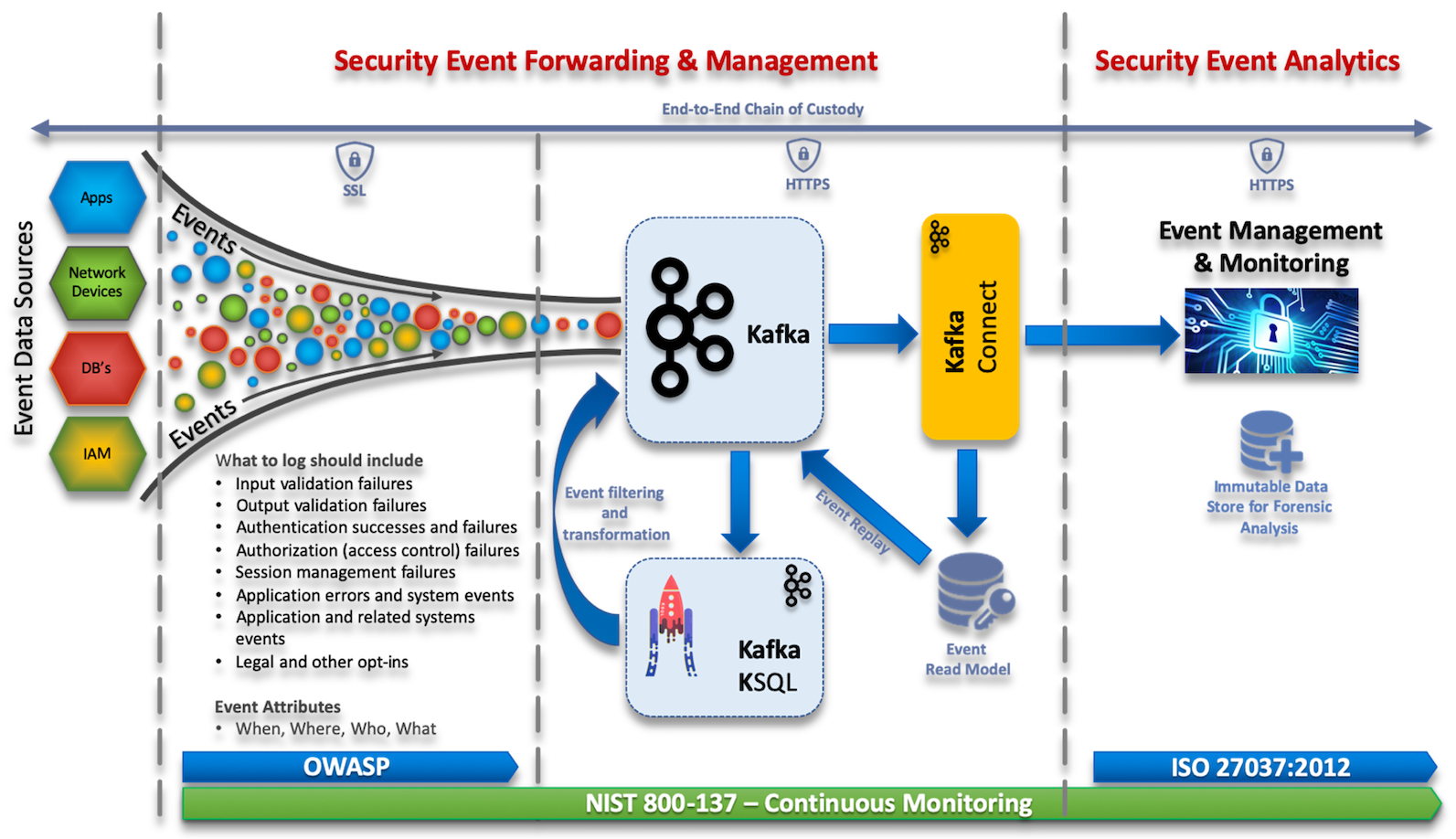

What becomes interesting is when we’re able to take events from the various enterprise systems, intersect this operational and business data and correlate events between them to provide real-time analytics. Using Apache Kafka, KSQL, and Kafka Connect, enterprises are now able to manipulate and route events in real-time to downstream analytics tools, such as SIEM systems, allowing organisations to make fast, informed decisions against complex security threats.

Apache Kafka is a massively scalable event streaming platform enabling back-end systems to share real-time data feeds (events) with each other through Kafka topics. Used alongside Kafka is KSQL, a streaming SQL engine, enabling real-time data processing against Apache Kafka. Kafka Connect is a framework to stream data into and out of Apache Kafka.

Standards & Guidance

- OWSAP Logging – Provides developers with guidance on building application logging mechanisms, especially related to security logging.

- ISO 27037:2012 – Provides guidelines for specific activities in the handling of digital evidence, which are identification, collection, acquisition and preservation of potential digital evidence that can be of evidential value.

- NIST 800-137 – Provides details for Information Security Continuous Monitoring.

Event Management & Compliance

The ability to secure event data end-to-end, from the time it leaves a client to the time it’s streamed into your event management tool is critical in guaranteeing the confidentiality, integrity and availability of this data. Kafka can help meet this by protecting data-in-motion, data-at-rest and data-in-use, through the use of three security components, encryption, authentication, and authorisation.

From a Kafka perspective this could be achieved though encryption of data-in-transit between your applications and Kafka brokers, this ensures your applications always uses encryption when reading and writing data to and from Kafka.

From a client authentication perspective, you can define that only specific applications are allowed to connect to your Kafka cluster. Authorisation usually exists under the context of authentication, where you can define that only specific applications are allowed to read from a Kafka topic. You can also restrict write access to Kafka topics to prevent data pollution or fraudulent activities.

For example, to secure client/broker communications, we would:

- Encrypt data-in transit (network traffic) via SSL/TLS

- Authentication via SASL, TLS or Kerberos

- Authorization via access control lists (ACLs) to topics

Securing data-at-rest, Kafka supports cluster encryption and authentication, including a mix of authenticated and unauthenticated, and encrypted and non-encrypted clients.

In order to be able to perform effective investigations and audits, and if needed take legal action, we need to do two things. Firstly, prove the integrity of the data through a Chain of Custody and secondly ensure the event data is handled appropriately and contains enough data to be Compliant with Regulations.

Chain of Custody

It’s critical to maintain the integrity and protection of digital evidence from the time it was created to the time it’s used in a court of law. This can be achieved through the technical controls mentioned above and operational handling processes of the data. Any break in the chain of custody or if the integrity of the data is not preserved, including any time the evidence may have been in an unsecured location, may lead to evidence presented in court being challenged and ruled inadmissible.

ISO 27037:2012 – provides guidelines for specific activities in the handling of digital evidence, which are identification, collection, acquisition and preservation of potential digital evidence that can be of evidential value.

What do we mean when we say Compliant?

This is not an easy question to answer and is dependant on the market sector and geographic location of your organisation (or in some instances where your data is hosted), as this will determine the compliance regimes that need to be followed for IT compliance. There are some commonalities between the regulative bodies for IT compliance, as a minimum you would at least have to:

- Record the time the event occurred and what has happened

- Define the scope of the information to be captured (OWASP provides guidance for logging security related events)

- Log all relevant events

- Have a documented process for handling events, breaches and threats

- Document where event data and associated records are stored

- Have a policy defining the management of event data and associated records throughout its life cycle: from creation and initial storage to the time when it becomes obsolete and is deleted

- Document what is classified as an event or incident. ITIL defines an incident as “an unplanned interruption to or quality reduction of an IT service” and an event is a “change of state that has significance for the management of an IT service or other configuration item (CI)”

- Define which events are considered a threat

Remember compliance is more about people & process than purely technical controls.

IT Compliance

The focus of IT compliance is ensuring due diligence is practiced by organisations for securing its digital assets. This is usually centred around the requirements defined by a third party, such as government, standards, frameworks, and laws. Depending on the country in which your organisation is based there are several regulation acts that require compliance reports:

ISO 27001

(International Standard)

ISO 27001 is a specification for an information security management system (ISMS) and is based on a “Plan-Do-Check-Act” four-stage process for the information security controls. An ISMS is a framework of policies and procedures that includes all legal, physical and technical controls involved in an organisation’s information risk management processes.

This framework clearly states that organisations must ensure they develop best practices in log management of their security operations, ensuring they are kept in sufficient detail to meet audit and compliance requirements.

Essentially, organisations must demonstrate their processes for confidentiality, integrity, and availability when it comes to information assets.

GDPR

(European Union Legal Framework)

The General Data Protection Regulation (GDPR) is a legal framework that sets guidelines for the collection and processing of personal information from individuals who live in the European Union (EU).

GDPR explains the general data protection regime that applies to most UK businesses and organisations. It covers the General Data Protection Regulation as it applies in the UK, tailored by the Data Protection Act 2018.

Any system would need to demonstrate demonstrable compliance with GDPR Article 25 (Data protection by design and by default) and article 32 (Security of processing).

PCI DSS

(Worldwide Payment Card Industry Data Security Standard)

The Payment Card Industry Data Security Standard (PCI DSS) consists of a set of security standards designed to ensure that ALL organisations that accept, process, store or transmit credit card information maintain a secure environment.

To become compliant, small to medium size organisations should:

- Complete the appropriate self-assessment Questionnaire (SAQ).

- Complete and obtain evidence of a passing vulnerability scan with a PCI SSC Approved Scanning Vendor (ASV). Note scanning does not apply to all merchants. It is required for SAQ A-EP, SAQ B-IP, SAQ C, SAQ D-Merchant and SAQ D-Service Provider.

- Complete the relevant Attestation of compliance in its entirety.

- Submit the SAQ, evidence of a passing scan (if applicable), and the Attestation of compliance, along with any other requested documentation.

HIPAA

(US legislation for data privacy and security of medical information)

The Health Insurance Portability and Accountability Act (HIPAA), is a US law designed to provide privacy standards to protect patients’ medical records and other personal information. There are two rules within the ACT that have an impact on log management and processing, the Security Rule and the Privacy Rule.

The HIPAA Security Rule establishes national standards to protect individuals’ electronic personal health information that is created, received, used, or maintained by a covered entity. The Security Rule requires appropriate administrative, physical and technical safeguards to ensure the confidentiality, integrity, and security of electronic protected health information.

The HIPAA Privacy Rule establishes national standards to protect individuals’ medical records. The Rule requires appropriate safeguards to protect the privacy of personal health information and sets limits and conditions on the uses and disclosures that may be made of such information without patient authorisation.

According to the act, entities covered by it must:

- Ensure the confidentiality, integrity, and availability of all e-PHI they create, receive, maintain or transmit

- Identify and protect against reasonably anticipated threats to the security or integrity of the information

- Protect against reasonably anticipated, impermissible uses or disclosures

- Ensure compliance by their workforce

FISMA

(US framework for protecting information)

The Federal Information Security Management Act (FISMA) is United States legislation that defines a comprehensive framework to protect government information, operations and assets against natural or man-made threats.

FISMA states that “any federal agency document and implement controls of information technology systems which are in support to their assets and operations.” The National Institute of Standards and Technology (NIST) has developed further the guidance to support FISMA, “NIST SP 800-92 Guide to Computer Security Log Management”.

- Organisations should establish policies and procedures for log management

- Organisations should prioritize log management appropriately throughout the organisation

- Organisations should create and maintain a log management infrastructure.

- Organisations should provide proper support for all staff with log management responsibilities.

- Organisations should establish standard log management operational processes.

FERPA

(US federal law protecting the privacy of student education records)

FERPA (Family Educational Rights and Privacy Act of 1974) is federal legislation in the United States that protects the privacy of students’ personally identifiable information (PII), educational information and directory information. As far as IT compliance is concerned, there are several activities your organisations can implement to support compliance:

- Encryption will help secure your data on a physical level

- Find and Eliminate Vulnerabilities. Perform vulnerability scans on your systems and databases

- Use Compliance-Monitoring Mechanisms

- Ensure that you have a data breach policy set in place

- Ensure that you have well-developed policies and procedures, such as an information security plan

Implementation of a SIEM and log management tools can support organisation in achieving FERPA compliance.

SOC 2 Compliance

There are three types of SOC reports, but SOC 2 focuses explicitly on the security protecting financial transactions. SOC 2 compliance requires organisations to submit a written overview of how their system works and the measures in place to protect it. External auditors assess the extent to which an organisation complies with one or more of the five trust principles based on the systems and processes in place.

- Security

- Availability

- Processing Integrity

- Confidentiality

- Privacy

References: Regulation acts and standards

- GDPR

https://eugdpr.eu/ - SOC 2

https://www.aicpa.org/content/dam/aicpa/interestareas/frc/assuranceadvisoryservices/downloadabledocuments/trust-services-criteria.pdf - Sarbanes Oxley

http://www.sarbanes-oxley-101.com/ - FISMA

https://www.dhs.gov/sites/default/files/publications/FY%202018%20CIO%20FISMA%20Metrics_V2.0.1_final_0.pdf - PCI DSS

https://www.pcisecuritystandards.org/document_library - HIPPA

https://www.hhs.gov/hipaa/for-professionals/security/laws-regulations/index.html - FERPA

https://www2.ed.gov/policy/gen/guid/fpco/ferpa/index.html - ISO 27001

https://www.iso.org/isoiec-27001-information-security.html

Related Articles

Getting started with Kafka Cassandra Connector

If you want to understand how to easily ingest data from Kafka topics into Cassandra than this blog can show you how with the DataStax Kafka Connector.

K3s – lightweight kubernetes made ready for production – Part 3

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

K3s – lightweight kubernetes made ready for production – Part 2

Do you want to know securely deploy k3s kubernetes for production? Have a read of this blog and accompanying Ansible project for you to run.

The post Apache Kafka and Regulatory Compliance appeared first on digitalis.io.

]]>The post Distributed Data Summit 2018 appeared first on digitalis.io.

]]>We are supporting and sponsoring the Distributed Data Summit for Apache Cassandra this year.

If you are attending this summit do come and chat to us!

Related Articles

Cassandra with AxonOps on Kubernetes

How to deploy Apache Cassandra on Kubernetes with AxonOps management tool. AxonOps provides the GUI management for the Cassandra cluster.

AxonOps Beta Released

We are excited to announce that AxonOps beta is now available for you to download and install!

AxonOps

AxonOps is a platform we have created which consists of 4 key components – javaagent, native agent, server, and GUI making it extremely simple to deploy in any Linux infrastructure

The post Distributed Data Summit 2018 appeared first on digitalis.io.

]]>