
The post Deploying PostgreSQL for High Availability with Patroni, etcd and HAProxy – Part 2 appeared first on digitalis.io.

In the first part of this blog we configured an etcd cluster on top of three CentOS 7 servers. We had to tweak the operating system configuration in order to have everything running smoothly. In this post we’ll see how to configure Patroni using the running etcd cluster as a distributed configuration store (DCS) and HAProxy to route connections to the active leader.

Patroni Configuration

The patroni configuration is a YAML file divided into sections, with each section controlling a specific part of patroni’s behaviour. We’ll save the patroni configuration file as /etc/patroni/patroni.yml.

Let’s have a look at the configuration file in detail.

Namespace, Scope and name

The scope, namespace and name keys in the yml file control the node’s cluster membership: namespace is the path within the DCS where the cluster is created, scope is the name of the cluster, and name is the identifier of the node.

This comes in quite handy if we have a dedicated DCS cluster with multiple patroni clusters configured. We can define either a namespace for each cluster or store multiple clusters within the same namespace.

Scope and namespace are the same across the three nodes, while the name value must be unique within the cluster.
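Because a duplicated name is an easy copy-and-paste mistake, it can be worth checking the planned node names before writing the configuration files. The snippet below is only a sketch: duplicate_names is a hypothetical helper written for this post, not something shipped with patroni.

```shell
# Print any node name that appears more than once in the argument list.
# (Hypothetical helper for illustration; not part of patroni.)
duplicate_names() {
  printf '%s\n' "$@" | sort | uniq -d
}

duplicate_names patroni01 patroni02 patroni03   # prints nothing: all names are unique
duplicate_names patroni01 patroni02 patroni02   # prints: patroni02
```

An empty result means every node will register under its own key in the DCS.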

In our example we’ll have the following settings:

    # patroni01
    scope: region_one
    namespace: /patroni_test/
    name: patroni01

    # patroni02
    scope: region_one
    namespace: /patroni_test/
    name: patroni02

    # patroni03
    scope: region_one
    namespace: /patroni_test/
    name: patroni03

restapi

The restapi dictionary defines the configuration for the REST API used by patroni. The key listen defines the address and the port where the REST API service listens. Similarly, the key connect_address defines the address and port used by patroni for querying the REST API.

The restapi can be secured by defining the path to the certificate file and key using the certfile and keyfile configuration options. It’s also possible to configure authentication for the restapi using the authentication configuration option within restapi config.

In a production setting it is recommended to enable the security options above. However, in our example the restapi is configured in a simple fashion, with no security enabled, as below.

    # patroni01
    restapi:
      listen: 192.168.56.40:8008
      connect_address: 192.168.56.40:8008

    # patroni02
    restapi:
      listen: 192.168.56.41:8008
      connect_address: 192.168.56.41:8008

    # patroni03
    restapi:
      listen: 192.168.56.42:8008
      connect_address: 192.168.56.42:8008

Obviously, the IP address is machine specific.

etcd

The etcd: configuration value is used to define the connection to the DCS if etcd is used. In our example we store all the participating hosts in the key hosts as a comma separated string.

The configuration in our example is the same on all of the patroni nodes and is the following:

    etcd:
      hosts: 192.168.56.40:2379,192.168.56.41:2379,192.168.56.42:2379

bootstrap

The bootstrap section is used during the bootstrap of the patroni cluster.

The contents of the dcs configuration are written into the DCS at the position /<namespace>/<scope>/config after the patroni cluster is initialized.

The data stored in the DCS is then used as the global configuration for all the members in the cluster and should be managed only via patronictl or REST API calls.

Parameters such as ttl and loop_wait are dynamic and are read from the DCS, so they apply globally. Other parameters, such as postgresql.listen and postgresql.data_dir, are local to the node and must be set in the configuration file instead.
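To make the location of the global configuration concrete, the sketch below composes the DCS key from the namespace and scope used in this post. The function name dcs_config_key is made up for illustration. The patronictl subcommands in the comments (show-config and edit-config) are the usual way to read and change the stored settings, and are shown rather than executed because they need a running cluster.

```shell
# Compose the DCS key under which the cluster-wide configuration is stored.
# Arguments: namespace (with or without surrounding slashes) and scope.
# (dcs_config_key is a hypothetical helper for illustration.)
dcs_config_key() {
  ns="${1#/}"      # strip one leading slash
  ns="${ns%/}"     # strip one trailing slash
  printf '/%s/%s/config\n' "$ns" "$2"
}

dcs_config_key /patroni_test/ region_one   # prints: /patroni_test/region_one/config

# On a running cluster (not executed here):
#   patronictl -c /etc/patroni/patroni.yml show-config
#   patronictl -c /etc/patroni/patroni.yml edit-config
```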

In our example we are setting up the bootstrap section in this way.

    bootstrap:
      dcs:
        ttl: 10
        loop_wait: 10
        retry_timeout: 10
        maximum_lag_on_failover: 1048576
        postgresql:
          use_pg_rewind: true
          parameters:
      initdb:
        - encoding: UTF8
        - data-checksums
      pg_hba:
        - host replication replicator 0.0.0.0/0 md5
        - host all all 0.0.0.0/0 md5
      users:

The dcs section defines the behaviour of the check against the DCS to manage the primary status and the eventual new leader election.

- ttl: defines the lifetime in seconds of the token held by the primary

- loop_wait: number of seconds the loop that checks the token sleeps between runs

- retry_timeout: timeout in seconds for DCS and PostgreSQL operation retries. Any DCS or network issue shorter than this value will not cause the demotion of the leader

- maximum_lag_on_failover: if the lag in bytes between the primary and the follower is larger than this value then the follower won’t participate in the new leader election

- postgresql: dictionary where it’s possible to define specific options such as the usage of pg_rewind or of replication slots, and the cluster parameters.
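As a worked example of the maximum_lag_on_failover rule, the sketch below compares a replica's lag with the 1048576 bytes (1 MiB) configured above. It only mirrors the rule as described in this post; can_participate is a hypothetical name and the lag figures are invented sample values, not measurements.

```shell
# Decide whether a replica may take part in a leader election, given its
# replication lag in bytes and the configured maximum_lag_on_failover.
# (Hypothetical helper; the real decision is taken inside patroni.)
can_participate() {
  lag_bytes="$1"
  max_lag_bytes="$2"
  if [ "$lag_bytes" -le "$max_lag_bytes" ]; then
    echo yes
  else
    echo no
  fi
}

max_lag=1048576                      # 1 MiB, as in the example configuration
can_participate 524288 "$max_lag"    # prints: yes (512 KiB behind)
can_participate 2097152 "$max_lag"   # prints: no  (2 MiB behind)
```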

We are also configuring the postgresql dictionary to initialize the cluster with certain parameters. The initdb list defines options to pass to initdb, during the bootstrap process (e.g. cluster encoding or the checksum usage).

The pg_hba list defines the entries in pg_hba.conf set after the cluster is initialized.

The users key defines additional users to create after initializing the new cluster. In our example it is empty.

postgresql

The postgresql section defines the node specific settings. Our configuration is the following.

    postgresql:
      listen: "*:6432"
      connect_address: patroni01:6432
      data_dir: /var/lib/pgsql/data/postgresql0
      bin_dir: /usr/pgsql-13/bin/
      pgpass: /tmp/pgpass0
      authentication:
        replication:
          username: replicator
          password: replicator
        superuser:
          username: postgres
          password: postgres
        rewind:
          username: rewind_user
          password: rewind
      parameters:

In particular, the key listen is used by patroni to set the postgresql.conf parameters listen_addresses and port.

The key connect_address defines the address and the port through which Postgres is accessible from other nodes and applications.

The key data_dir is used to tell patroni the path of the cluster’s data directory.

The key bin_dir is used to tell patroni where the PostgreSQL binaries are located.

The key pgpass specifies the filename of the password authentication file used by patroni to connect to the running PostgreSQL database.

The authentication dictionary is used to define the connection parameters for the replication user, the super user and the rewind user if we are using pg_rewind to remaster an old primary.
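The way a single listen value maps onto the two postgresql.conf parameters can be illustrated with plain shell parameter expansion. This is a sketch of the idea only, not patroni's own parsing code: both function names are invented for this post, and the split assumes the address part contains no IPv6 literal.

```shell
# Split a "listen" value of the form <addresses>:<port>.
# (Hypothetical helpers for illustration; IPv6 literals are not handled.)
listen_addresses() { printf '%s\n' "${1%:*}"; }   # everything before the last colon
listen_port()      { printf '%s\n' "${1##*:}"; }  # everything after the last colon

listen_addresses '*:6432'   # prints: *
listen_port '*:6432'        # prints: 6432
```

With the example configuration this corresponds to listen_addresses = '*' and port = 6432 in postgresql.conf.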

Service setup

In order to have patroni started automatically we need to set up a systemd unit file in /etc/systemd/system. We name our file patroni.service with the following contents.

    [Unit]
    Description=Runners to orchestrate a high-availability PostgreSQL
    After=syslog.target network.target

    [Service]
    Type=simple
    User=postgres
    Group=postgres
    WorkingDirectory=/var/lib/pgsql
    # Start the patroni process
    ExecStart=/bin/patroni /etc/patroni/patroni.yml
    # Send HUP to reload from patroni.yml
    ExecReload=/bin/kill -s HUP $MAINPID
    # only kill the patroni process, not its children, so it will gracefully stop postgres
    KillMode=process
    # Give a reasonable amount of time for the server to start up/shut down
    TimeoutSec=30
    # Do not restart the service if it crashes, we want to manually inspect database on failure
    Restart=no

    [Install]
    WantedBy=multi-user.target

After the service file creation we need to make systemd aware of the new service. Then we can enable the service and start it.

    sudo systemctl daemon-reload
    sudo systemctl enable patroni
    sudo systemctl start patroni

As soon as we start the patroni service we should see PostgreSQL bootstrap on the first node.

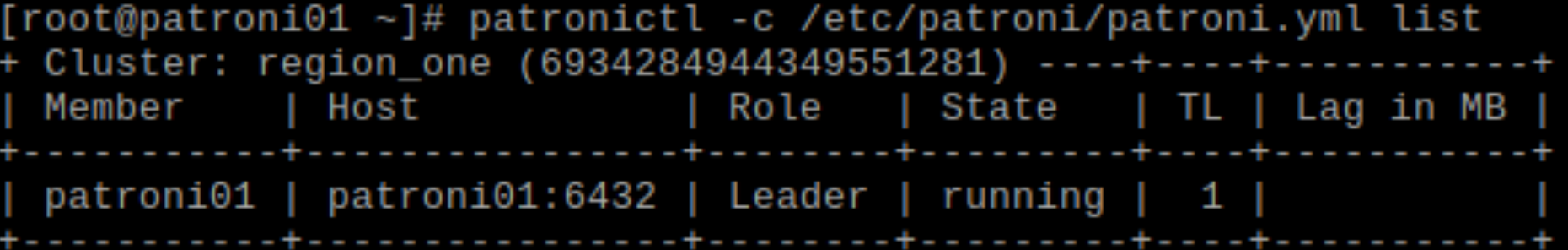

We can monitor the process via patronictl with the following command:

patronictl -c /etc/patroni/patroni.yml list

The output is something like this:

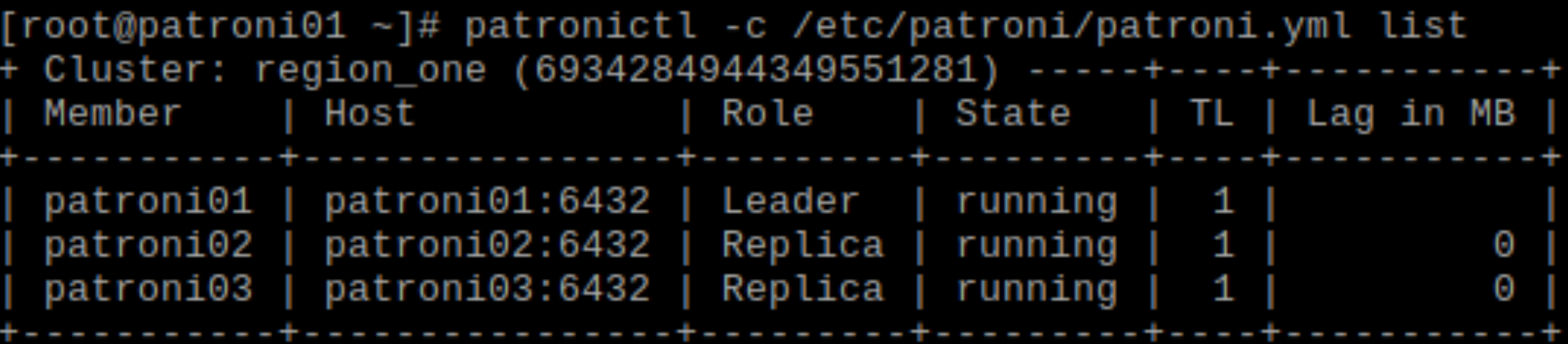

We can then start the patroni service on the other two nodes to make the followers join the cluster. By default patroni will build the new replicas by using pg_basebackup.

When all the nodes are up and running the patronictl command output will change in this way.

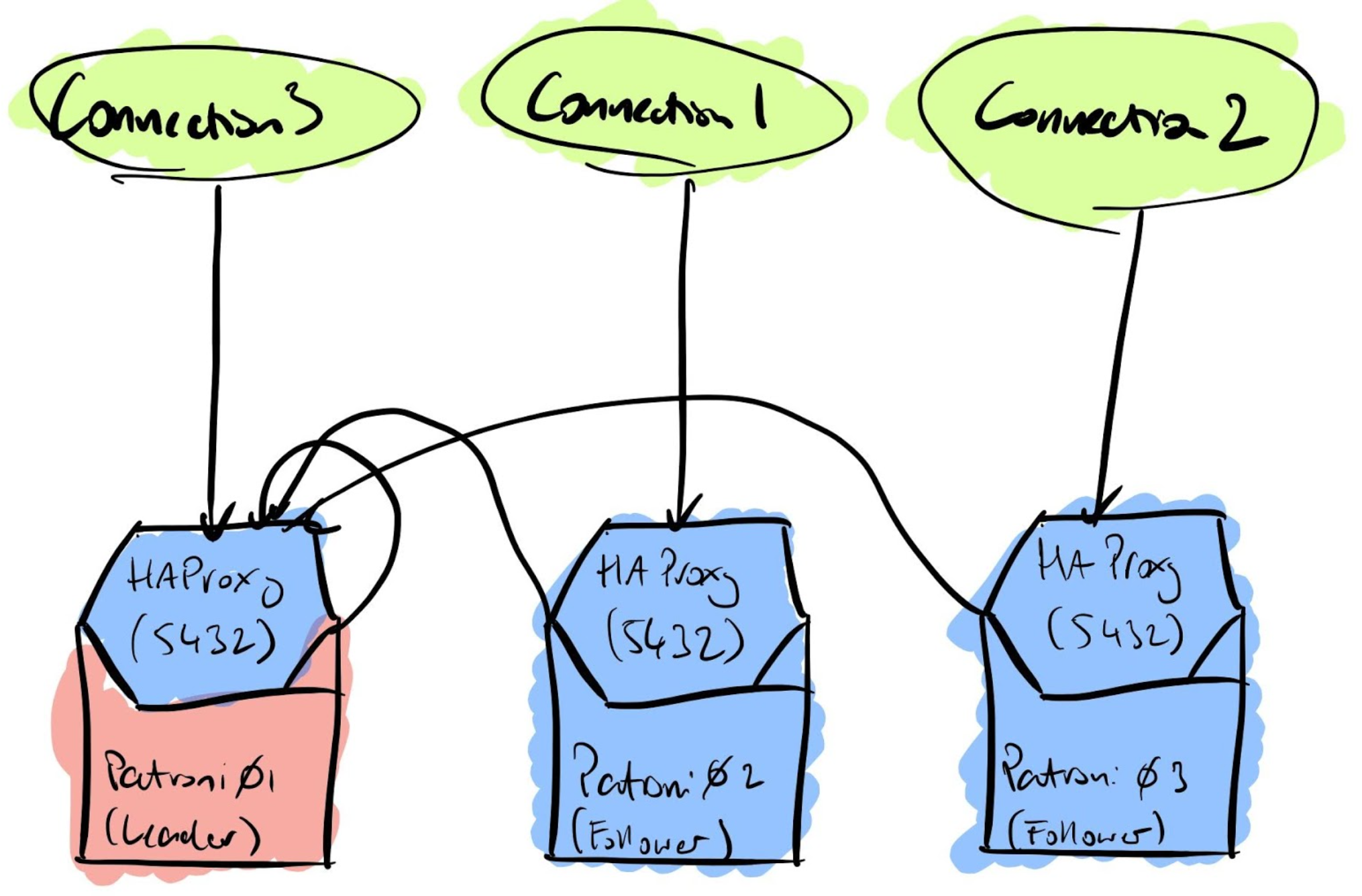

HAProxy connection router

In order to have connections routed to the active primary we need to configure the HAProxy service accordingly.

First we need HAProxy to listen for connections on the PostgreSQL standard port 5432. Then HAProxy should check the patroni REST API to determine which node is the primary.

This is done with the following configuration.

    global
        maxconn 100

    defaults
        log global
        mode tcp
        retries 2
        timeout client 30m
        timeout connect 4s
        timeout server 30m
        timeout check 5s

    listen stats
        mode http
        bind *:7000
        stats enable
        stats uri /

    listen region_one
        bind *:5432
        option httpchk
        http-check expect status 200
        default-server inter 3s fall 3 rise 2 on-marked-down shutdown-sessions
        server patroni01 192.168.56.40:6432 maxconn 80 check port 8008
        server patroni02 192.168.56.41:6432 maxconn 80 check port 8008
        server patroni03 192.168.56.42:6432 maxconn 80 check port 8008

This example configuration enables the HAProxy statistics on port 7000. The region_one section is named after the patroni scope for consistency and listens on port 5432. Each patroni server is listed as a server to be checked on port 8008, the REST API port, to determine whether the node is the current primary: only the leader answers the check with HTTP 200, so the other nodes are marked as down.

After configuring and starting HAProxy on each node we will be able to connect to any of the nodes and always end up on the primary. In case of failover the connection will drop, and at the next connection attempt we’ll connect to the new primary.
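The health check that HAProxy performs can be reproduced by hand, which helps when a node is unexpectedly marked as down. The sketch below assumes the example IP addresses and REST API port 8008, and relies on patroni answering the root path with HTTP 200 on the leader and 503 on a replica. The function names rest_status and primaries are invented for this post, and the three status lines piped in are canned sample data standing in for live nodes.

```shell
# Ask a node's patroni REST API for its status; prints the HTTP status code.
# (Hypothetical helper; defined here but only used in the commented example.)
rest_status() {
  curl -s -o /dev/null -w '%{http_code}' --max-time 2 "http://$1:8008/"
}

# Read "<name> <status>" lines and print the names HAProxy would mark as up.
primaries() {
  awk '$2 == 200 { print $1 }'
}

# Canned statuses standing in for three live nodes:
printf '%s\n' 'patroni01 503' 'patroni02 200' 'patroni03 503' | primaries   # prints: patroni02

# Against a live cluster (not executed here):
#   for ip in 192.168.56.40 192.168.56.41 192.168.56.42; do
#     echo "$ip $(rest_status "$ip")"
#   done | primaries
```

Exactly one node should be printed at any time; no output usually means an election is in progress or the REST API is unreachable.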

Wrap up

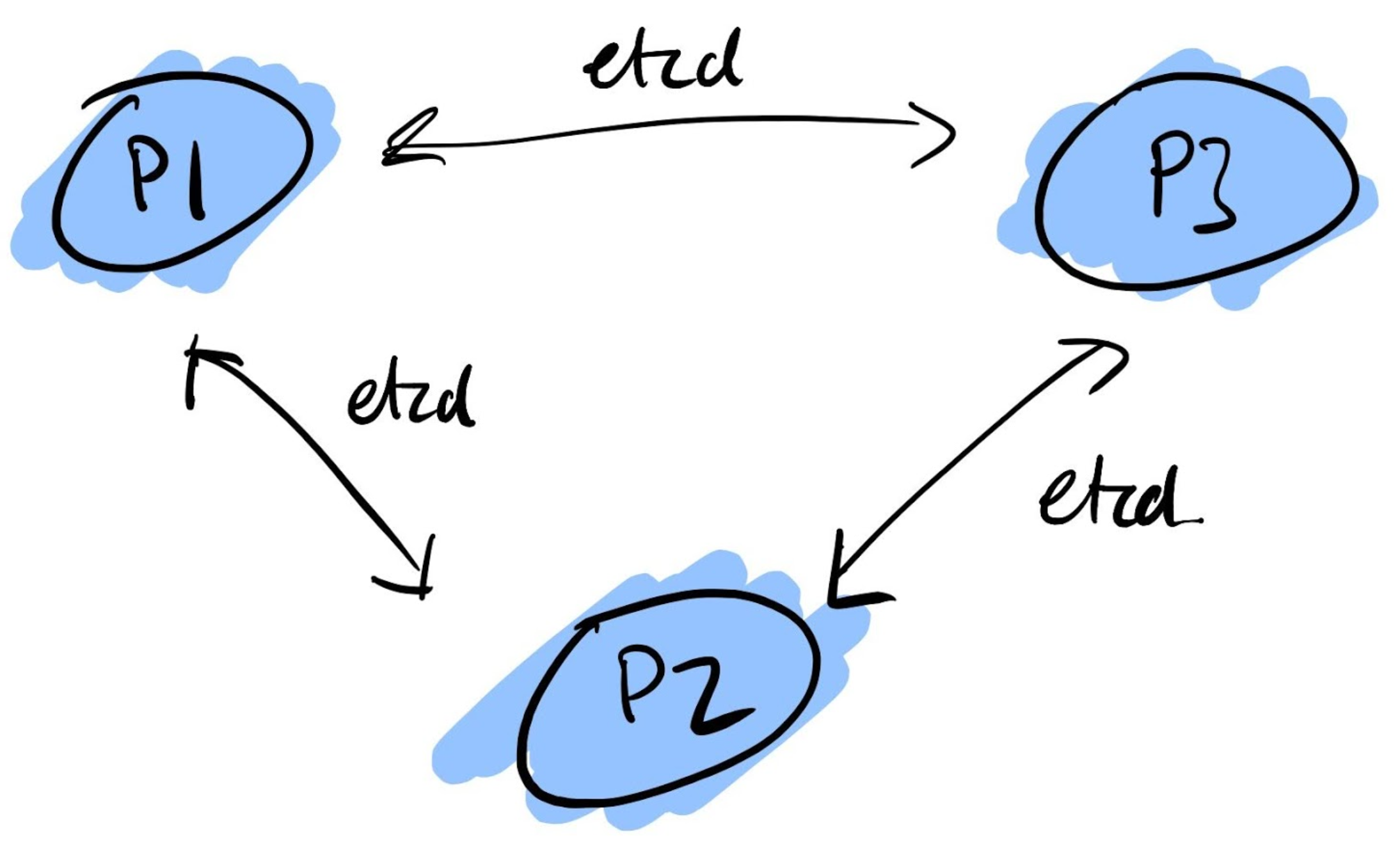

This simple example shows how to set up a three node Patroni cluster with no single point of failure (SPOF). To do this we have etcd configured in a cluster with a member installed on each database node. In a similar fashion we have HAProxy installed and running on each database node.

However, for production it is recommended to set up etcd on dedicated hosts and to configure SSL for etcd and the Patroni REST APIs, if the network is not trusted or to avoid accidents.

Additionally, for HAProxy in production it is strongly suggested to have a load balancer capable of checking if the HAProxy service is available before attempting a connection.

Having an up and running Patroni cluster requires a lot of configuration. Therefore it is strongly recommended to use a configuration management tool such as Ansible to deploy and configure your cluster.

If you would like to know more about how to implement modern data and cloud technologies, such as PostgreSQL, into your business, we at Digitalis do it all: from cloud migration to fully managed services, we can help you modernize your operations, data, and applications. We provide consulting and managed services on cloud, data, and DevOps for any business type. Contact us for more information.


The post Deploying PostgreSQL for High Availability with Patroni, etcd and HAProxy – Part 1 appeared first on digitalis.io.

In the first of a series of blogs on deploying PostgreSQL for High Availability (HA), we show how this can be done by leveraging technologies such as Patroni, etcd and HAProxy.

This blog gives an introduction to the relevant technologies and starts by showing how to deploy etcd as the distributed configuration store that Patroni will subsequently use for HA. Subsequent blogs will show how to configure Patroni, HAProxy and PostgreSQL.

The second part of this blog can be found here.

For demonstration purposes the examples show how to manually install and configure the relevant components. However it is highly recommended to follow standard DevOps practices and use a configuration management tool such as Ansible or any other tool to install and configure the various software packages and OS.


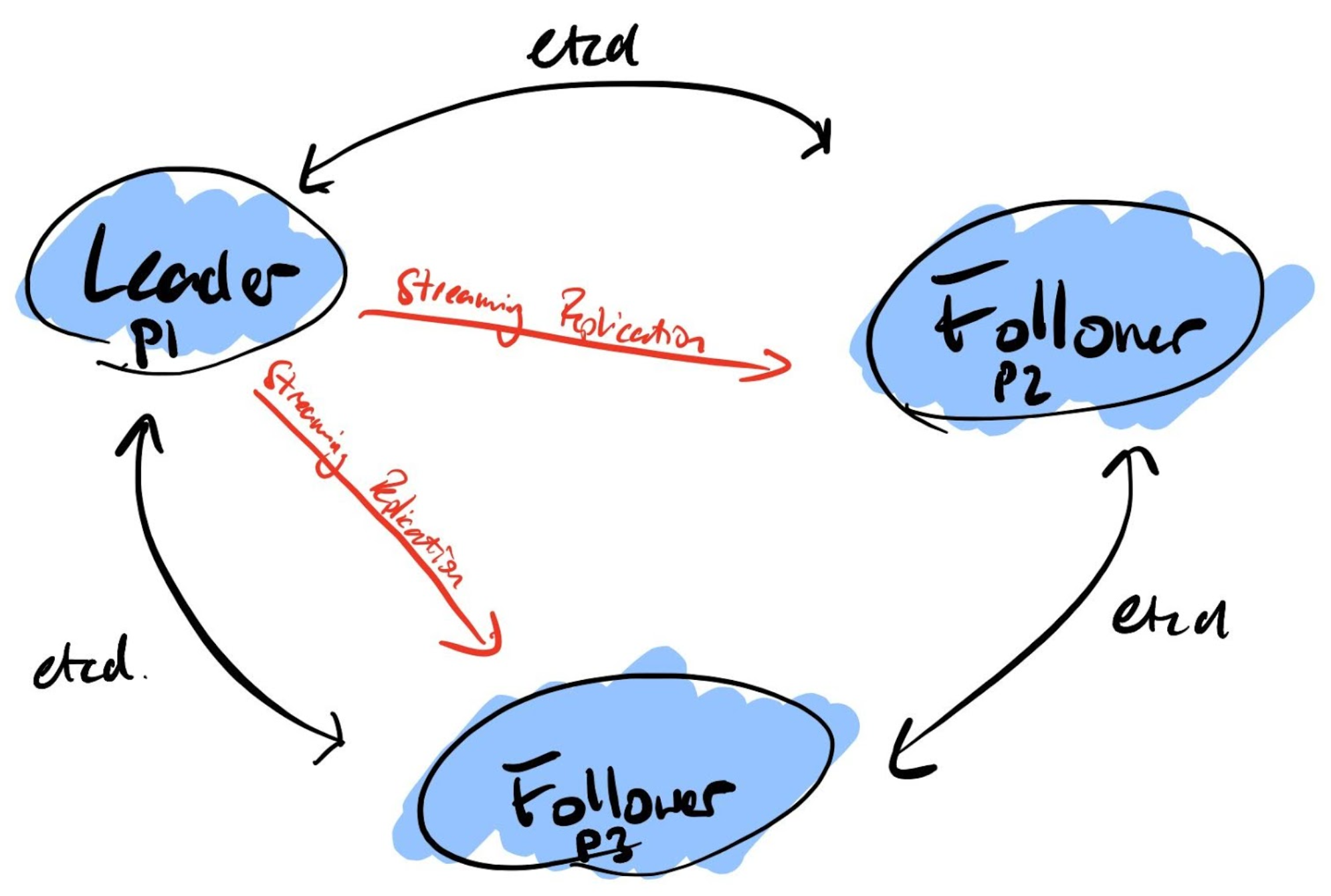

Patroni at a glance

Patroni is an automatic failover system for PostgreSQL built by Zalando. It provides automatic or manual failover and keeps all of the vital data in a distributed configuration store (DCS), which can be etcd, ZooKeeper, Consul or a pure Python Raft implementation based on the pysyncobj library. Patroni is available either as a pip package or via the official RPM/DEB PostgreSQL repositories.

When Patroni runs on top of the primary node it stores a token in the DCS. The token has a limited TTL, measured in seconds. If the primary node becomes unavailable the token expires, and patroni on the followers initiates the election of a new primary via the DCS.

When the old primary comes back online it discovers that the token is held by another node, and patroni automatically turns the old primary into a follower of the new primary.
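The expiry rule behind the leader token can be shown with a toy model. This is only an illustration of the idea: in a real cluster the DCS enforces the TTL and patroni renews the token on every loop. The function name token_expired and the timestamps are invented for the example.

```shell
# Toy model of the leader token: it stays valid until acquired_at + ttl.
# (Hypothetical helper; the DCS does this bookkeeping in a real cluster.)
token_expired() {
  acquired_at="$1"; ttl="$2"; now="$3"
  [ "$now" -ge $((acquired_at + ttl)) ]
}

# Token taken at t=100 with a 10 second TTL and never renewed:
if token_expired 100 10 105; then echo "t=105: election"; else echo "t=105: primary keeps the token"; fi
if token_expired 100 10 111; then echo "t=111: election"; else echo "t=111: primary keeps the token"; fi
```

As long as the primary keeps renewing the token before the TTL runs out, the followers never see it expire and no election takes place.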

The database connections do not happen directly to the database nodes but are routed via a connection proxy like HAProxy or pgbouncer. This proxy, by querying the patroni rest api, determines the active node.

It’s then clear that by using Patroni the risk of having a split brain scenario is very limited.

However, by using patroni a DBA needs to hand over manual database administration completely to patroni, because all the dynamic settings are stored in the DCS in order to keep the participating nodes fully consistent.

In this blog post we’ll see how to build a Patroni cluster on top of CentOS 7, using a clustered etcd and an HAProxy instance active on each database node that routes database connections automatically to the primary, whatever node we decide to connect to.

Software installation

In order to set up PostgreSQL and Patroni we need to add the official pgdg (PostgreSQL Global Development Group) yum repository to CentOS.

The PostgreSQL website has an easy to use wizard to grab the commands depending on the distribution in use, at the URL https://www.postgresql.org/download/linux/redhat/.

For CentOS 7, adding the yum repository is as simple as this:

    sudo yum install -y https://download.postgresql.org/pub/repos/yum/reporpms/EL-7-x86_64/pgdg-redhat-repo-latest.noarch.rpm

We can now install the PostgreSQL 13 binaries by running the following command.

    sudo yum install -y postgresql13-server postgresql13 postgresql13-contrib

In order to use the packaged version of patroni we need to install the epel-release package, which provides additional packages required by patroni.

    sudo yum install -y epel-release

Finally we can install the following packages:

- python36

- patroni

- patroni-etcd

- etcd

- haproxy
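The package list above can be installed in one step. The sketch below only assembles and prints the command so that it can be reviewed first; it assumes the pgdg and EPEL repositories added earlier are enabled, and uses haproxy as the CentOS package name for HAProxy.

```shell
# Packages from the list above; on CentOS the HAProxy package is named "haproxy".
packages="python36 patroni patroni-etcd etcd haproxy"
install_cmd="sudo yum install -y $packages"

echo "$install_cmd"   # review the command, then run it on each node
```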

OS setup

On the CentOS base installation, firewalld and selinux need to be adjusted before configuring our Patroni cluster.

For firewalld we need to open the ports required by etcd, PostgreSQL, HAProxy and the Patroni REST API.

For selinux we need to enable the flag that allows HAProxy to bind to the IP addresses.

Firewalld

The ports required for operating patroni/etcd/haproxy/postgresql are the following.

- 5432 PostgreSQL standard port, not used by PostgreSQL itself but by HAProxy

- 6432 PostgreSQL listening port used by HAProxy to route the database connections

- 2380 etcd peer urls port required by the etcd members communication

- 2379 etcd client port required by any client including patroni to communicate with etcd

- 8008 patroni rest api port required by HAProxy to check the nodes status

- 7000 HAProxy port to expose the proxy’s statistics

Enabling the ports is very simple and can be automated via script or ansible using the firewalld module.

By using bash it is possible to enable the ports with a simple for loop.

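    # Open every port needed by HAProxy, PostgreSQL, etcd and the patroni REST API
    for service_port in 5432 6432 2380 2379 8008 7000
    do
        sudo firewall-cmd --permanent --zone=public --add-port=${service_port}/tcp
    done
    # Reload firewalld so the permanent rules become active
    sudo systemctl reload firewalld

selinux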

selinux by default prevents new services from binding to all the IP addresses.

In order to allow HAProxy to bind the ports required for its functionality we need to run this command.

    sudo setsebool -P haproxy_connect_any=1

Network

For our example we are using three VirtualBox virtual machines. Each machine has two network interfaces. The first interface is bridged on the host network adapter and is used for internet access. The second interface is connected to the host-only network provided by VirtualBox and is used for communication between the machines.

The second interface is configured with a static IP address, and each node has a hosts file that maps the IP addresses of all the hosts to their machine names.

    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.56.40 patroni01
    192.168.56.41 patroni02
    192.168.56.42 patroni03

Etcd three node cluster

We are now ready to configure etcd to work as a cluster of three nodes.

To do so we need to edit the file /etc/etcd/etcd.conf and modify the following variables.

    ETCD_LISTEN_PEER_URLS="http://192.168.56.40:2380"
    ETCD_LISTEN_CLIENT_URLS="http://192.168.56.40:2379"
    ETCD_NAME="patroni01"
    ETCD_INITIAL_ADVERTISE_PEER_URLS="http://192.168.56.40:2380"
    ETCD_ADVERTISE_CLIENT_URLS="http://192.168.56.40:2379"
    ETCD_INITIAL_CLUSTER="patroni01=http://192.168.56.40:2380,patroni02=http://192.168.56.41:2380,patroni03=http://192.168.56.42:2380"

All the variables except ETCD_INITIAL_CLUSTER are machine specific and must be set according to the machine name and IP address.

The variable ETCD_INITIAL_CLUSTER is a comma separated values list of the hosts participating in the etcd cluster.
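Since only the name and IP address change between nodes, the variables can be generated rather than typed three times. The sketch below is one possible way to do it with the example hosts. The function names initial_cluster and etcd_node_conf are invented for this post, and the output is printed for review rather than written to /etc/etcd/etcd.conf.

```shell
# Cluster members as "name=ip" pairs (the example hosts from this post).
members="patroni01=192.168.56.40 patroni02=192.168.56.41 patroni03=192.168.56.42"

# Build the ETCD_INITIAL_CLUSTER value, which is identical on every node.
initial_cluster() {
  list=""
  for m in $members; do
    list="${list:+$list,}${m%%=*}=http://${m#*=}:2380"
  done
  printf '%s\n' "$list"
}

# Print the variables for one node, given its name and IP address.
etcd_node_conf() {
  name="$1"; ip="$2"
  cat <<EOF
ETCD_NAME="$name"
ETCD_LISTEN_PEER_URLS="http://$ip:2380"
ETCD_LISTEN_CLIENT_URLS="http://$ip:2379"
ETCD_INITIAL_ADVERTISE_PEER_URLS="http://$ip:2380"
ETCD_ADVERTISE_CLIENT_URLS="http://$ip:2379"
ETCD_INITIAL_CLUSTER="$(initial_cluster)"
EOF
}

etcd_node_conf patroni02 192.168.56.41
```

Running the last line with each node's name and address gives the block to place in that node's etcd.conf.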

After configuring etcd on each node we can enable and start the service.

    sudo systemctl enable etcd
    sudo systemctl start etcd

We can check the cluster’s health status with the following command.

    etcdctl --endpoints http://patroni01:2379 cluster-health
    member 75e96c8926bc6382 is healthy: got healthy result from http://192.168.56.40:2379
    member 7c1dfc5e13a8008a is healthy: got healthy result from http://192.168.56.42:2379
    member c920522ba9a75e17 is healthy: got healthy result from http://192.168.56.41:2379
    cluster is healthy

Wrap up

We’ve seen how to configure a three node etcd cluster on the patroni nodes. This example configuration is made of three members.

In the next posts we’ll see how to configure and initialise a patroni cluster using the DCS (etcd) running on the database nodes, and how to set up HAProxy to route the database connections efficiently to the active leader.
