
The post Apache Pulsar standalone usage and basic topics appeared first on digitalis.io.

In my last Pulsar post I did a side-by-side comparison of Apache Kafka and Apache Pulsar. Let’s continue looking at Pulsar a little closer, as there are some really interesting things when it comes to topics and the options available.

Starting a Standalone Pulsar Cluster

As with Kafka, Pulsar lets you operate a standalone cluster so you can get to grips with the basics. For this blog I’m going to assume that you have installed the Pulsar binaries. While you can operate Pulsar in a Docker container or via Kubernetes, I will not be covering those in this post.

In the bin directory of the Pulsar distribution is the pulsar command. This lets you start either a standalone cluster or the Zookeeper, Bookkeeper and Pulsar broker components separately. I’m going to start a standalone cluster:

$ bin/pulsar standalone

After a few minutes you will see that the cluster is up and running.

11:02:06.902 [worker-scheduler-0] INFO org.apache.pulsar.functions.worker.SchedulerManager - Schedule summary - execution time: 0.042227224 sec | total unassigned: 0 | stats: {"Added": 0, "Updated": 0, "removed": 0}

{

"c-standalone-fw-localhost-8080" : {

"originalNumAssignments" : 0,

"finalNumAssignments" : 0,

"instancesAdded" : 0,

"instancesRemoved" : 0,

"instancesUpdated" : 0,

"alive" : true

}

}

Pulsar Consumers

In the comparison blog post I noted that where Kafka consumers pull messages from the brokers, Pulsar pushes messages out to consumers. Pulsar uses subscriptions to route messages from the brokers to any number of subscribed consumers. The read position in the log is tracked by the brokers, not by the consumers.
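To make the idea of a broker-held read position a little more concrete, here is a toy sketch in Python. It is not Pulsar code: the ToyBroker class and its connect, disconnect and publish methods are names I have made up for illustration, and it assumes one consumer per subscription and that a brand-new subscription starts at the end of the log.

```python
class ToyBroker:
    """Toy model: the broker, not the consumer, remembers each subscription's position."""

    def __init__(self):
        self.log = []        # messages in arrival order
        self.cursors = {}    # subscription name -> index of the next message to push
        self.consumers = {}  # subscription name -> callback of the connected consumer

    def connect(self, subscription, callback):
        # Assumption: a new subscription starts at the end of the log.
        # An existing subscription keeps the position the broker stored for it.
        self.cursors.setdefault(subscription, len(self.log))
        self.consumers[subscription] = callback
        self._push(subscription)

    def disconnect(self, subscription):
        # The consumer goes away, but the cursor stays on the broker.
        self.consumers.pop(subscription, None)

    def publish(self, message):
        self.log.append(message)
        for subscription in list(self.consumers):
            self._push(subscription)

    def _push(self, subscription):
        # Push every message the subscription has not yet been sent.
        callback = self.consumers[subscription]
        while self.cursors[subscription] < len(self.log):
            callback(self.log[self.cursors[subscription]])
            self.cursors[subscription] += 1


broker = ToyBroker()
received = []
broker.connect("st-subscription-1", received.append)
broker.publish("first")
broker.disconnect("st-subscription-1")
broker.publish("second")                              # nobody connected, cursor stays put
broker.connect("st-subscription-1", received.append)  # backlog is pushed on reconnect
print(received)
```

The consumer never tells the broker where to resume from; reconnecting with the same subscription name is enough for the broker to push whatever was missed.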

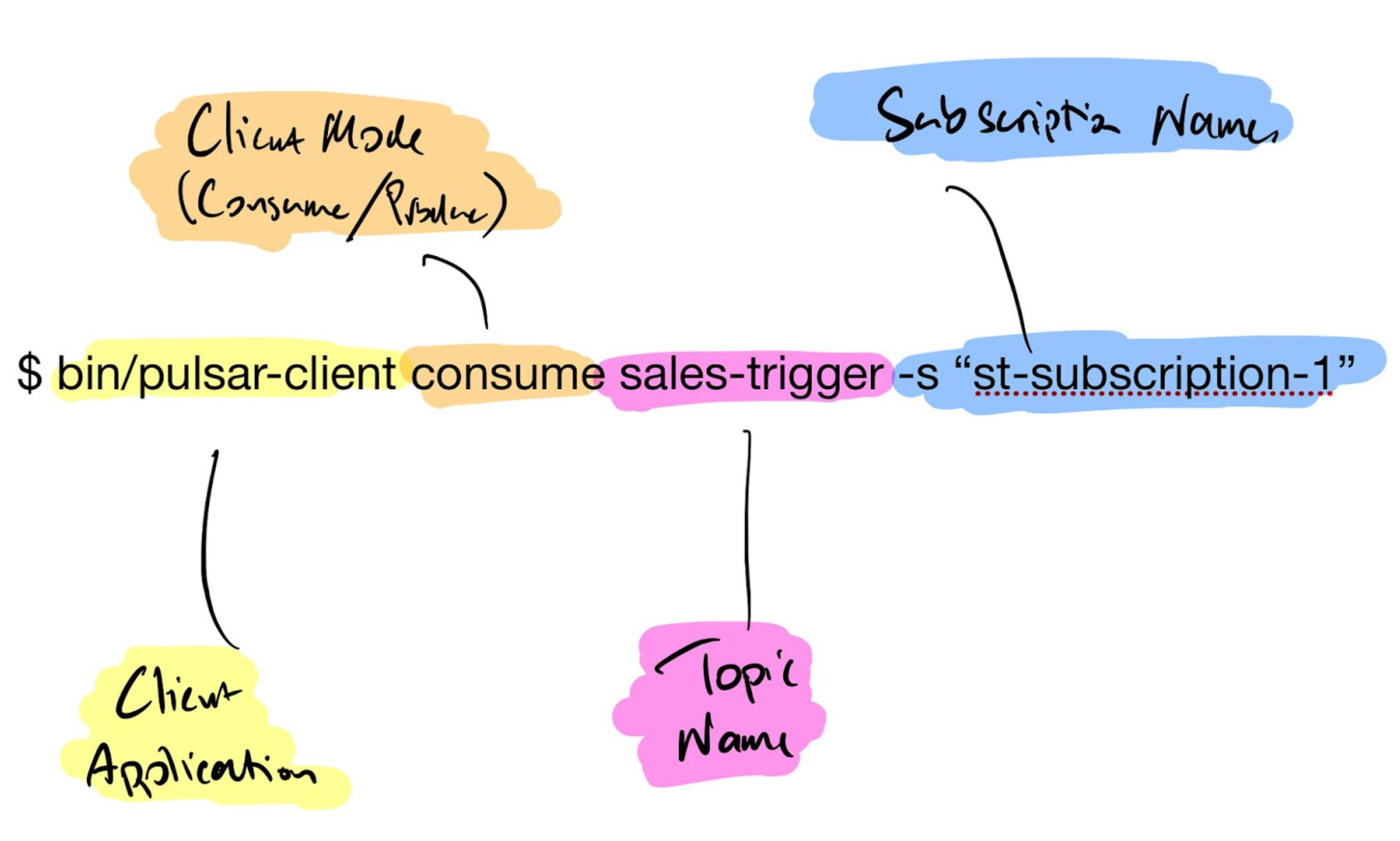

I’m going to connect a basic consumer to the standalone cluster. In the bin directory there is a Pulsar client application that we can use without having to code anything, very similar to the Kafka console-producer and console-consumer applications.

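$ bin/pulsar-client consume sales-trigger -s "st-subscription-1"

Let’s break this command down a little bit.

- The consume command – runs the client as a consumer.

- sales-trigger – the name of the topic to consume from.

- The -s flag – the name of the subscription to use, here st-subscription-1.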

Once executed you will see in the consumer application output that it has subscribed to the topic and is waiting for a message.

12:04:50.621 [pulsar-client-io-1-1] INFO org.apache.pulsar.client.impl.ConsumerImpl - [sales-trigger][st-subscription-1] Subscribing to topic on cnx [id: 0x049a4567, L:/127.0.0.1:63912 - R:localhost/127.0.0.1:6650], consumerId 0

12:04:50.664 [pulsar-client-io-1-1] INFO org.apache.pulsar.client.impl.ConsumerImpl - [sales-trigger][st-subscription-1] Subscribed to topic on localhost/127.0.0.1:6650 -- consumer: 0

You may have noticed that the topic hasn’t been explicitly created, yet the consumer is up and running and waiting. Now let’s create the producer and send a message.

Pulsar Producers

Opening another terminal window, I’m going to run the Pulsar client as a producer this time and send a single message.

$ bin/pulsar-client produce sales-trigger --messages "This is a test message"

When executed, the producer will connect to the cluster and send the message. The output shows that the message was sent.

13:50:56.342 [main] INFO org.apache.pulsar.client.cli.PulsarClientTool - 1 messages successfully produced

If you are running your own consumer and producer, take a look at the consumer now and see what has happened: it has received the message from the broker and then cleanly exited.

----- got message -----

key:[null], properties:[], content:This is a test message

13:50:56.378 [main] INFO org.apache.pulsar.client.impl.PulsarClientImpl - Client closing. URL: pulsar://localhost:6650/

13:50:56.404 [pulsar-client-io-1-1] INFO org.apache.pulsar.client.impl.ConsumerImpl - [sales-trigger] [st-subscription-1] Closed consumer

13:50:56.409 [pulsar-client-io-1-1] INFO org.apache.pulsar.client.impl.ClientCnx - [id: 0x049a4567, L:/127.0.0.1:63912 ! R:localhost/127.0.0.1:6650] Disconnected

13:50:56.422 [main] INFO org.apache.pulsar.client.cli.PulsarClientTool - 1 messages successfully consumed

$

If you are used to Kafka you would expect your consumer client to keep waiting for more messages from the broker. With Pulsar, however, this is not the default behaviour of the client application.

Ideally the client consumer should keep running, awaiting more messages from the brokers. There is an additional flag in the client that can be set.

$ bin/pulsar-client consume sales-trigger -s "st-subscription-1" -n 0

The -n flag sets the number of messages to accept before the consumer disconnects from the cluster and closes. The default is 1 message. If it is set to 0 then there is no limit and the consumer will receive every message the brokers push to it.

As with the consumer, the producer can send multiple messages in one execution:

$ bin/pulsar-client produce sales-trigger --messages "This is a test message" -n 100

With the -n flag in produce mode, the client will send one hundred messages to the broker.

15:01:03.339 [main] INFO org.apache.pulsar.client.cli.PulsarClientTool - 100 messages successfully produced

The active consumer will receive the messages and await more.

----- got message -----

key:[null], properties:[], content:This is a test message

----- got message -----

key:[null], properties:[], content:This is a test message

----- got message -----

key:[null], properties:[], content:This is a test message

----- got message -----

key:[null], properties:[], content:This is a test message

----- got message -----

key:[null], properties:[], content:This is a test message

Keys and Properties

You may have noticed in the consumer output that along with the content of the message are two other sections, a key and properties.

Each message can have a key, which is optional but highly advised. Properties are key/value pairs, and you can set multiple properties by separating them with commas. Suppose I want an action property holding some form of command, with the key being the current Unix timestamp. The client command would look like the following:

$ bin/pulsar-client produce sales-trigger --messages "This is a test message" -n 100 -p action=create -k `date +%s`

As the consumer is still running, awaiting new messages, you will see the output with the key and properties.

----- got message -----

key:[1611328125], properties:[action=create], content:This is a test message

Persistent and Non-Persistent Messages

There are a few differences between Kafka and Pulsar when it comes to persistence of messages. By default Pulsar will treat a topic as persistent and will save messages to the Bookkeeper instances (called Bookies).

Kafka has a time to live for messages regardless of whether the consumer has read them or not. The default is seven days (168 hours). Pulsar, by contrast, keeps messages persisted until every subscription has successfully read them and acknowledged them back to the broker. Only then are the messages removed from storage.

Pulsar can be configured, and should be in production environments, to have a time-to-live (TTL) for messages held in persistent storage.
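The difference between the two retention models can be sketched in a few lines of Python. This is a simplified illustration of the rules described above, not how either system is implemented: the function names, the message dictionaries and the ttl_seconds parameter are all assumptions of mine.

```python
SEVEN_DAYS = 168 * 3600  # Kafka's default retention, in seconds


def kafka_retained(messages, now, retention_seconds=SEVEN_DAYS):
    """Time based: keep a message until it is older than the retention period,
    whether or not anyone has read it."""
    return [m for m in messages if now - m["published_at"] < retention_seconds]


def pulsar_retained(messages, acked_by_subscription, now, ttl_seconds=None):
    """Acknowledgement based: keep a message until every subscription has
    acknowledged it. An optional TTL (assumed here) expires it regardless."""
    kept = []
    for m in messages:
        acked_by_all = all(m["id"] in acked for acked in acked_by_subscription.values())
        expired = ttl_seconds is not None and now - m["published_at"] >= ttl_seconds
        if not acked_by_all and not expired:
            kept.append(m)
    return kept


messages = [{"id": 1, "published_at": 0}, {"id": 2, "published_at": 100}]
acks = {"st-subscription-1": {1, 2}, "st-subscription-2": {1}}

# Message 2 is still unacknowledged by st-subscription-2, so without a TTL it is kept.
print(pulsar_retained(messages, acks, now=10**9))
# With a 60 second TTL the same message expires.
print(pulsar_retained(messages, acks, now=200, ttl_seconds=60))
```

Without a TTL, a subscription that never acknowledges keeps its backlog growing indefinitely, which is why setting one matters in production.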

In Memory Topics

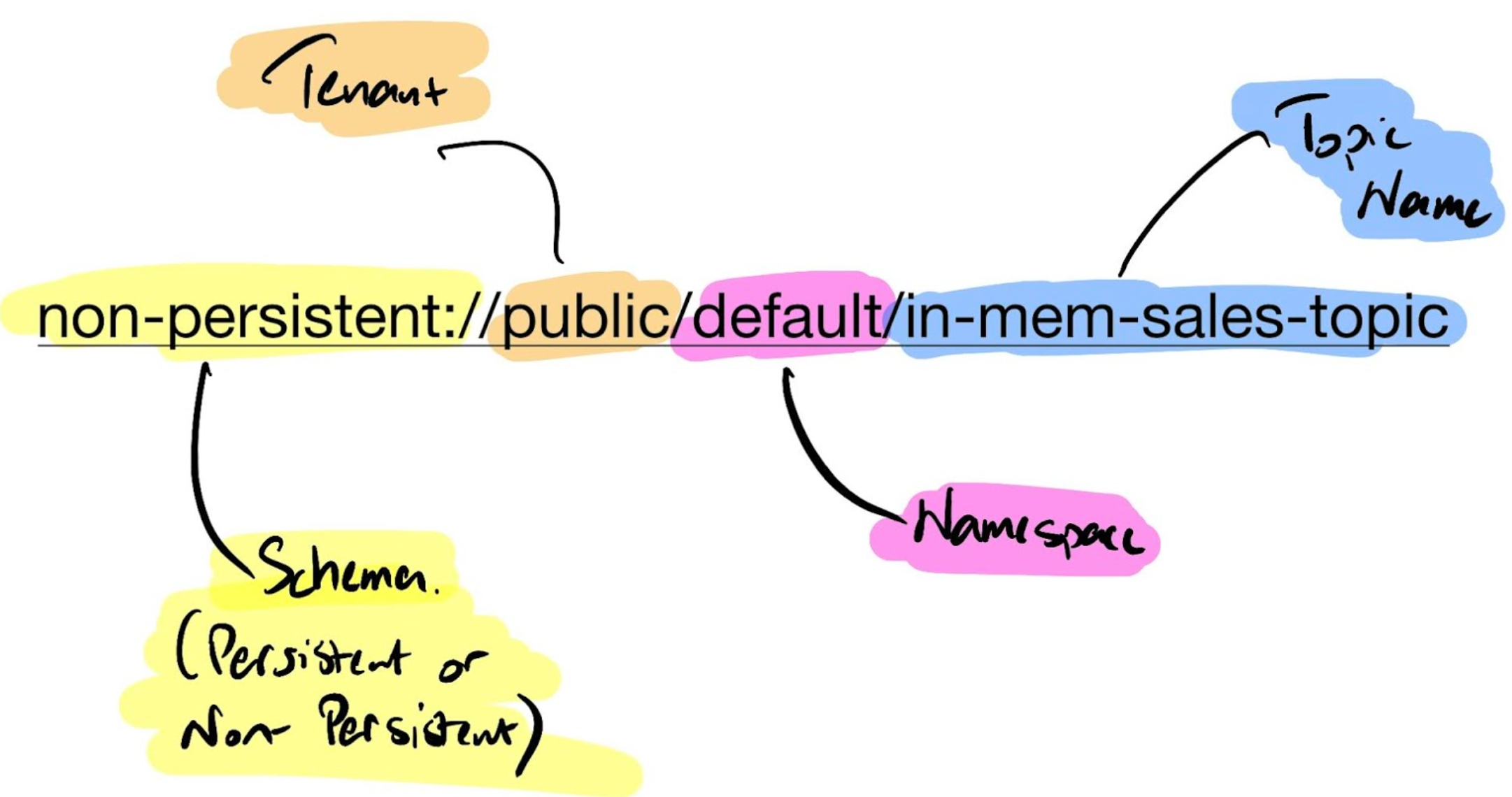

If you wish for topic messages to be stored within memory and not to disk then non-persistent topics are available.

Non-persistent topics can be created from the client, but this requires the full topic name, including the tenant and namespace.
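The full name has the shape domain://tenant/namespace/topic, where the domain is either persistent or non-persistent. A short name such as sales-trigger is treated as a persistent topic in the public tenant and default namespace. Here is a small Python sketch of that naming rule; the expand_topic and parse_topic helpers are my own illustration and not part of any Pulsar library.

```python
def expand_topic(name, tenant="public", namespace="default"):
    """Return the full topic name, filling in the defaults for a short name."""
    if "://" in name:
        return name  # already a full name, leave it alone
    return f"persistent://{tenant}/{namespace}/{name}"


def parse_topic(name):
    """Split a short or full topic name into its parts."""
    domain, rest = expand_topic(name).split("://", 1)
    tenant, namespace, topic = rest.split("/", 2)
    return {
        "domain": domain,
        "tenant": tenant,
        "namespace": namespace,
        "topic": topic,
        "in_memory": domain == "non-persistent",
    }


print(expand_topic("sales-trigger"))
print(parse_topic("non-persistent://public/default/sales-trigger2"))
```

The commands that follow use these full names.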

$ bin/pulsar-client consume non-persistent://public/default/sales-trigger2 -s "st-subscription-2" -n 0

$ bin/pulsar-client consume persistent://public/default/sales-trigger2 -s "st-subscription-2" -n 0

Listing Topics

The Pulsar admin client handles all aspects of the cluster from the command line. This includes brokers, bookies, topics, TTL configurations and, if required, specific configurations for named subscriptions.

For now, let’s just list the topics I’ve been working with in this post:

$ bin/pulsar-admin topics list public/default

"non-persistent://public/default/sales-trigger2"

"persistent://public/default/sales-trigger"

Summary

This post should give you a basic starting point for how the Pulsar client and the standalone cluster work. Consumers and producers give us the backbone of a streaming application, along with added features such as choosing whether a topic is persistent or non-persistent (in memory).

All this has been done from the command line. In a future post I’ll look at putting a basic Producer and Consumer application together in code.

If you would like to know more about how to implement modern data, streaming and cloud technologies into your business, we at Digitalis do it all: from cloud migration to fully managed services, we can help you modernize your operations, data, streaming and applications. We provide consulting and managed services on cloud, data, and DevOps for any business type. Contact us for more information.

Jason Bell

DevOps Engineer and Developer

With over 30 years of experience in software, customer loyalty data and big data, Jason now focuses his energy on Kafka and Hadoop. He is also the author of Machine Learning: Hands on for Developers and Technical Professionals. Jason is considered a stalwart in the Kafka community. Jason is a regular speaker on Kafka technologies, AI and customer and client predictions with data.


The post Apache Kafka vs Apache Pulsar appeared first on digitalis.io.

Digitalis has extensive experience in designing, building and maintaining data streaming systems across a wide variety of use cases – on premises, all cloud providers and hybrid. If you would like to know more or want to chat about how we can help you, please reach out.

When we talk about streaming data systems it’s hard to ignore Apache Kafka. Its adoption has risen dramatically over the last five years. The ecosystem around it has grown too. While Kafka dominates the online talks, meetups and conference agendas, there are other streaming platforms that exist.

In this blog post I’m going to compare Apache Kafka and Apache Pulsar. By the end of this post you should have a good comparison of the two platforms. I will cover the core components and some of the common requirements of any streaming platform.

The Core Components

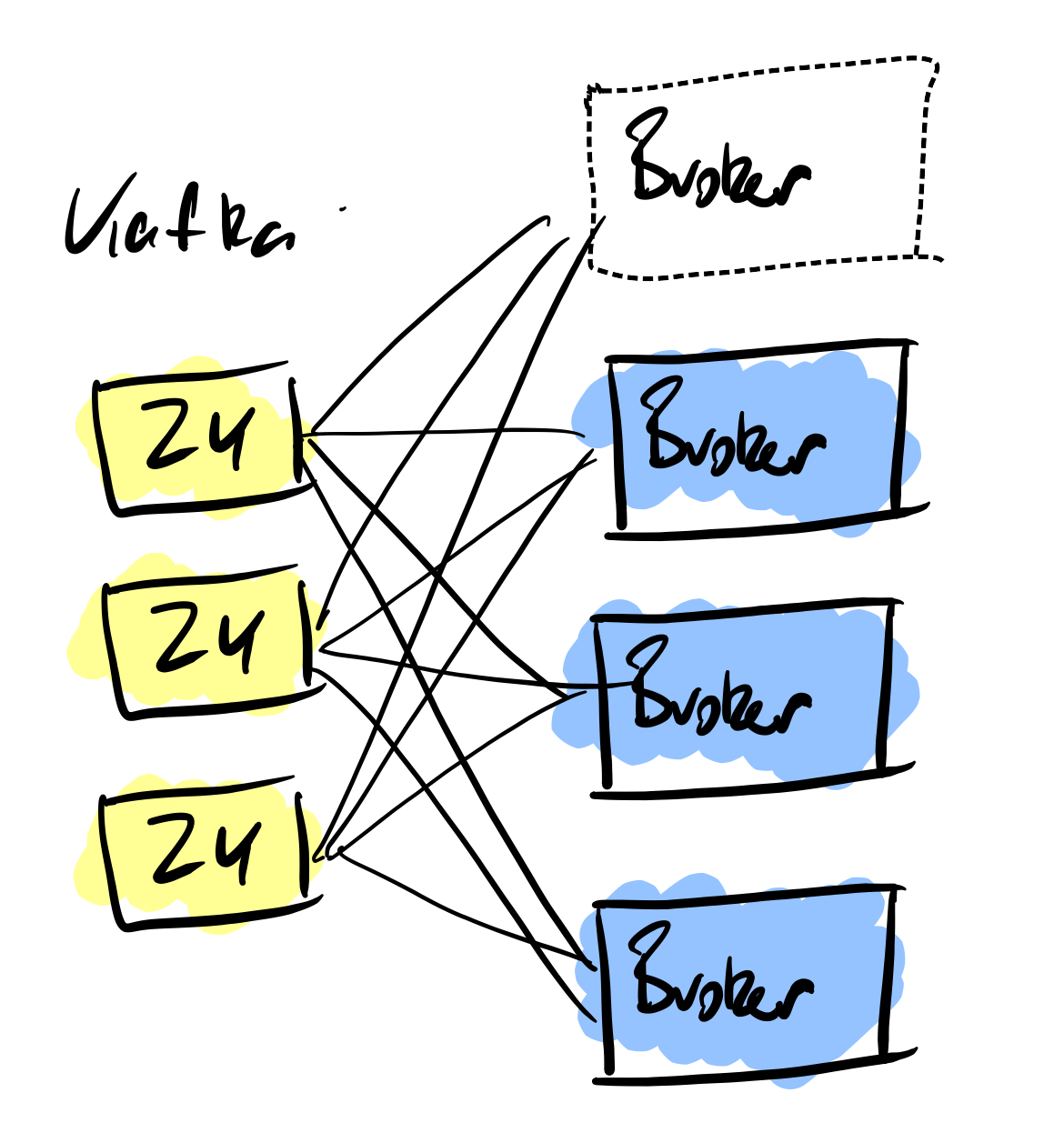

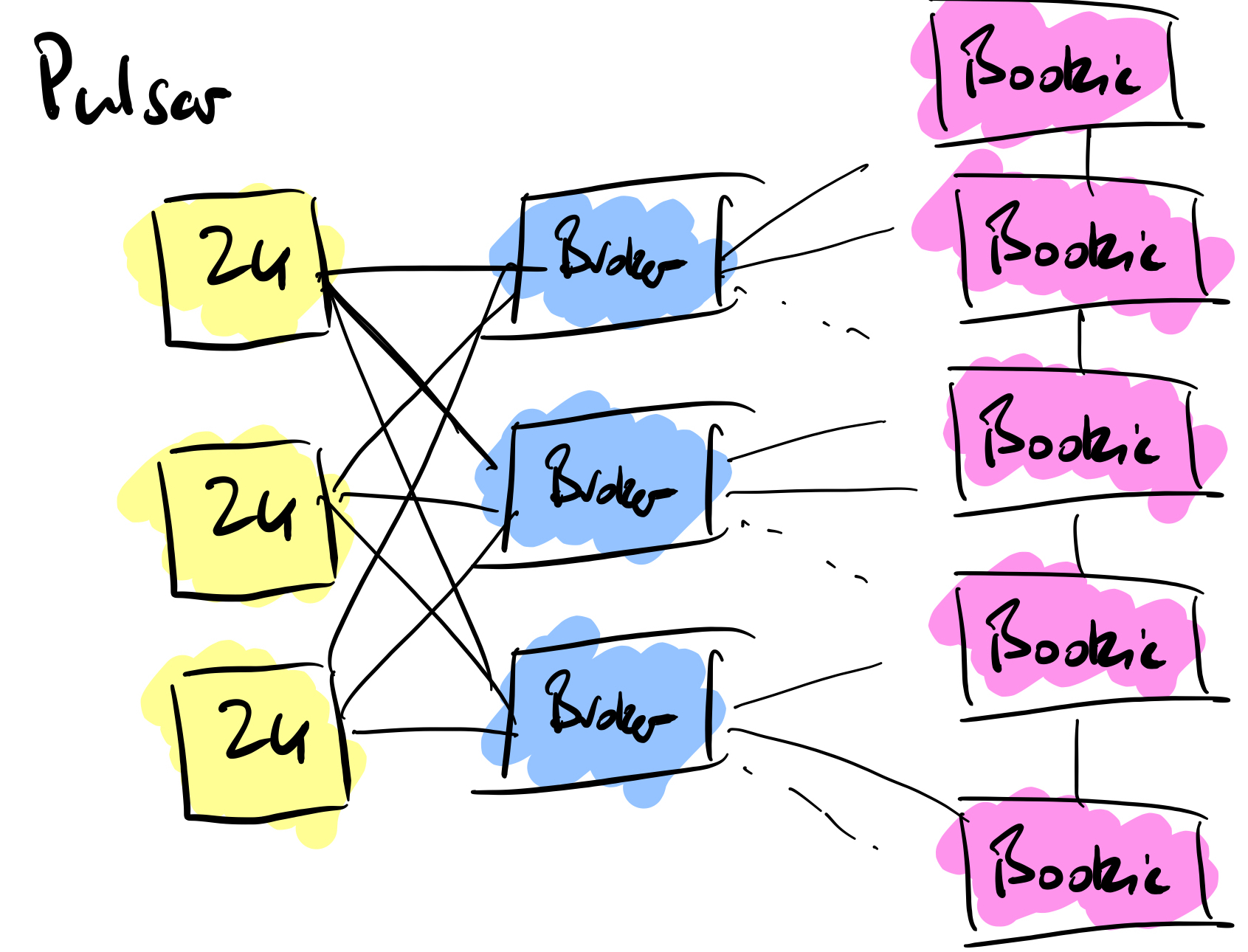

Brokers

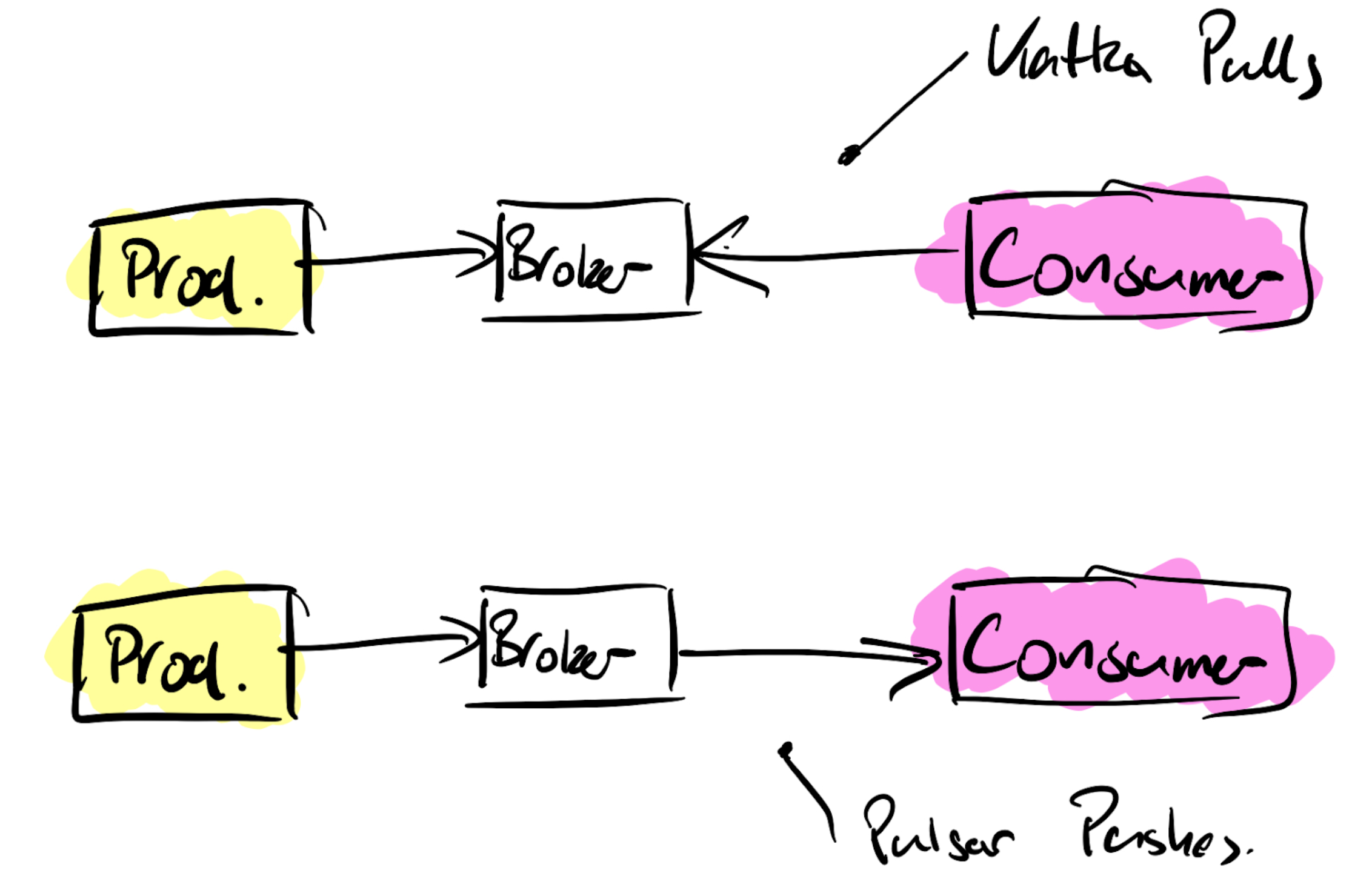

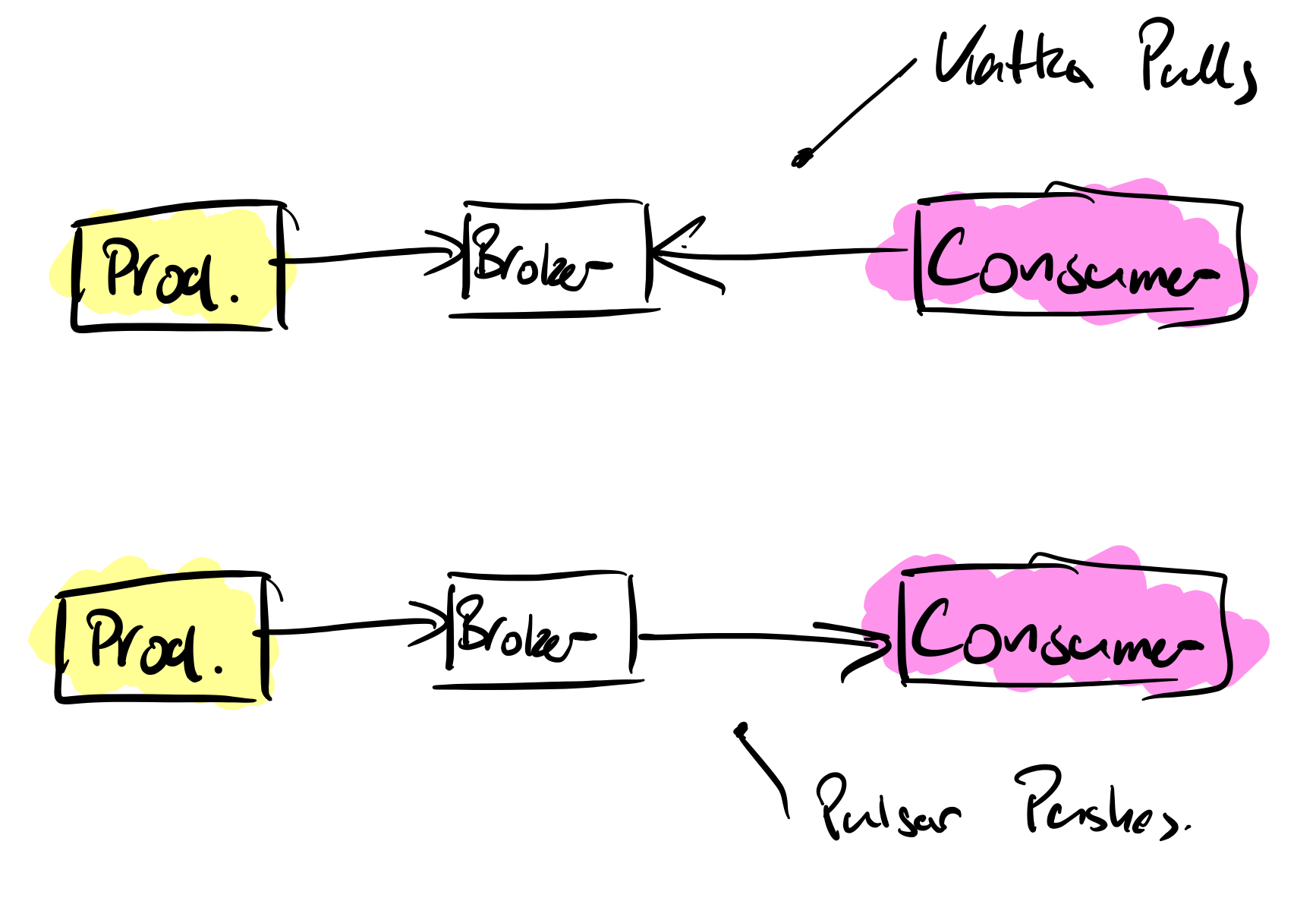

Both Kafka and Pulsar are built on a broker architecture. The brokers accept incoming messages from producers and then serve those messages to consumers. With Kafka the messages are pulled from the brokers by the consumers. In Pulsar it’s the other way around: they are pushed to the subscribing consumers.

One of the major advantages of Pulsar over Kafka is the number of topics you can create. There are practical limits on a Kafka cluster when it comes to partitions: the commonly quoted guidance is a maximum of around 4,000 partitions per broker and 200,000 across the entire cluster, so there will come a time when you cannot create more topics. Pulsar doesn’t suffer from this limitation. You can scale to millions of topics because the data is not stored within the brokers themselves but externally in Bookkeeper nodes.
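As a rough back-of-the-envelope check, the figures quoted above (4,000 partitions per broker, 200,000 per cluster) can be turned into a topic ceiling for a given cluster size. This Python sketch is only arithmetic on those two numbers; the function names are mine and it ignores replication and everything else that affects real capacity.

```python
PARTITIONS_PER_BROKER = 4_000     # per-broker figure quoted in the text
PARTITIONS_PER_CLUSTER = 200_000  # cluster-wide figure quoted in the text


def max_partitions(brokers):
    """Partition ceiling for a cluster: whichever limit is hit first."""
    return min(brokers * PARTITIONS_PER_BROKER, PARTITIONS_PER_CLUSTER)


def max_topics(brokers, partitions_per_topic):
    """How many topics fit under that ceiling at a fixed partition count per topic."""
    return max_partitions(brokers) // partitions_per_topic


for brokers in (3, 50, 100):
    print(brokers, max_partitions(brokers), max_topics(brokers, partitions_per_topic=6))
```

Past 50 brokers the cluster-wide figure becomes the binding limit, so adding brokers no longer raises the ceiling.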

Zookeeper

Both systems use Apache Zookeeper for cluster coordination. Kafka, at present, uses Zookeeper for metadata on topic configuration and access control lists (ACLs), and Pulsar uses Zookeeper for the same purposes.

With the KIP-500 improvement proposal, the removal of Zookeeper from Kafka will happen – it’s currently being tested. This means that Kafka will operate on its own, relying only on the operating brokers for all the cluster metadata. It’s worth noting that Kafka can still be run in “legacy mode” if you still want to have Zookeeper handle its metadata.

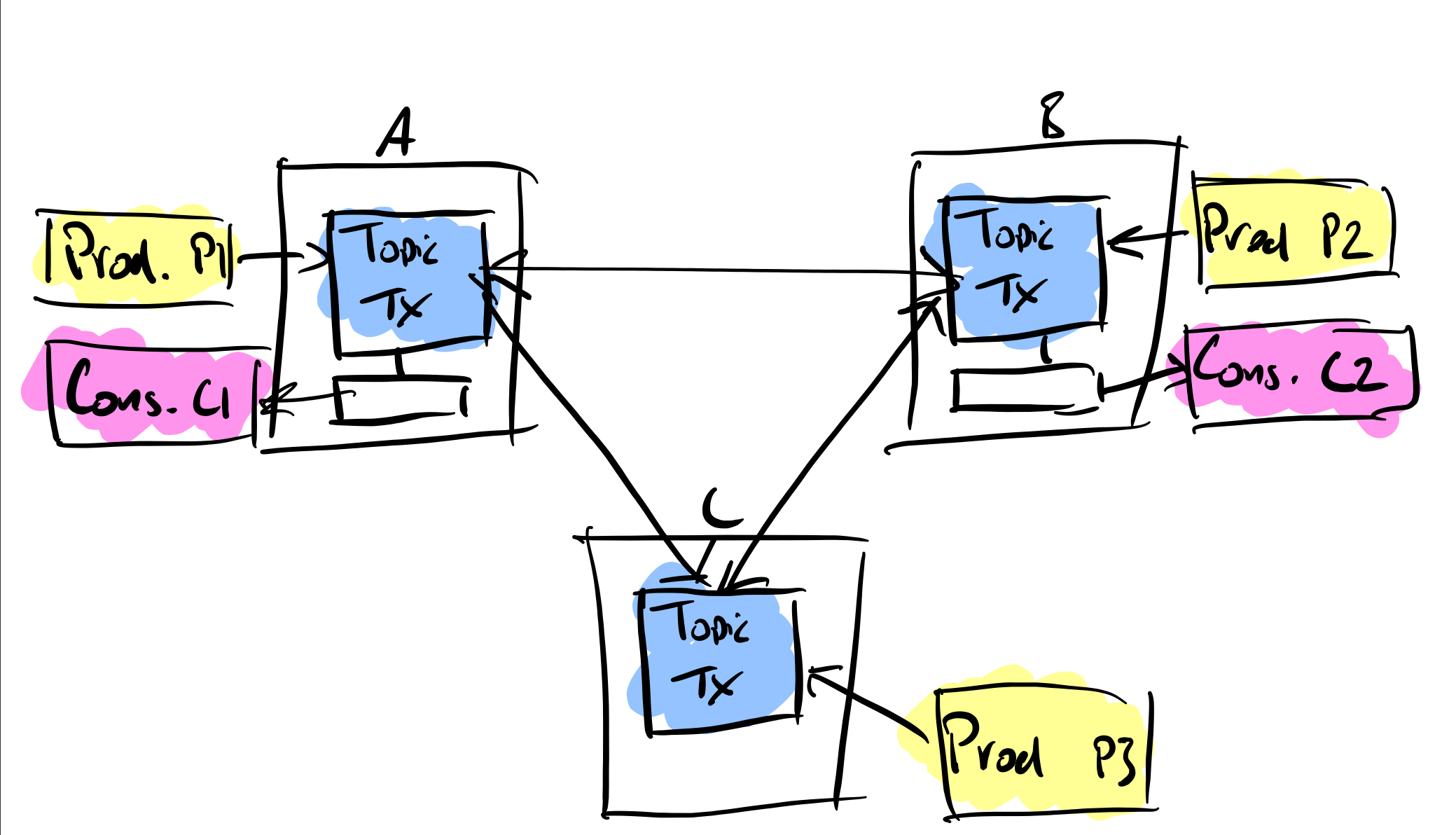

Multi Data Center Replication

For me Pulsar wins the replication battle because it provides geo-replication out of the box. A replicated cluster can be created across multiple data centers. Applications can be blocked from consuming from local clusters until messages have been replicated and acknowledged.

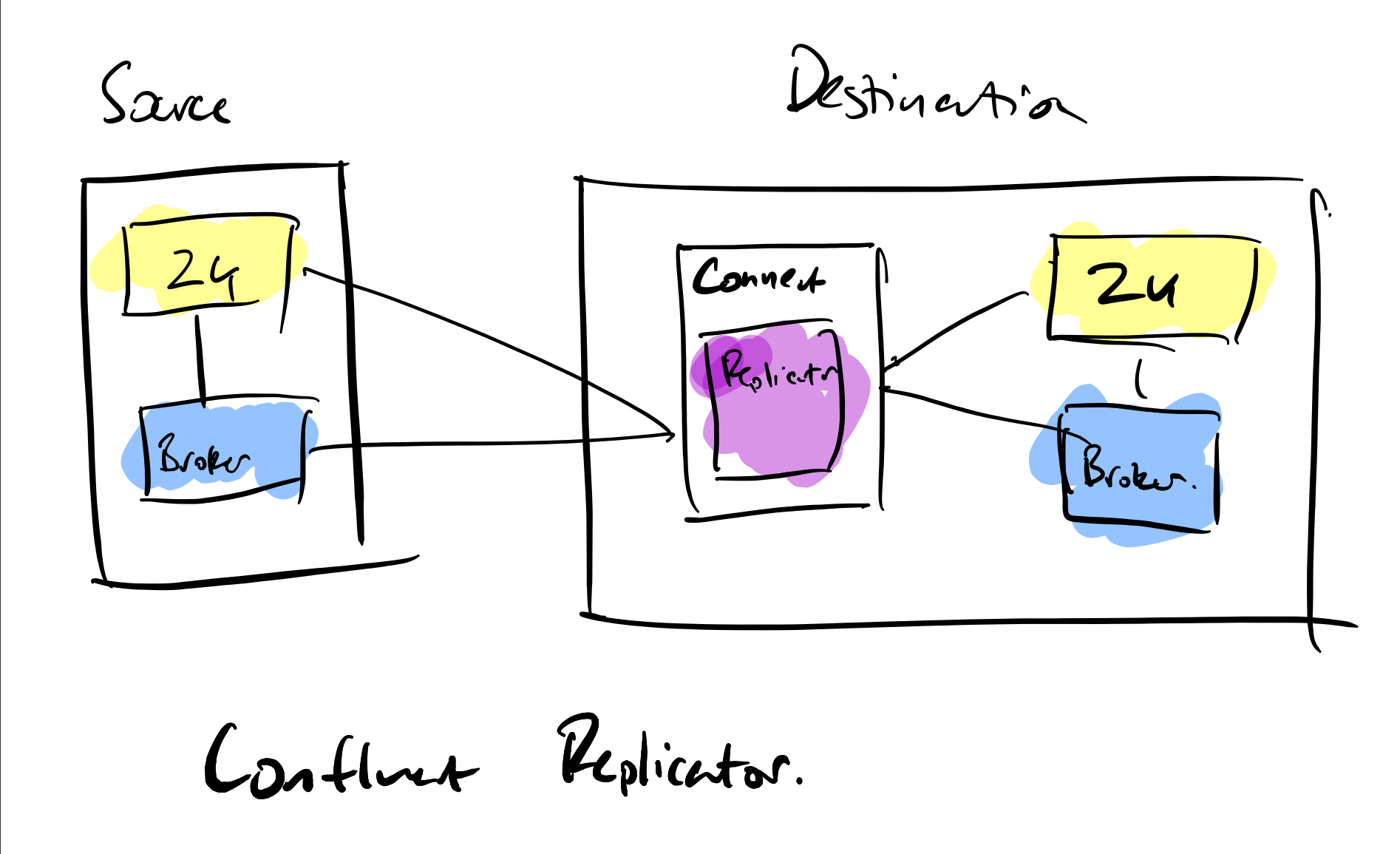

Kafka has two methods for replication: Mirror Maker 2 or Confluent Replicator. If you are using the Apache Kafka distribution then you have Mirror Maker 2. It works well but takes time to configure. If you have purchased a Confluent licence then Replicator is available to you as a standalone application or a connector running on a Kafka Connect node.

Offset handling is incredibly difficult to achieve with replicated Kafka, with some custom API coding required in applications to read from the replicated cluster. Pulsar doesn’t suffer from these problems. It’s worth pointing out that multi DC operation is coming to the Confluent Platform in the future but will be part of the paid-for licence.

Service Discovery

If you have used Kafka then you will be aware of the properties configuration and the adding of bootstrap servers, broker lists or Zookeeper nodes depending on the operation you are doing. When new brokers are added then properties need amending with the new addresses appended to the configuration.

Pulsar provides a proxy layer to address the cluster with a single address. This is a huge advantage over Kafka especially when you are deploying with frameworks such as Kubernetes where direct access to the brokers is not possible. Another win is that you are allowed to run as many Pulsar proxies as you wish and they can be accessed via a single point with a load balancer. For cloud based deployments this makes managing and accessing the cluster easy.

Scaling Clusters

As message frequencies increase there comes a time when you have to scale up the cluster to accommodate the volume of messages. With Kafka this means adding more brokers to the cluster. Adding new brokers to Kafka is not an easy task, and this is an area where Pulsar is far superior. With Kafka the broker is added to the cluster and then the manual process of repartitioning and replicating the message data to the new broker is carried out. Depending on the message volumes this can take a lot of time.

Where Kafka stores messages on the brokers, Pulsar stores them in Apache Bookkeeper rather than in the brokers themselves. The main difference is that Bookkeeper stores the unacknowledged messages and handles their replication, which separates message persistence from the brokers.

With Pulsar if you want to increase message capacity then you add as many Bookkeeper instances as you require without having to add the equivalent number of brokers (as you would with Kafka).

Pulsar provides the option to use non-persistent topics in memory, with no data being written to disk. Note, however, that if the Pulsar broker disconnects from the cluster then the messages on those non-persistent topics will be lost, whether they are held in the broker or in transit to the consumer.

Clients – Producing and Consuming

| Language | Kafka Client | Pulsar Client |
| --- | --- | --- |
| C | ✔ | |
| Clojure | ✔ | Using Java Interop |
| C# / .Net | ✔ | ✔ |
| Go | ✔ | ✔ |
| Groovy | ✔ | |
| Java | ✔ | ✔ |
| Spring Boot | ✔ | |
| Kotlin | ✔ | |
| Node.js | ✔ | |
| Python | ✔ | ✔ |
| Ruby | ✔ | |
| Rust | ✔ | |
| Scala | ✔ | |

While there are various Kafka client libraries available, it’s worth taking the time to study the Kafka features they support, as not all aspects of the Kafka APIs are covered in the client libraries. For example, if you want to use the Kafka Streams API, only Java covers that API.

One of the interesting bonuses of the Pulsar client Java libraries is that they drop in to existing Kafka producer and consumer code. The only thing that you need to do is update the client dependency in Maven. This gives you an excellent way to evaluate Pulsar without having to refactor all your code.

There are some differences between Pulsar and Kafka when it comes to reading messages. Kafka is an immutable log, with the offset recording the position in the log that the consumer will read from next. If you don’t want to get into the detail of committing your own offsets then you can let the Kafka client API do that for you.

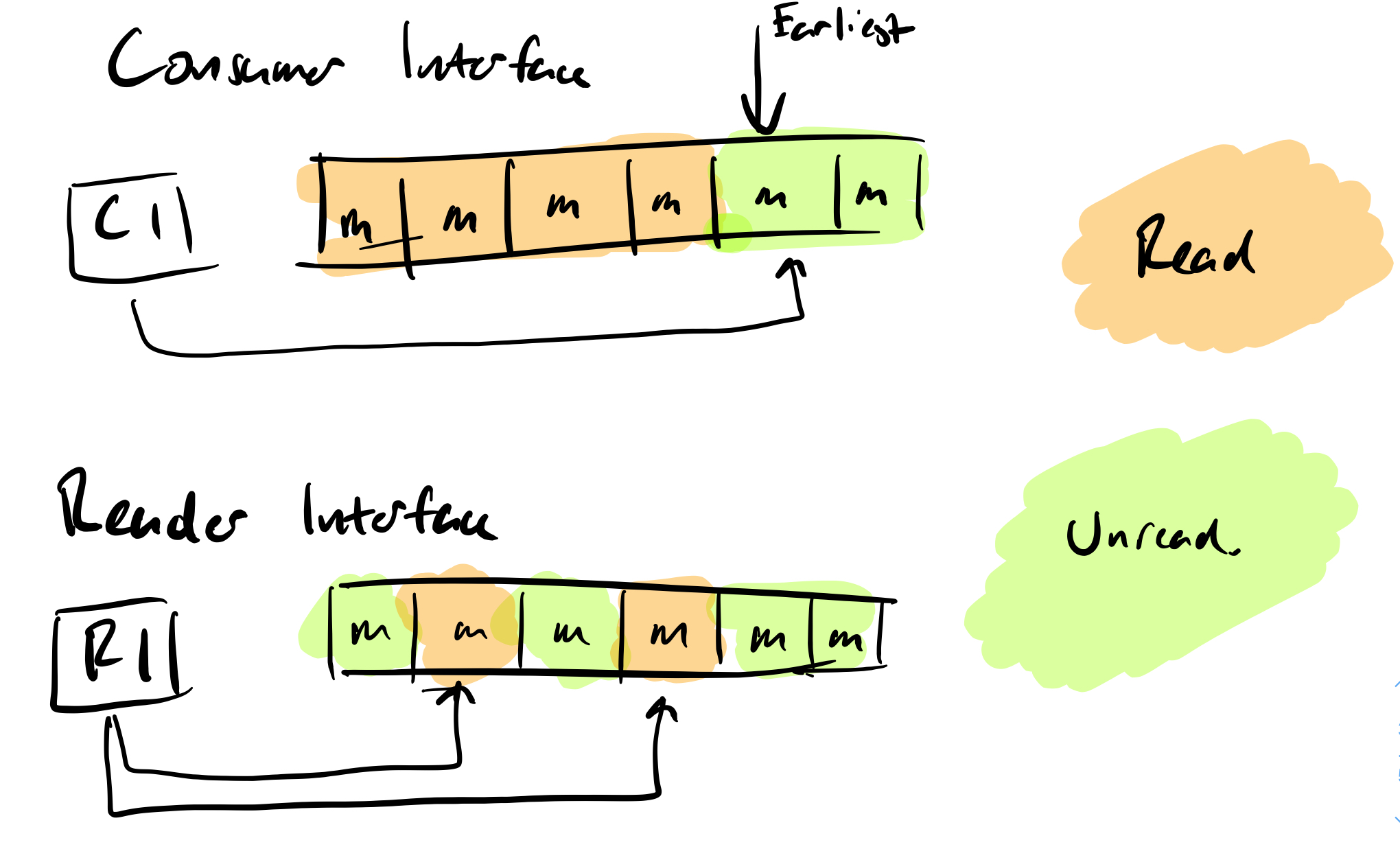

With Pulsar you have a choice of two consuming methods:

- The consumer interface – this behaves in the same way as a Kafka consumer, reading the latest available message from the log.

- The reader interface – this enables the application to read a specific message regardless of ordering in the log.
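The difference between the two interfaces can be sketched with a toy log in Python. This is an illustration of the two access patterns only, not the Pulsar client API: the ToyConsumer and ToyReader classes and their methods are hypothetical, and I am assuming a new consumer starts at the latest position.

```python
class ToyConsumer:
    """Toy consumer: starts at the end of the log and only ever moves forward."""

    def __init__(self, log):
        self.log = log
        self.position = len(log)  # assumption: begin at the latest position

    def receive(self):
        """Return the next unread message, or None if nothing new has arrived."""
        if self.position >= len(self.log):
            return None
        message = self.log[self.position]
        self.position += 1
        return message


class ToyReader:
    """Toy reader: the application chooses where in the log to read from."""

    def __init__(self, log, start=0):
        self.log = log
        self.position = start

    def seek(self, index):
        """Jump to any position in the log, forwards or backwards."""
        self.position = index

    def read_next(self):
        """Return the message at the current position, or None at the end of the log."""
        if self.position >= len(self.log):
            return None
        message = self.log[self.position]
        self.position += 1
        return message


log = ["order-1", "order-2", "order-3"]
consumer = ToyConsumer(log)
reader = ToyReader(log, start=1)

log.append("order-4")
print(consumer.receive())  # only sees what arrived after it started
print(reader.read_next())  # starts wherever the application asked
reader.seek(0)
print(reader.read_next())  # and can go back to an earlier message
```

The reader pattern suits replaying or inspecting a specific part of the log, while the consumer pattern suits processing new messages as they arrive.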

Reading and Persisting Data from External Sources

Anyone who remembers writing producers and consumers that handled database data will know it was a difficult process and difficult to scale.

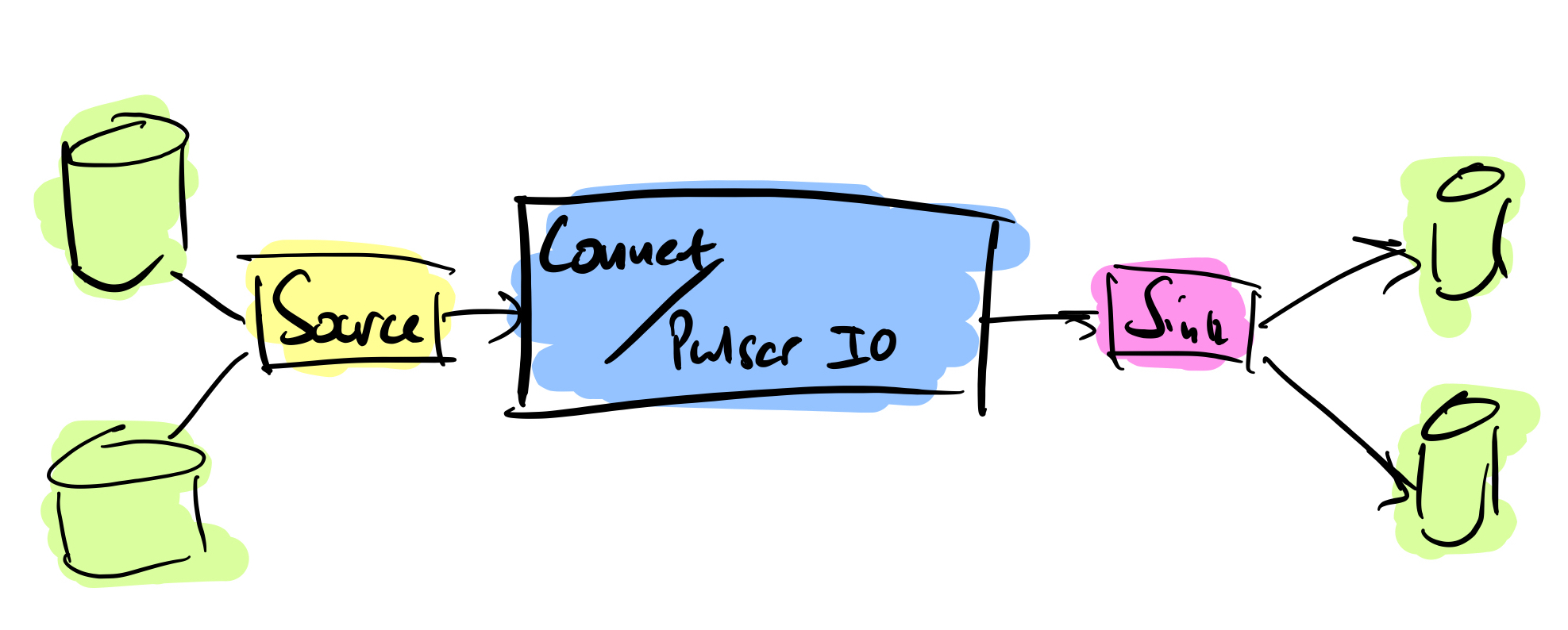

Within Kafka, the Kafka Connect system provides a convenient method of either sourcing data to topics or persisting data to a sink.

Apache Pulsar has a similar method called Pulsar IO. It has the same source/sink method of acquiring data or persisting it. The disadvantage here is the support for those external systems.

Some systems, such as Apache Cassandra, are supported by both platforms. If it’s JDBC operations that you want to do, once again both support them well. Other open source systems like Flume, Debezium, Hadoop HDFS, Solr and ElasticSearch are also supported by both. There are far more supported vendors for Kafka Connect than there are for Pulsar IO. While you can write your own plugins it is far easier to use an off-the-shelf one. In terms of connector availability Kafka Connect is an easy choice.

Please note that not all connectors for Kafka are free. Some of them you will have to purchase with a licence from Confluent (the company founded by Kafka’s original creators).

If the ease of availability and implementation is important to you then the Kafka connector support is far superior to the Pulsar option.

SQL Type Operations

Using SQL-like queries on message streams can speed up the development of basic applications by removing the need to write code. These SQL engines also make aggregating data (counting frequencies of certain keys, averages and so on) very easy.

There are SQL engines for both Kafka and Pulsar. The Kafka KSQL engine is a standalone product produced by Confluent and does not come with the Apache Kafka binaries. It is licenced under the Confluent Community Licence.

Apache Pulsar uses the Presto SQL engine to query messages with a schema stored in its schema registry. Messages are required to be ingested first and then queried, whereas KSQL streams the data, running continuously and applying the queries in the same way a Streams API application would.

While there are a few issues with KSQL once you go beyond the basics, I prefer it over Pulsar’s read and then query mechanism.

Long Term Storage

The ability to store old data beyond the retention period of the brokers is one that’s often overlooked. It has become more important as machine learning is being used on the data for recommendation systems, or for replaying the data as a system of record.

Before Kafka Connect it was common for developers to write their own streaming jobs to persist to the likes of Amazon S3 or other types of storage buckets. Tiered storage appeared in Kafka only recently and is only available in the Confluent Platform from 6.0.0 onwards as a paid-for option. Persistence is to Amazon S3, Google Cloud Storage or Pure Storage FlashBlade.

Pulsar offers tiered storage as part of the open source distribution, using the Apache jclouds framework to store data to Amazon S3 or Google Cloud Storage, with other vendors planned for the future. The fact that the tiered storage is available for free and out of the box is a huge advantage for Pulsar against Kafka.

Community Support

Having compared the features and technology of Kafka and Pulsar, to me the biggest differentiator is the community support. Everyone has questions and everyone looks for help at some point. It’s something that the Kafka community have managed to excel at, and the time investment has certainly paid off.

The support in the Confluent Slack channels is excellent (if you’re not a member and you’re using Kafka, I strongly suggest you join). There are lots of meetups available online covering various aspects of the Kafka ecosystem, so there is plenty going on.

Unfortunately Pulsar still has a small (but growing) community, so it can be difficult to find answers. The Kafka community support wins hands down. If Pulsar is to compete with Kafka going forward then this is the area I feel it needs to focus on the most.

Summary

As you would expect there are parts of Pulsar that shine and there are parts of Kafka that also shine. When it comes to connectivity to external sources and simple querying of the message data then Kafka definitely comes out on top.

On the core elements of the broker system, Pulsar offers a lot upfront, especially when it comes to using Bookies for expanding persistent storage and the ability to use tiered storage out of the box for free. If you are using frameworks like Kubernetes for deployment then Pulsar’s proxy addressing makes broker access far easier and can be load balanced if you are running multiple proxies. Pulsar also wins on multi datacenter replication out of the box, and the ability to block consumers until a message is fully replicated is a big benefit.

If the features that Pulsar provides are important to you then you really should consider Pulsar – its ease of scale, tiered storage and multi-dc support are compelling features for any streaming application.

However, community support is vitally important also. Access to help when you need it and getting answers from those who have already done those tasks is immensely advantageous when you are deploying a streaming message system. Confluent has invested heavily in supporting the Kafka community and its ecosystem. At this point I would advise anyone wanting to learn and get up and running quickly to consider Kafka.
