Why and How I Use Distributed Inference to Run a Large Language Model (LLM)

.avif)

Sometimes bigger is better

Introduction

As large language models (LLMs) continue to grow in size and capability, running them efficiently for real-world applications presents significant technical challenges. Distributed inference — spreading the workload across multiple GPUs, nodes, or even edge devices — has become an essential strategy for overcoming these hurdles. Here’s the lowdown on why we need distributed inference and how we actually make it happen.

Why Use Distributed Inference for LLMs?

Let’s look at some of the reasons why you may require Distributed Inference to run your self-hosted LLM:

Model Size Exceeds Single Device Limits

Modern LLMs, such as those with 50B+ parameters, simply cannot fit into the memory of a single GPU or even a single node. Distributed inference allows us to partition the model and spread it across multiple devices, making it possible to run inference on models that would otherwise be inaccessible.

Scalability and Throughput

As demand for LLM-powered applications (chatbots, code completion, etc.) increases, single-node optimizations hit their limits. Distributing inference across a cluster enables higher throughput and supports more concurrent users, which is critical for production workloads.

Lower Latency

Distributed systems can parallelise different parts of the inference workflow, reducing bottlenecks and improving response times. Techniques like preemptive scheduling and disaggregation of inference phases (prefill and decode) further minimise latency.

Cost-Efficiency

By pooling together commodity hardware or leveraging idle compute resources (even across unreliable or heterogeneous devices), distributed inference can dramatically reduce the cost of serving large models.

Flexibility and Resilience

Distributed systems can be designed to tolerate node failures and dynamically balance load, ensuring robust and reliable service even in the face of hardware or network issues.

How Distributed Inference Works: Tensor Parallelism and Pipeline Parallelism

These techniques are all about splitting up a huge AI model so it can run efficiently across many powerful computer chips (GPUs). Imagine tensor parallelism as taking a single, massive math problem and breaking it into smaller chunks, with each GPU tackling its own piece of the calculation simultaneously. This is great for those really big, intensive steps in the AI’s “thinking” process.

Then there’s pipeline parallelism, which is like an assembly line. Each GPU handles a specific section or “layer” of the AI model. Data flows from one GPU to the next, with each one doing its part before passing the result down the line. Many real-world systems use a hybrid approach, mixing and matching these strategies to get the absolute best performance and make the most out of all the available hardware.

Requests are dynamically routed to the best-suited node or GPU, taking into account cache hits, current load, and hardware availability. This minimises redundant computation and improves overall efficiency while intermediate results (like prefix encodings) are cached and reused across requests, especially beneficial in chat or retrieval-augmented generation (RAG) scenarios where context remains stable across turns.

How can you implement it?

My two top choices for running LLMs are vLLM with Ray and Llama.cpp’s RPC server. While vLLM is arguably the more cutting-edge option, especially for high-throughput scenarios, Llama.cpp truly shines with its broader compatibility across a wider range of hardware.

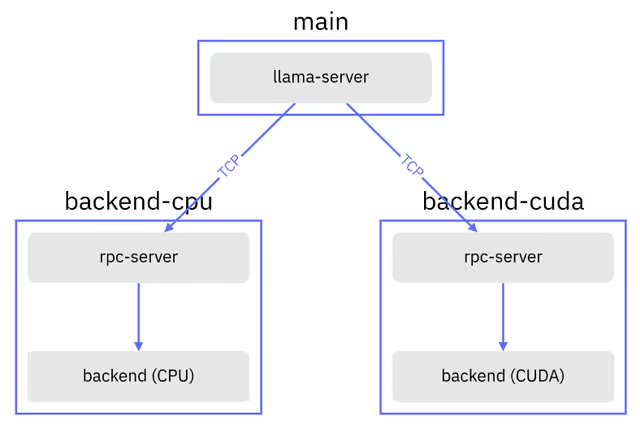

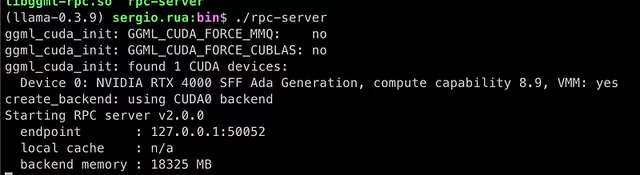

Llama.CPP RPC

The Llama.CPP implementation is probably the easiest to implement. Once compiled with RPC support, all you need is to run the server in one node and the rpc servers in the others.

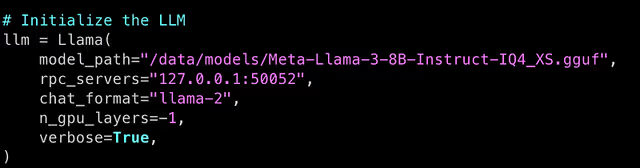

Then set up the clients to send the requests to the RPC. The rpc_servers variable is a list of available servers to distribute.

vLLM Ray

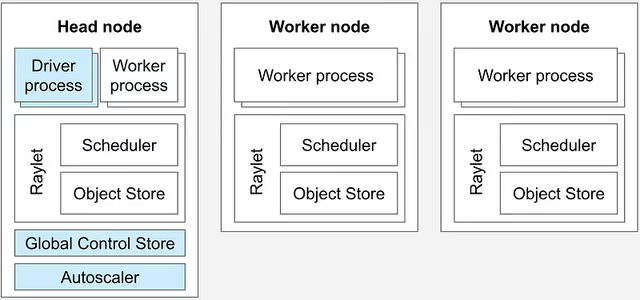

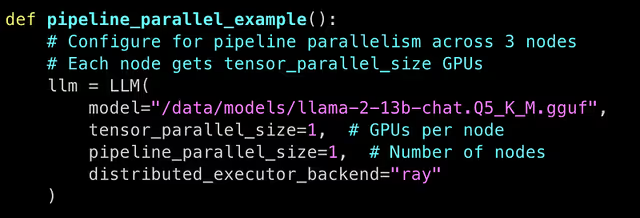

Ray coordinates resource discovery, task scheduling, and inter-node communication, ensuring that each GPU receives its designated partition and that data flows efficiently through the pipeline. Users configure the tensor parallel size (number of GPUs per node) and pipeline parallel size (number of nodes), and Ray launches and synchronises worker processes accordingly, enabling the entire cluster to function as a unified inference engine for large models that exceed the capacity of a single node.

When you initialise your LLM code, you have to tell it to use ray as well as any configuration options required, like the number of GPUs you’ll be using.

If you use Kubernetes, don’t miss out on the KubeRay project, alongside its operator, which provides an effective method for deploying vLLM, leveraging Ray, within Kubernetes clusters provisioned with GPU resources.

Conclusion

Distributed inference is not just a technical luxury — it’s a necessity for running today’s largest and most capable LLMs in production. By intelligently partitioning models, disaggregating workloads, and leveraging smart routing and caching, we can deliver fast, scalable, and cost-effective AI services that meet the demands of modern applications. As LLMs continue to evolve, distributed inference will remain at the heart of scalable AI deployment.

Contact our team for a free consultation to discuss how we can tailor our approach to your specific needs and challenges.

I, for one, welcome our new robot overlords