Data Engineering for the Enterprise: Solving Complex Problems with Confidence

In modern enterprises, data engineering is no longer just a backend function. It’s at the heart of operational resilience, compliance, and competitive edge. At Digitalis.io, our data engineering solutions are built on years of experience working with some of the largest, most regulated organisations in the world, particularly in finance, where the stakes are high and the margin for error is zero.

Our mission is to enable organisations to unlock the full value of their data infrastructure, securely, reliably, and without disruption to core business operations. This means tackling tough engineering problems head-on: from integrating diverse data sources at scale to ensuring regulatory compliance in every pipeline.

What Is Data Engineering?

Data engineering is the discipline that enables organisations to collect, transport, transform, and make sense of their data at scale. It is foundational to everything from business analytics and artificial intelligence to operational monitoring and regulatory compliance. Yet despite its growing importance, data engineering is often misunderstood or conflated with adjacent functions like data science or analytics. The reality is that data engineering is a distinct, complex, and deeply technical field, one that sits at the intersection of software engineering, infrastructure, and governance.

At its core, data engineering involves the design, construction, and maintenance of systems and pipelines that move data from its point of origin—often scattered across microservices, logs, APIs, databases, and external feeds—into formats and platforms where it can be queried, analysed, and acted upon. This includes ingesting both structured and unstructured data, often in real time, and transforming it into usable formats through ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) processes.

But in the enterprise, data engineering is about much more than just moving bytes. It’s about creating trust in data. This means implementing repeatable, observable, and resilient data flows. It means validating data quality, tracking lineage, managing schema evolution, and ensuring that every transformation is auditable. It means understanding how storage formats, indexing strategies, and partitioning schemes affect downstream performance and cost. And it means enabling safe and governed access to the right people at the right time, without ever exposing sensitive or regulated information.

Data engineers are expected to master a diverse toolkit: Apache Kafka for stream processing; Apache Spark, Flink, or dbt for transformation; distributed stores like Cassandra, ClickHouse, or Delta Lake for persistence; and orchestration platforms like Airflow, Argo, or Dagster for workflow management. But tooling alone isn’t sufficient. Enterprise data engineers must also understand security, compliance, and process discipline. For example, they must know how to design pipelines that can meet GDPR data deletion requirements, implement fine-grained RBAC and encryption at rest and in transit, and enable secure cross-environment data movement without compromising auditability.

In heavily regulated sectors like finance, healthcare, or telecoms, this discipline becomes non-negotiable. We’ve worked with large banks where every pipeline must pass multiple layers of scrutiny: not just technical testing, but also compliance checks, data privacy reviews, and operational risk assessments. This includes ensuring that data copied from production to non-production environments is appropriately masked, that retention policies are applied automatically, and that recovery plans are documented and regularly exercised.

Moreover, data engineering is a deeply collaborative practice. Engineers must work across boundaries: with security teams to define access controls, with infrastructure teams to provision scalable resources, with governance teams to classify and catalogue data, and with data consumers to ensure outputs meet expectations. A successful data engineering team combines the rigour of software engineering with the responsiveness of DevOps and the awareness of data stewardship.

Ultimately, data engineering is about building the invisible scaffolding that supports modern digital business. It requires not just code, but architecture. Not just technical execution, but operational excellence. And not just tools, but trust.

Here are some examples of our data engineering engagements.

Real-Time Data Challenges in Insurance Aggregation Platforms

One of the most demanding and revealing environments for enterprise data engineering is the insurance industry, particularly within price comparison ecosystems. For insurers serving millions of retail customers, real-time integration with aggregators such as Compare the Market, GoCompare, or MoneySuperMarket is not a luxury. It is a commercial necessity. These platforms send quote requests in vast volumes, especially during peak times, and the insurer must respond with accurate, enriched pricing within a matter of milliseconds. Behind that instantaneous customer experience is an incredibly complex, high-throughput, tightly governed data platform.

We partnered with RSA Insurance Group, one of the UK’s leading general insurers, to help them solve this exact challenge. Their Personal Lines division was struggling to harness the full value of their high-velocity data due to foundational weaknesses in their data platform. While the policy administration systems produced a rich stream of behavioural and transactional data, ingesting, transforming, and operationalising that data consistently proved elusive until our collaboration began.

At the heart of the architecture was a webMethods-based orchestration layer that enriched incoming quote requests with data from third-party services, risk models, and internal systems. These enriched payloads were landed into Apache Cassandra, which provided the high availability and write throughput necessary to handle aggressive aggregator load patterns. The data was then transformed using Apache Spark, feeding both operational dashboards and downstream analytics platforms with consistent, auditable, and performance-tuned datasets.

"The Digitalis partnership finally gave us the robust data foundation we needed to build our exploitation story. Without that in place, we could not have created the sales insight, regulatory governance and customer outcomes that helped us to deliver the best customer care results for our portfolio of almost a million retail insurance policies."

A particularly complex part of this engagement was the handling of Personally Identifiable Information (PII), a major requirement in financial services under GDPR and internal governance policies. RSA’s data teams needed access to production-like datasets in development, testing, and analytical environments, but could not risk exposing any customer PII in the process. This is a common enterprise challenge. Analysts, developers, and data scientists need high-fidelity datasets, but security and privacy teams need hard guarantees that sensitive information is never leaked.

To solve this, we designed and implemented a Spark-based PII masking framework as an integral part of the ETL pipelines. As data landed from operational systems into Cassandra, Spark jobs were triggered to identify and transform sensitive fields before any data left the secure production boundary.

Key features of the solution included:

- Automated detection of sensitive fields based on schema tagging and naming conventions.

- Support for deterministic and non-deterministic masking depending on the use case, ensuring referential integrity for testing while preserving anonymity.

- Integration into CI/CD pipelines for non-production environments, so masked datasets were always fresh and consistent with production logic.

- Auditability and traceability of every masking operation, fulfilling both internal governance policies and regulatory scrutiny.
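To make the difference between deterministic and non-deterministic masking concrete, here is a minimal Python sketch. It is an illustration only, not the framework delivered for RSA: the naming pattern, the `pii` tag, the column names, and every function name are assumptions made for this example. In a Spark job, logic like this would typically be applied per column rather than per record.

```python
import hashlib
import hmac
import re
import secrets

# Hypothetical naming convention: column names that suggest PII.
PII_NAME_PATTERN = re.compile(r"(name|email|phone|postcode|dob|address)", re.IGNORECASE)


def is_sensitive(column: str, tags: dict[str, set[str]]) -> bool:
    """Flag a column if it is tagged 'pii' or its name matches the convention."""
    return "pii" in tags.get(column, set()) or bool(PII_NAME_PATTERN.search(column))


def mask_deterministic(value: str, key: bytes) -> str:
    """Keyed hash: the same input always yields the same token, so joins still line up."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_random(_value: str) -> str:
    """Random token: nothing links the output back to the input."""
    return secrets.token_hex(8)


def mask_record(record: dict, tags: dict[str, set[str]], key: bytes,
                deterministic_columns: set[str]) -> dict:
    """Return a copy of the record with every sensitive column masked."""
    masked = {}
    for column, value in record.items():
        if value is None or not is_sensitive(column, tags):
            masked[column] = value  # non-sensitive data passes through unchanged
        elif column in deterministic_columns:
            masked[column] = mask_deterministic(str(value), key)
        else:
            masked[column] = mask_random(str(value))
    return masked


# Example: customer_id is tagged as PII and must stay joinable across tables.
record = {"customer_id": "C123", "email": "a@example.com", "premium": 412.5}
tags = {"customer_id": {"pii"}}
masked = mask_record(record, tags, key=b"example-only-key",
                     deterministic_columns={"customer_id"})
```

Deterministic masking suits identifiers that must still join across tables in test environments. Random masking suits fields where no stable link is needed. The key used for deterministic masking has to be protected, since anyone holding it can recompute tokens for known values.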

"Digitalis brought me, as the lead of the Personal Lines Data & Insight Programme, the dependable partner I needed with the data engineering experience and battle-tested best practice Cassandra product knowledge that I simply could not get elsewhere."

This PII-handling capability became foundational. It not only protected RSA’s customer data, but also unlocked safe, compliant data sharing across internal teams. It enabled the business to move faster, test more confidently, and innovate responsibly, all while satisfying FCA oversight and internal risk controls.

Together, we enabled a shift in focus from infrastructure firefighting to business value generation. RSA was able to build out its data exploitation capabilities, derive sales insight, prove regulatory governance, and deliver tangible customer outcome improvements. Our involvement also included major platform upgrades and cost optimisation projects, which helped RSA remain within vendor support boundaries while improving the platform’s performance-to-cost ratio.

This engagement perfectly illustrates what modern data engineering should deliver: a platform that enables, rather than obstructs, business outcomes. It also highlights the importance of aligning architecture with operational realities, where scalability, compliance, and maintainability are not just technical goals but business enablers.

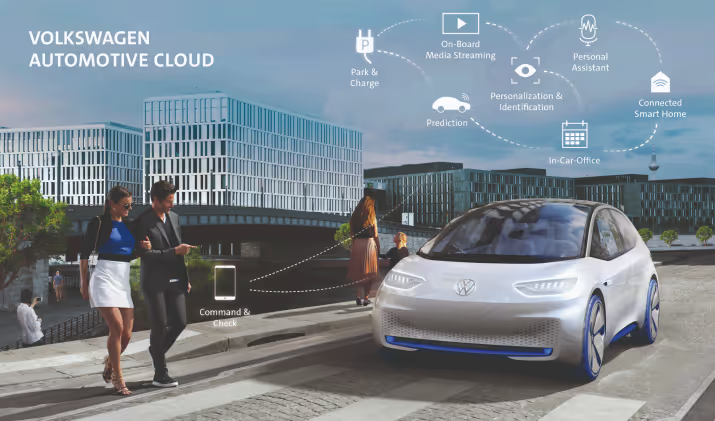

Connected Vehicle Data at Scale: Supporting Volkswagen Group’s Telemetry Platform

In the automotive industry, data flows not only from applications but from the physical world. Every connected vehicle generates a continuous stream of telemetry, including location, battery health, speed, error codes, and software versioning. For a global manufacturer like Volkswagen Group, which operates across multiple brands and markets, this data is critical. It powers predictive maintenance, remote diagnostics, feature usage analytics, and product lifecycle decisions.

Volkswagen Group engaged us to support the operation and evolution of their Connected Services platform, built on Apache Kafka and Apache Cassandra, deployed within AWS. At first glance, the challenge appeared to be about scale. In practice, it was about delivering a platform that could be trusted across technical, security, and regulatory boundaries. The business needed a foundation that was not only fast and resilient but auditable, controlled, and safe to innovate on.

Apache Kafka was responsible for real-time ingestion, receiving millions of events per second from in-vehicle gateways. Each message contained time-sensitive and often highly sensitive data. Kafka topics were tightly governed, with authentication, authorisation, and schema validation in place to prevent misuse or inconsistency. Encryption in transit and strict identity enforcement ensured secure communication between vehicle systems and cloud services.
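As a rough illustration of what schema validation on ingestion means, the Python sketch below checks a telemetry event against an expected set of fields and types before it would be accepted onto a topic. The field names and the `validate_event` function are hypothetical. Production Kafka platforms commonly enforce this with a schema registry and a format such as Avro, JSON Schema, or Protobuf, and nothing here describes the mechanism actually used on the Volkswagen Group platform.

```python
# Hypothetical telemetry schema: field name -> required Python type.
TELEMETRY_SCHEMA = {
    "vehicle_id": str,
    "event_time_ms": int,
    "latitude": float,
    "longitude": float,
    "battery_health_pct": float,
    "software_version": str,
}


def validate_event(event: dict) -> list[str]:
    """Return a list of schema violations; an empty list means the event is valid."""
    errors = []
    for field, expected in TELEMETRY_SCHEMA.items():
        if field not in event:
            errors.append(f"missing field: {field}")
        # bool is a subclass of int in Python, so reject it explicitly.
        elif isinstance(event[field], bool) or not isinstance(event[field], expected):
            errors.append(f"wrong type for {field}: expected {expected.__name__}")
    # Unknown fields are rejected so that producers cannot drift silently.
    for field in sorted(event.keys() - TELEMETRY_SCHEMA.keys()):
        errors.append(f"unexpected field: {field}")
    return errors


good_event = {
    "vehicle_id": "VEH-001",
    "event_time_ms": 1700000000000,
    "latitude": 52.42,
    "longitude": 10.78,
    "battery_health_pct": 97.5,
    "software_version": "3.2.1",
}
bad_event = {"vehicle_id": "VEH-001", "event_time_ms": "yesterday", "debug": True}

good_errors = validate_event(good_event)  # no violations
bad_errors = validate_event(bad_event)    # missing fields, a wrong type, an unknown field
```

Rejecting malformed events at the edge keeps every downstream consumer working against one agreed contract, which is what makes governed topics useful in practice.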

Apache Cassandra served as the persistent store, selected for its ability to support fast, high-volume writes and regional redundancy. The deployment spanned multiple AWS availability zones, with full encryption at rest and in transit. Data models were carefully designed to partition information in ways that supported regional access boundaries and efficient lookups by vehicle ID and time. This allowed downstream systems to query data safely without compromising isolation or performance.
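The sketch below shows one common way to model time-series data for lookups by vehicle ID and time in Cassandra: bucket each vehicle's events into one partition per day. The table name, column names, the daily bucket size, and the use of `region` in the partition key are all assumptions for illustration. The source does not describe the actual data model.

```python
from datetime import datetime, timezone

# Hypothetical CQL for such a table, shown as a string for reference only.
# The partition key groups one vehicle's events for one day in one region;
# event_time is the clustering column, so rows are sorted by time within it.
TELEMETRY_TABLE_CQL = """
CREATE TABLE telemetry_by_vehicle (
    region text,
    vehicle_id text,
    day date,
    event_time timestamp,
    payload blob,
    PRIMARY KEY ((region, vehicle_id, day), event_time)
) WITH CLUSTERING ORDER BY (event_time DESC);
"""


def partition_key(region: str, vehicle_id: str, event_time: datetime) -> tuple[str, str, str]:
    """Derive the (region, vehicle_id, day) partition key for an event.

    The day bucket is computed in UTC so that every writer agrees on it.
    """
    if event_time.tzinfo is None:
        raise ValueError("event_time must be timezone-aware")
    day = event_time.astimezone(timezone.utc).strftime("%Y-%m-%d")
    return (region, vehicle_id, day)


key = partition_key("eu", "VEH-001", datetime(2024, 3, 1, 23, 30, tzinfo=timezone.utc))
# key == ("eu", "VEH-001", "2024-03-01")
```

Bucketing by day keeps partitions bounded in size, and a query for one vehicle over a time range touches only the partitions for the days involved. The right bucket size depends on event volume per vehicle.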

Security was a first-class concern. The data included live vehicle location and behavioural signals that, if mishandled, could lead to severe privacy violations. We implemented strict role-based access controls, segregated workloads by trust boundary, and configured infrastructure with zero public exposure. All administrative access was logged, monitored, and restricted by least-privilege principles.

Just as importantly, the platform was built to be operationally robust. Observability was implemented from day one. Metrics were collected and visualised using AxonOps, with alerting tied into incident response workflows. Recovery procedures were documented and rehearsed. Infrastructure was defined and deployed via Terraform, giving the team full version control and repeatability for every environment change.

The result was a data platform that did more than just keep up with the scale of the connected vehicle ecosystem. It enabled Volkswagen Group to trust the data flowing in, share it safely across teams, and use it to power real-time and analytical use cases. From fault detection to customer experience improvements, the platform delivered measurable business outcomes without compromising on security, compliance, or operational control.

This project reinforced our belief that data engineering is not simply about pipelines or tooling. It is about enabling data to move through an organisation safely, reliably, and meaningfully. In this case, it meant turning raw telemetry into structured, governed, and accessible data that could be used to make better decisions, faster.

Conclusion

Data engineering is not just about connecting systems or processing data. It is about building trust, ensuring compliance, and enabling business teams to work with confidence. At Digitalis.io, we take a practical and disciplined approach to data engineering, shaped by years of experience working with complex enterprise environments.

From streaming data in financial services to processing connected vehicle telemetry in the cloud, we have delivered platforms that are secure, reliable, and ready for real-world demands. In every project, whether supporting regulatory requirements or enabling business analytics, we focus on long-term maintainability, operational robustness, and clarity of purpose.

Our work with RSA and Volkswagen Group shows what is possible when strong data engineering foundations are in place. These organisations did not just want data pipelines. They needed data platforms they could trust, that their teams could build on, and that would hold up under scrutiny from regulators and customers alike.

If your organisation is struggling with fragmented data systems, unreliable pipelines, or growing compliance risk, we can help. At Digitalis.io, we partner with enterprises to turn data into a strategic advantage, backed by sound engineering and operational discipline.