The post What is Apache NiFi? appeared first on digitalis.io.

If you would like to know more about how to implement modern data and cloud technologies in your business, we at Digitalis do it all: from cloud and Kubernetes migration to fully managed services, we can help you modernize your operations, data, and applications. We provide consulting and managed services on Kubernetes, cloud, data, and DevOps.

Contact us today for more information or to learn more about each of our services.

Introduction

So, what is Apache NiFi? In short, it is an open-source, flexible, feature-rich dataflow management tool. It is distributed under the terms of the Apache License and is managed and maintained by a self-selected team of technical experts who are active contributors to the project. Its main purpose is to automate the flow of data between systems.

It has a simple drag-and-drop user interface (UI) that allows users to create visual dataflows and manipulate them in real time, and it provides the user with information pertaining to audit, lineage, and backpressure.

It is easily deployed on all standard platforms, highly configurable, and data agnostic. It lets you trade off loss tolerance against guaranteed delivery and low latency against high throughput, and it supports priority-based queuing.

One can think of it as a data transfer tool that takes data from one place to another while optionally transforming it along the way. The data moves through the flow in a construct known as a FlowFile.

It supports a number of different endpoints, including but not limited to:

File-based, MongoDB, Oracle, HDFS, AMQP, JMS, FTP, SFTP, Kafka, HTTP(S) REST, AWS S3, AWS SNS, AWS SQS

This makes it a powerful tool for pulling data from external sources, routing, transforming, and aggregating it, and finally delivering it to its destination.

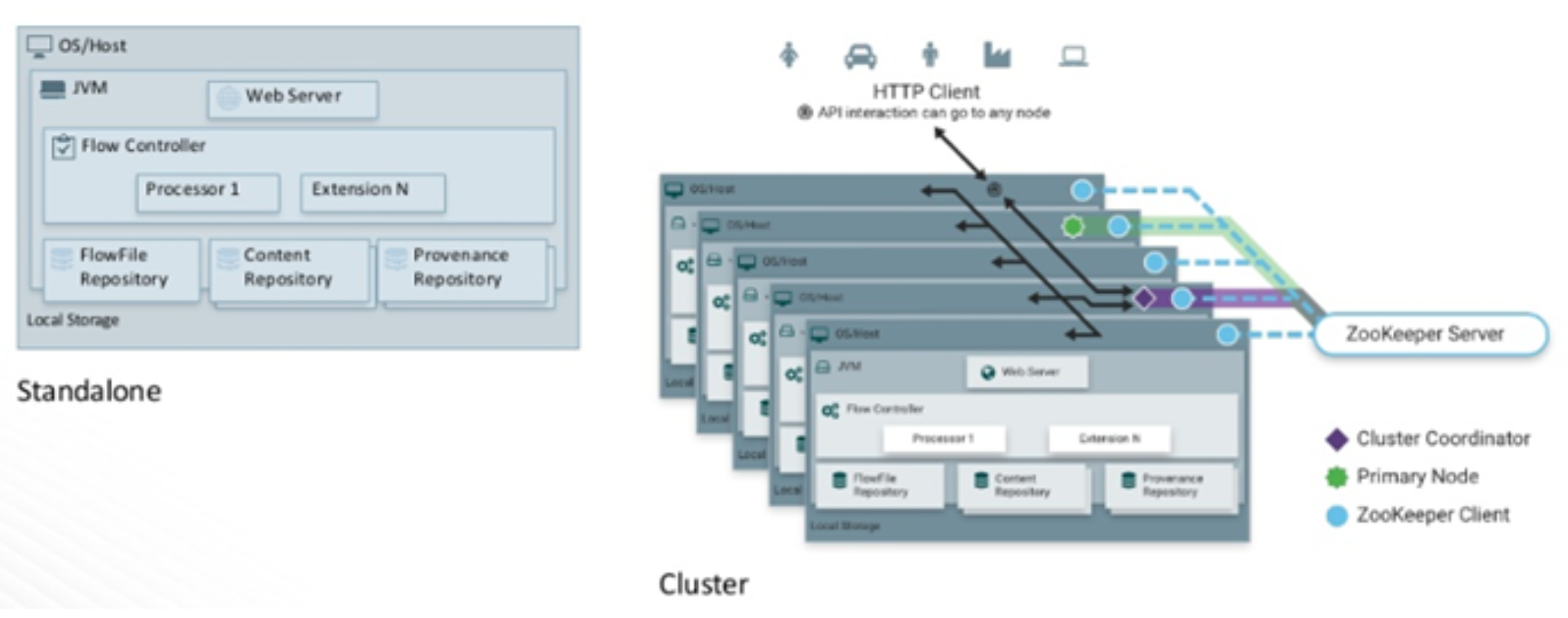

Architecture

The architecture of NiFi is simple in nature. It consists of a web server, a flow controller, and the processors it runs, all of which execute within a Java Virtual Machine (JVM). Like most Hadoop-related open-source software, it can be deployed in standalone mode or in cluster mode. In cluster mode, which is the normal way to deploy it, each node performs the same tasks on the data, but each operates on a different set of data.

Coordination and cluster management in NiFi are handled by Apache ZooKeeper. ZooKeeper elects a single node as the Cluster Coordinator, and failover is handled automatically by ZooKeeper. All cluster nodes report heartbeat and status information to the Cluster Coordinator, which is responsible for disconnecting and reconnecting nodes.

Additionally, every cluster has one Primary Node, also elected by ZooKeeper. Any change you make to the dataflow is replicated to all nodes in the cluster, allowing for multiple entry points.

For metadata and dataflow management, NiFi has three repositories:

- FlowFile Repository, which contains metadata for all the current FlowFiles in the flow.

- Content Repository, which holds the content for current and past FlowFiles.

- Provenance Repository, which holds the history of FlowFiles.

Apache NiFi components

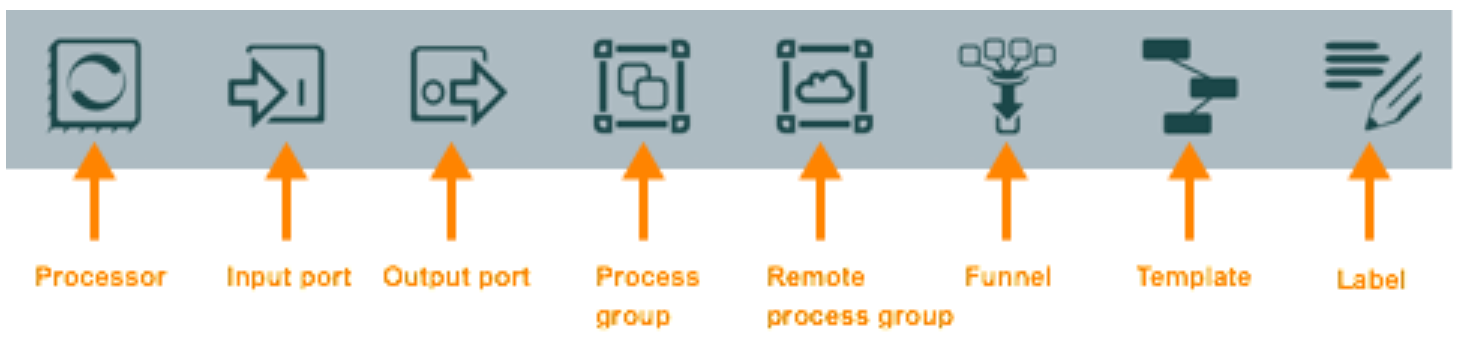

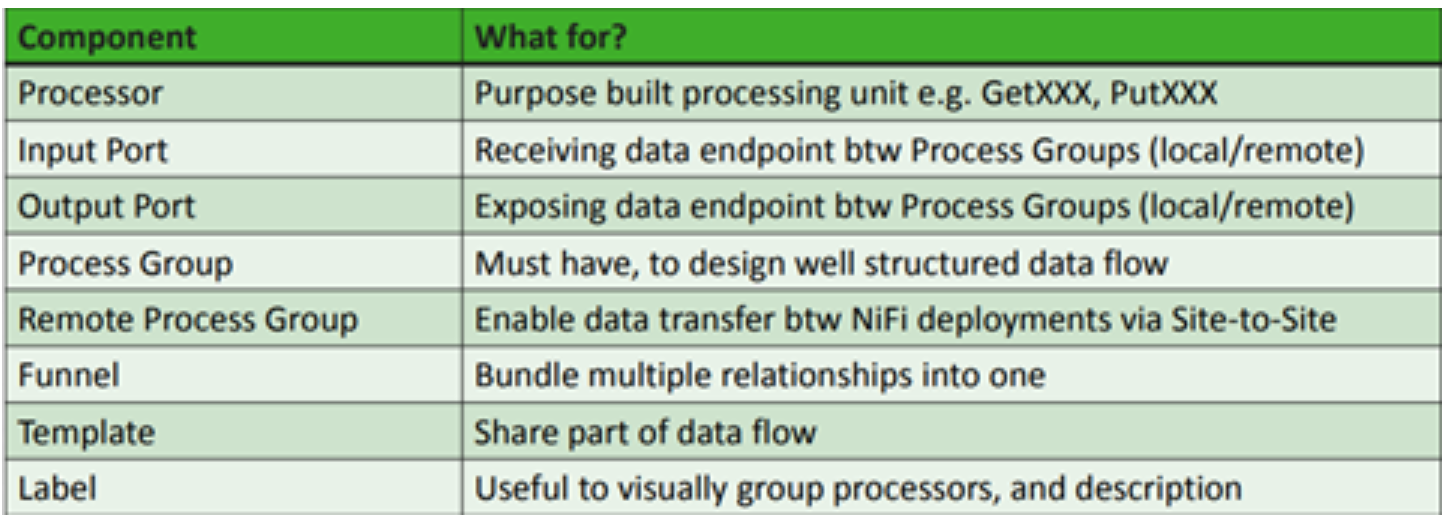

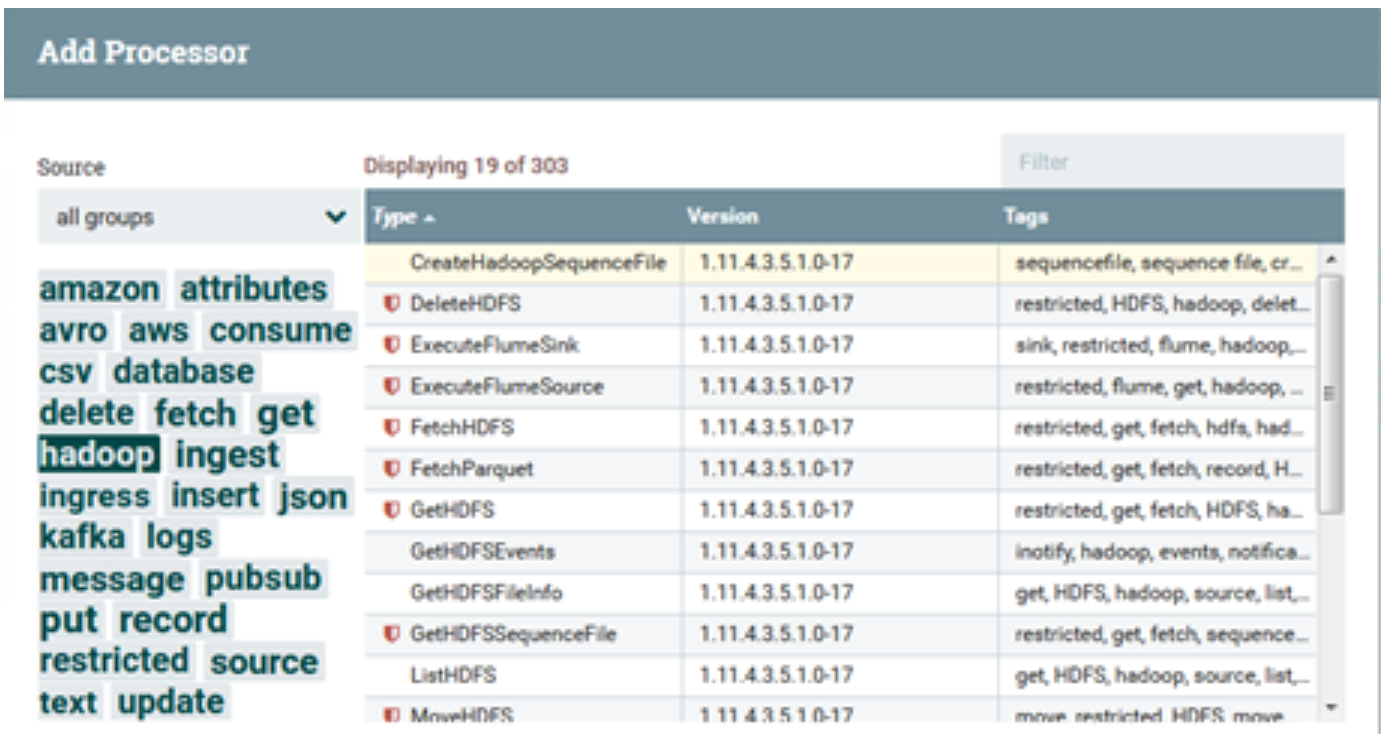

NiFi, as you can imagine, contains many different canned Processors.

Highlighting each group displays the processors associated with that group. These Processors provide capabilities to ingest data from numerous different systems, route, transform, process, split, and aggregate data, and distribute data to many systems. Custom ones can also be developed relatively easily, if required.

Creating a workflow is a simple process: just drag and drop components onto the NiFi canvas, connect them, configure each component, and start the flow. Simple as that.

Sample Flow

There are many sample flow templates available. The Apache project supplies multiple examples here. Cloudera also makes freely available sample template flows here and here.

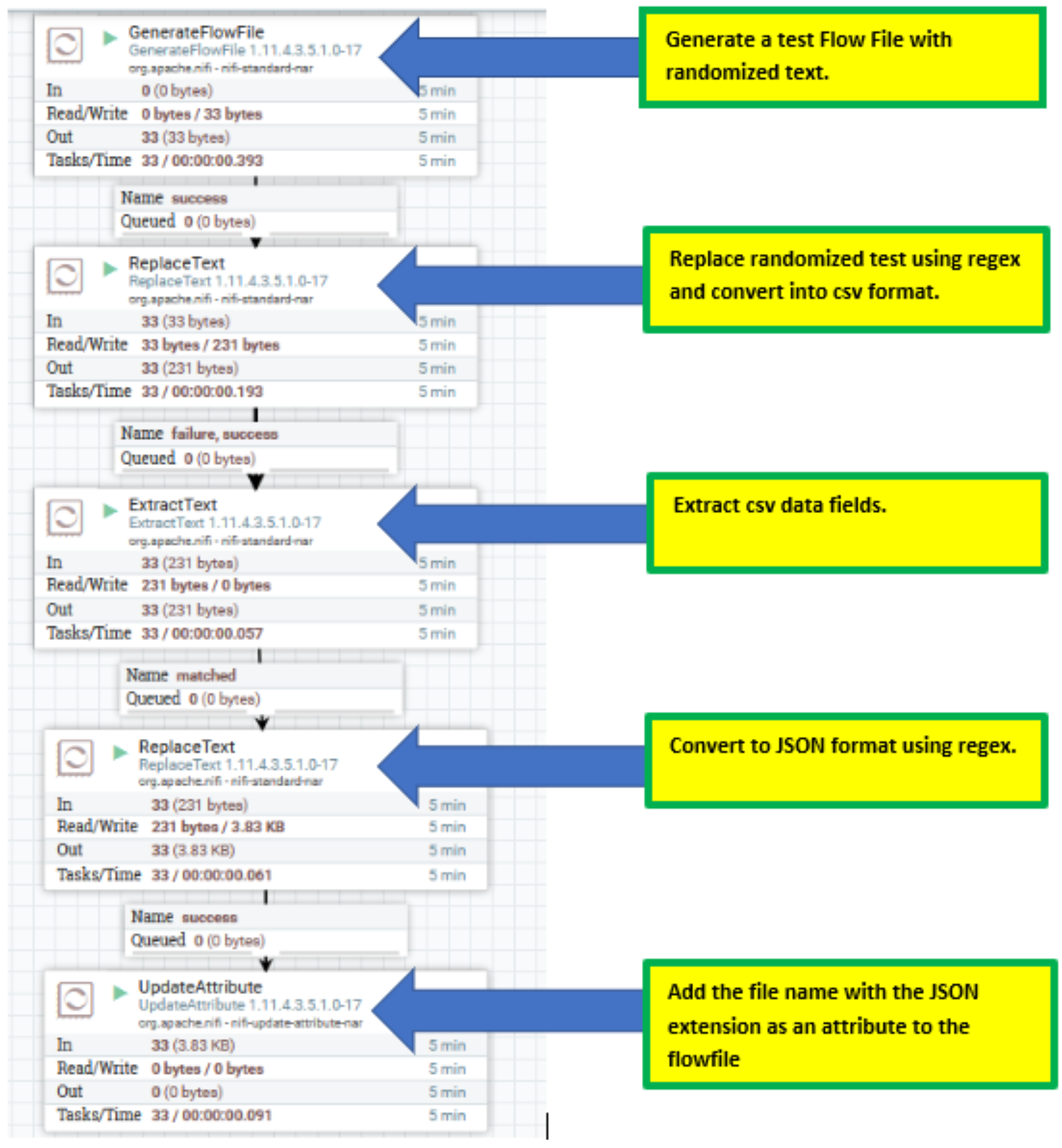

I have chosen a simple, basic flow (which you can download here). It shows how to create a FlowFile, generate a CSV entry, and convert that CSV file to a JSON document. It demonstrates the simplicity of NiFi.
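To make the conversion step concrete, here is a minimal plain-Python sketch of what the CSV-to-JSON stage of such a flow does conceptually. It is not NiFi code and uses no NiFi API; the function name `csv_to_json`, the column names, and the sample record are all invented for illustration.

```python
import csv
import io
import json


def csv_to_json(csv_text: str) -> str:
    """Convert CSV text (first row is the header) into a JSON array of objects."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return json.dumps(list(reader))


# Hypothetical one-record CSV, standing in for the generated FlowFile content.
sample = "id,name,city\n1,Ada,London\n"
print(csv_to_json(sample))
```

In NiFi itself this work is done by configuring processors on the canvas rather than by writing code; the sketch only shows the shape of the data before and after.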

Security

Authentication

NiFi supports numerous user authentication options, such as:

- Client Certificates

- SAML

- Apache Knox

- OpenID Connect

- Login Identity Providers (such as LDAP and Kerberos).

Enabling authentication, as always, can be tricky and time-consuming, but once configured, it runs smoothly.

It is important to note that NiFi does not perform user authentication over HTTP. Using HTTP, all users will be granted all roles, which is not desirable.

Authorization

Multiple options are available to control who has access to the system, and of course, the level of their access. Primarily, authorization can be implemented using multi-tenant authorization. This enables multiple groups of users (tenants) to command, control, and observe different parts of the dataflow, with varying levels of authorization. When an authenticated user attempts to view or modify a NiFi resource, the system checks whether the user has privileges to perform that action. These privileges are defined by policies that you can apply system-wide or to individual components.
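The policy check described above can be sketched roughly as follows. This is a simplified illustration, not NiFi's implementation: the `Policy` and `PolicyStore` classes, the resource strings, and the user and group names are all hypothetical, and the sketch does not model how component policies relate to system-wide ones.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    resource: str          # illustrative resource name, e.g. "/flow"
    action: str            # "read" or "write"
    identities: frozenset  # users and groups granted this action


@dataclass
class PolicyStore:
    policies: list = field(default_factory=list)

    def is_authorized(self, user: str, groups: set, resource: str, action: str) -> bool:
        """True if the user, or one of the user's groups, holds a matching policy."""
        principals = {user} | groups
        return any(
            p.resource == resource
            and p.action == action
            and bool(principals & p.identities)
            for p in self.policies
        )


# Hypothetical tenants: the "analysts" group may view the flow,
# while only "alice" may modify one particular process group.
store = PolicyStore([
    Policy("/flow", "read", frozenset({"analysts"})),
    Policy("/process-groups/ingest", "write", frozenset({"alice"})),
])
print(store.is_authorized("bob", {"analysts"}, "/flow", "read"))
print(store.is_authorized("bob", {"analysts"}, "/process-groups/ingest", "write"))
```

The point of the sketch is that access is decided per resource and per action, and that a user can be granted access either directly or through a group.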

Handily, NiFi supplies a number of UserGroupProvider implementations. The default one is FileUserGroupProvider. Another option is LdapUserGroupProvider, which syncs users and groups from a directory server and presents them in the NiFi UI in read-only form.

A popular authorizer to use alongside these providers is the Apache Ranger authorizer, which uses Apache Ranger to make access decisions. Apache Ranger provides a central location for managing users, groups, and policies, as well as a mechanism for auditing access decisions. Its auditing capabilities make it a popular choice, although, admittedly, it can be hard to configure.

You can also, if required, develop your own customized UserGroupProviders as extensions.

Encryption In Flight

NiFi provides a number of command-line utilities, one being the TLS Toolkit. This toolkit allows one to automatically generate the required keystores, truststore, and relevant configuration files, enabling SSL/TLS encryption in an easy and secure manner. This is especially useful for securing multiple NiFi nodes, which can be a tedious and error-prone process. For more information, see the TLS Toolkit section in the NiFi Toolkit Guide.

Encryption At Rest

It is my understanding that, in future releases, hardware security modules (HSM) and external secure storage mechanisms will be integrated into NiFi, but for now, an AES encryption provider is the default implementation.

Provenance and Data Lineage

A serious concern in any dataflow routing and management platform is tracking what happens to the data from its point of origin to its endpoint. NiFi addresses this by automatically recording everything that happens to your data at a very granular level.

Any event (data being created, ingested, routed, modified, tagged, viewed, exported, or deleted) is recorded, along with the time, the identity of the component that acted on it, where it was sent, what was changed, etc.

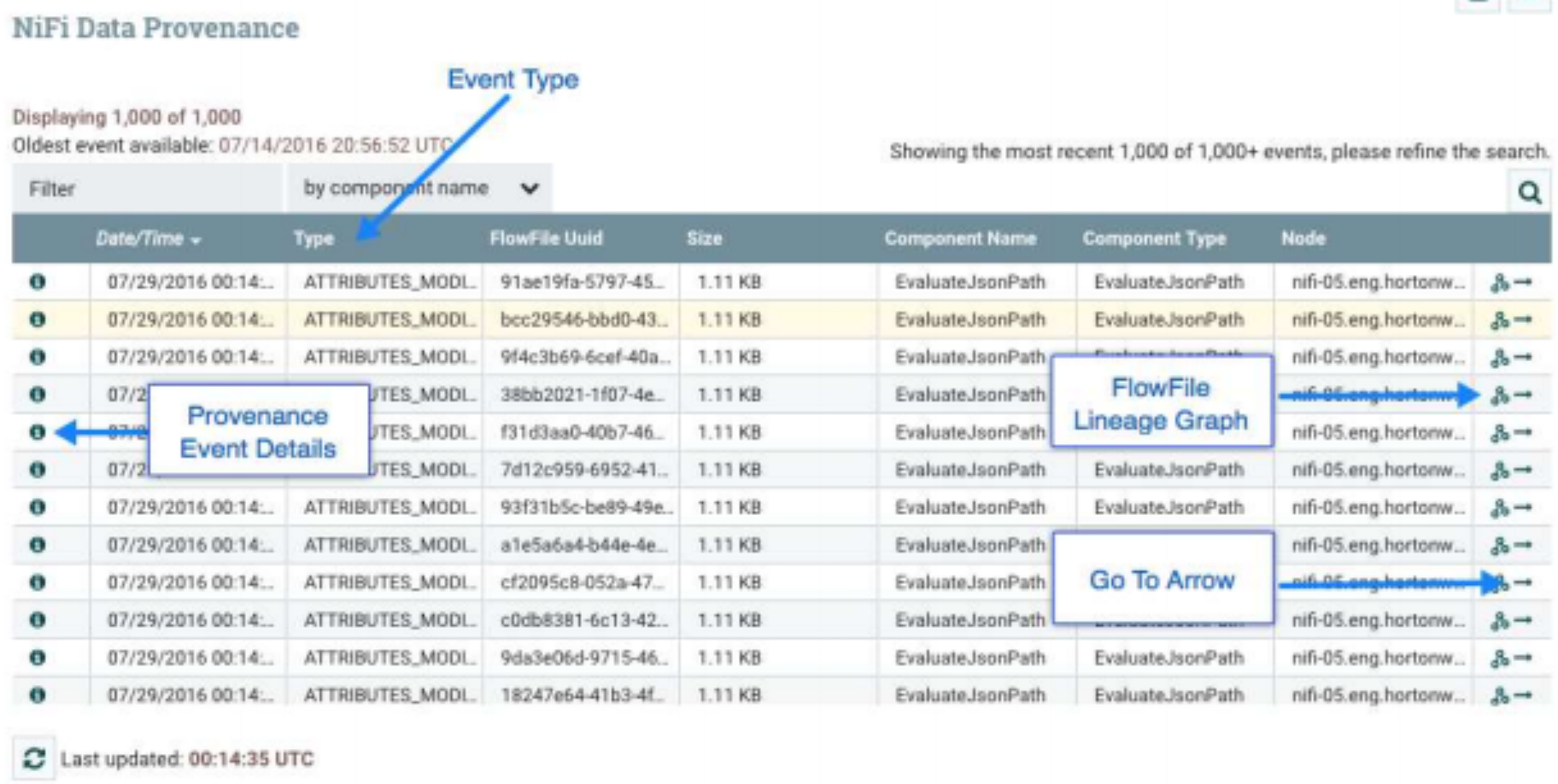

Provenance is fully configurable, and users can explore the provenance chain (the directed graph representation of the provenance events) of any FlowFile to review the path it followed through the dataflow.

The image above outlines how, for each processor and connection within NiFi, one can click on the component and inspect the data provenance. We are provided with a table of provenance events based on our search criteria.

In addition to viewing the details of a Provenance event, we can also view the lineage of the FlowFile involved by clicking on the Lineage Icon from the table view.

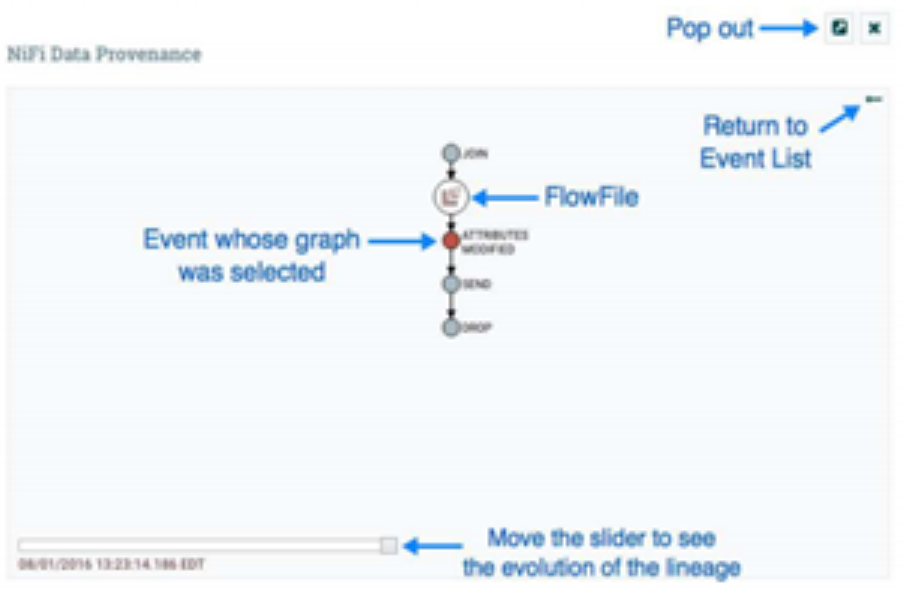

This provides us with a graphical representation of exactly what happened to that piece of data as it traversed the system. The slider in the bottom-left corner allows us to see the time at which these events occurred. By sliding it left and right, we can see which events introduced latency into the system so that we have a very good understanding of where in our system we may need to provide more resources, such as the number of Concurrent Tasks for a Processor.
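The ideas above (an event record per action, a per-FlowFile lineage, and spotting where latency was introduced) can be sketched in a few lines of Python. This is an illustrative model only, not NiFi's provenance API: the `ProvenanceEvent` class, the `lineage` and `slowest_step` helpers, and the sample data are assumptions made for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ProvenanceEvent:
    flowfile_id: str
    event_type: str     # e.g. "CREATE", "CONTENT_MODIFIED", "SEND"
    component: str      # name of the component that acted on the FlowFile
    timestamp: datetime


def lineage(events, flowfile_id):
    """Return the events for one FlowFile in chronological order."""
    return sorted(
        (e for e in events if e.flowfile_id == flowfile_id),
        key=lambda e: e.timestamp,
    )


def slowest_step(chain):
    """Return the pair of consecutive events with the largest time gap.

    Expects a chain of at least two events.
    """
    return max(
        zip(chain, chain[1:]),
        key=lambda pair: pair[1].timestamp - pair[0].timestamp,
    )


# Hypothetical events for two FlowFiles, deliberately out of order.
t0 = datetime(2024, 1, 1, 12, 0, 0)
events = [
    ProvenanceEvent("ff-1", "SEND", "PutFile", t0 + timedelta(seconds=9)),
    ProvenanceEvent("ff-1", "CREATE", "GenerateFlowFile", t0),
    ProvenanceEvent("ff-2", "CREATE", "GenerateFlowFile", t0 + timedelta(seconds=1)),
    ProvenanceEvent("ff-1", "CONTENT_MODIFIED", "ConvertRecord", t0 + timedelta(seconds=2)),
]

chain = lineage(events, "ff-1")
print([e.event_type for e in chain])

before, after = slowest_step(chain)
print(f"Largest gap is between {before.component} and {after.component}")
```

The largest gap between consecutive events is a rough analogue of what the slider in the lineage view lets you see by eye.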

In later releases of NiFi, you also have the ability to replay FlowFiles. As long as the provenance data has not been archived and the referenced content is still present in the content repository, any FlowFile can be replayed from any point in the flow. This greatly enhances the flow development lifecycle: rather than using a “build and deploy” cycle, the replay feature allows continuous refinement of a flow as the data flows through different branches. It lets the end user understand the exact context in which the FlowFile was processed and iterate on the flow until it processes the data exactly as intended.

Development

NiFi is, at its core, an extensible architecture. As such, it is a platform on which dataflow processes can execute and interact in a predictable and iterative manner. Extension points include Processors, Controller Services, Reporting Tasks, and Custom User Interfaces.

It provides a custom class loader model, ensuring that each extension bundle is exposed to a very limited set of dependencies. As a result, extensions can be built with little concern for dependencies with other extensions.

NiFi provides an Expression Language for configuring processor properties. So what does that give you? You can read more about it here, but in short, an expression such as ${UserName} in a property value is replaced with the value of the UserName attribute. If a user instead wants the property value to be the literal text Hello ${UserName}, this can be accomplished by using an extra $ (dollar sign) just before the expression to escape it (i.e., Hello $${UserName}).
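The escaping rule is easier to see in a small example. The sketch below is a deliberately simplified Python model of attribute substitution and the $$ escape, not the real Expression Language evaluator. The `evaluate` function is hypothetical, it ignores functions and nested expressions, and treating a missing attribute as an empty string is an assumption of the sketch.

```python
import re

# "$$" followed by "{...}" is an escaped reference; a single "$" is a live one.
_REFERENCE = re.compile(r"(\$\$|\$)\{([^{}]+)\}")


def evaluate(value: str, attributes: dict) -> str:
    """Replace ${name} with the attribute value; turn $${name} into literal ${name}."""
    def replace(match):
        marker, name = match.groups()
        if marker == "$$":
            # Escaped: drop one "$" and keep the text as a literal.
            return "${" + name + "}"
        # Assumption for this sketch: a missing attribute becomes an empty string.
        return str(attributes.get(name, ""))

    return _REFERENCE.sub(replace, value)


attrs = {"UserName": "Ada"}
print(evaluate("Hello ${UserName}", attrs))   # substituted
print(evaluate("Hello $${UserName}", attrs))  # kept as literal text
```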

It provides greater functionality than that, of course. One can use a wide range of built-in functions to generate dynamic flows, manipulating flow component attributes dynamically as you wish. Functions available in the NiFi Expression Language include string manipulation, maths operations, if-then-else logic, date and time operations, and encoding.

Excellent blogs have already been written about the NiFi Expression Language; take a look here and here.

It is important to note that not all Processor properties support the Expression Language. That is determined by the developer of the Processor and how the Processor is written. However, to be fair, NiFi does clearly indicate for each property whether or not the Expression Language is supported.

For development testing, NiFi provides a nifi-mock module that can be used in conjunction with JUnit to provide extensive testing of components. The Mock Framework is mostly aimed at testing Processors. However, the framework does provide the ability to test Controller Services as well.

Summary

NiFi is an excellent dataflow tool. It is easily extensible, highly scalable, secure, user friendly, performant, and easy to learn. End users can command and control flows visually and can configure error-handling capabilities relatively easily. Importantly, it guarantees delivery, even at extremely high scale.

It has the ability to buffer queued data and handle backpressure, which makes it highly effective for flow management. From a security point of view, it delivers on all the main security tenets that make a product secure and enterprise ready.

From a personal point of view, its ease of use, rich feature set, and ability to extend and scale with ease make me a big fan. I look forward to later releases and I sincerely hope the product grows from strength to strength.

At Digitalis we have extensive experience dealing with data in complex and critical environments. We are experts in data and streaming technologies along with DevOps and DataOps practices. If you would like to know more, please let us know.
